I’ve been meaning to write about a real cloud-based project for some time, but the criteria a good candidate project needs to meet are challenging:

- Significant enough to illustrate numerous design and implementation decisions

- Not so large that the time investment for a reader to get into it is prohibitive

- I need to own, or have free access to, the intellectual property

- It needs to be something I want, or am contracted, to build for reasons beyond writing about it

To expand upon that last point a little – I don’t have the time to build something just for a series of blog posts and if I did I suspect it would be too artificial and essentially would end up a strawman.

The real world and real development are constrained and messy: you come across things that you can’t economically solve in an ivory-tower fashion. You can’t always predict everything in advance, you get things wrong, and you don’t always have the time available to start again, so you have to do the best that you can with what you have.

In the case of this project I hadn’t really thought about it as a candidate for writing about until I neared the end of building the MVP and so it comes, rather handily, with warts and all. For sure I’ve refactored things but no more than you’d expect to on any time and budget constrained project.

My intention is, over the course of a series of posts, to explore this application end to end: the requirements, the architecture, the code, testing, deployment – pretty much its whole lifecycle. Hopefully this will contain useful nuggets of information that can be applied on other projects and help those new to Azure get up and running.

About the project

So what does the project do?

If you’re a keen cyclist you’ll know that you need to check various components on your bike at regular intervals. You’ll also know that some of the components last just long enough that you’ll forget about them – my personal nemesis is chain wear. More than once I’ve completely forgotten about it and taken it to the point where it is likely to start damaging the rear cassette.

I’m fortunate enough to have a rather nice bike, so there is nothing cheap about replacing anything and this is really not a mistake you want to be making. Many bikes also now contain components that need charging – Di2 and eTap are increasingly common and though I’ve yet to get caught out on a ride I’ve definitely run it closer than I realised.

After the last time I made this mistake I decided to do something about it and thus was born Bike Reminders: a website that links up with Strava to send you reminders as you accrue mileage on each of your bikes. While not a substitute for regularly checking your bike I’m hopeful it will at least give me a prod over chain wear! I contemplated going direct to Garmin but they seem to want circa $5000 for API access and that’s a lot of component damage before I break even – ouch.

In terms of an MVP that distilled out into a handful of high level requirements:

- Authenticate with Strava

- Access a user’s bikes in Strava

- Allow a mileage based maintenance schedule to be set up against a bike

- Allow email reminders to be dismissed / reset

- Allow email reminders to be snoozed

- Update the progress towards each reminder based on rider activity in Strava
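
To make these requirements concrete, here is a minimal sketch of how a mileage-based reminder could track progress, fire, be snoozed and be reset. It is not the Bike Reminders code: the `Reminder` shape, its field names and the four functions are all hypothetical, and it assumes distances have already been converted to miles.

```typescript
// Hypothetical reminder shape, for illustration only.
interface Reminder {
  name: string;
  intervalMiles: number;            // how often the reminder should fire
  lastResetAtMiles: number;         // bike odometer reading at the last dismiss / reset
  snoozedUntilMiles: number | null; // stay quiet until the odometer passes this
}

// Fraction of the interval used up: 0.5 means halfway to the next reminder.
function progress(reminder: Reminder, bikeMiles: number): number {
  return (bikeMiles - reminder.lastResetAtMiles) / reminder.intervalMiles;
}

// A reminder is due once the interval has elapsed and any snooze has expired.
function isDue(reminder: Reminder, bikeMiles: number): boolean {
  const snoozed =
    reminder.snoozedUntilMiles !== null && bikeMiles < reminder.snoozedUntilMiles;
  return !snoozed && progress(reminder, bikeMiles) >= 1;
}

// Dismissing restarts the interval from the current odometer reading.
function dismiss(reminder: Reminder, bikeMiles: number): Reminder {
  return { ...reminder, lastResetAtMiles: bikeMiles, snoozedUntilMiles: null };
}

// Snoozing postpones the reminder by some extra miles without resetting it.
function snooze(reminder: Reminder, bikeMiles: number, extraMiles: number): Reminder {
  return { ...reminder, snoozedUntilMiles: bikeMiles + extraMiles };
}
```

Keeping the functions pure (each returns a new `Reminder` rather than mutating one) is just one possible design choice; it makes the rules easy to test independently of Strava or storage.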

There were also some requirements I wanted to keep in mind for the future:

- Time based reminders

- “First ride of the week” type reminders

- Allow reminders to be sent via push notifications

- Predictive information – based on a rider’s history, when is a reminder likely to be triggered? This is useful if you’re going away on a training camp, for example, and want to get maintenance done before you go

Setting off on the project I set a number of overarching goals / non-functional requirements for it:

- Keep it small enough that it could be built alongside a two (expanded to three!) week cycling training block in Mallorca

- To have a very low cost to run both in terms of minimum footprint (the cost to run it for a single user) and per user cost as the system scales up

- To require little to no maintenance and a fully automated delivery mechanism

- To support multiple client types (initially web but to be followed up with a Flutter app)

- Keep personal data out of it as far as possible

- As far as possible spin out any work that isn’t specific to the problem domain as open source (I’m fairly likely to reuse it myself if nothing else)

And although I try not to jump ahead of myself that mapped nicely onto using Azure as a cloud provider with Azure Functions for compute and Azure DevOps and Application Insights for the operational side of things.

Architecture

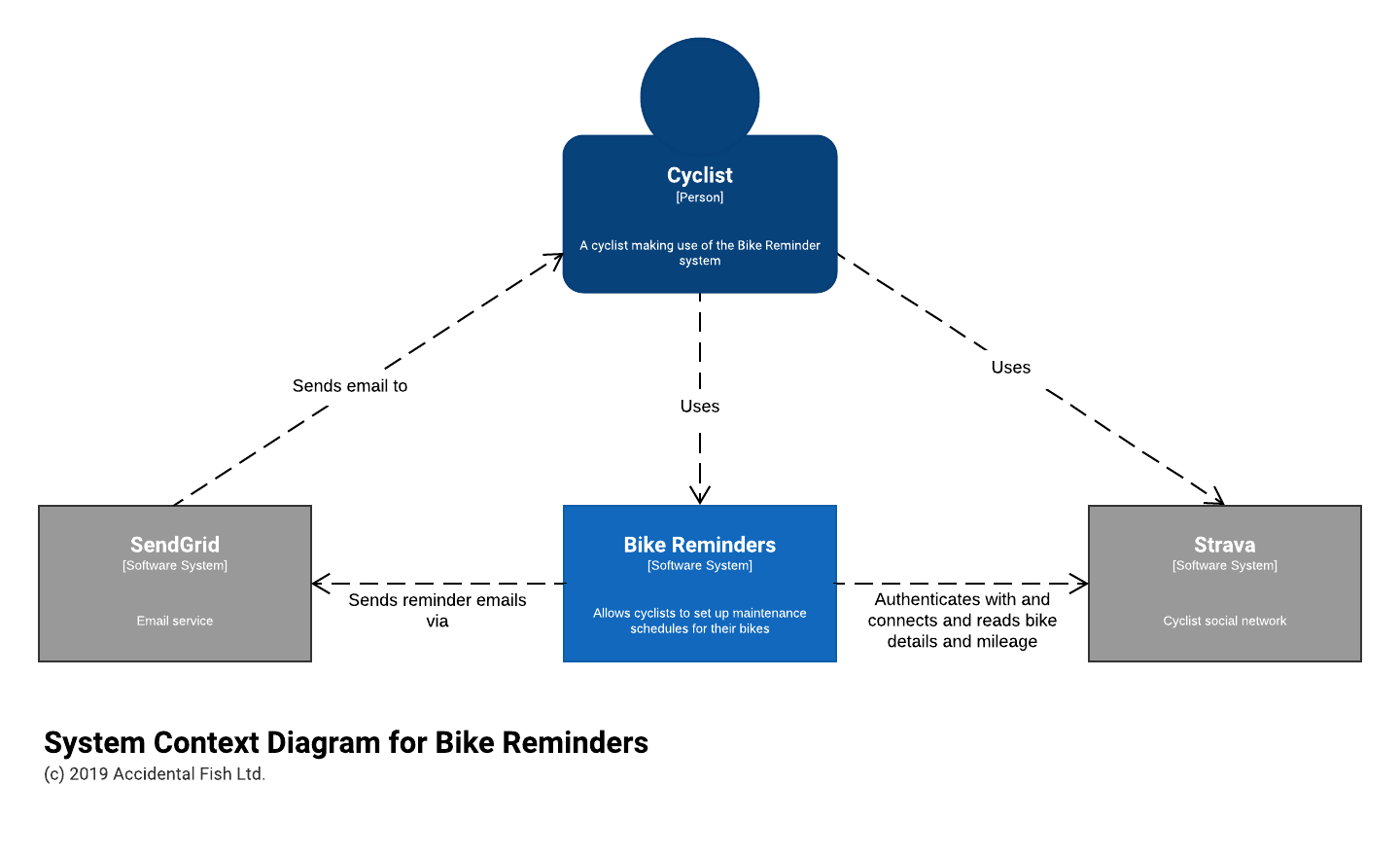

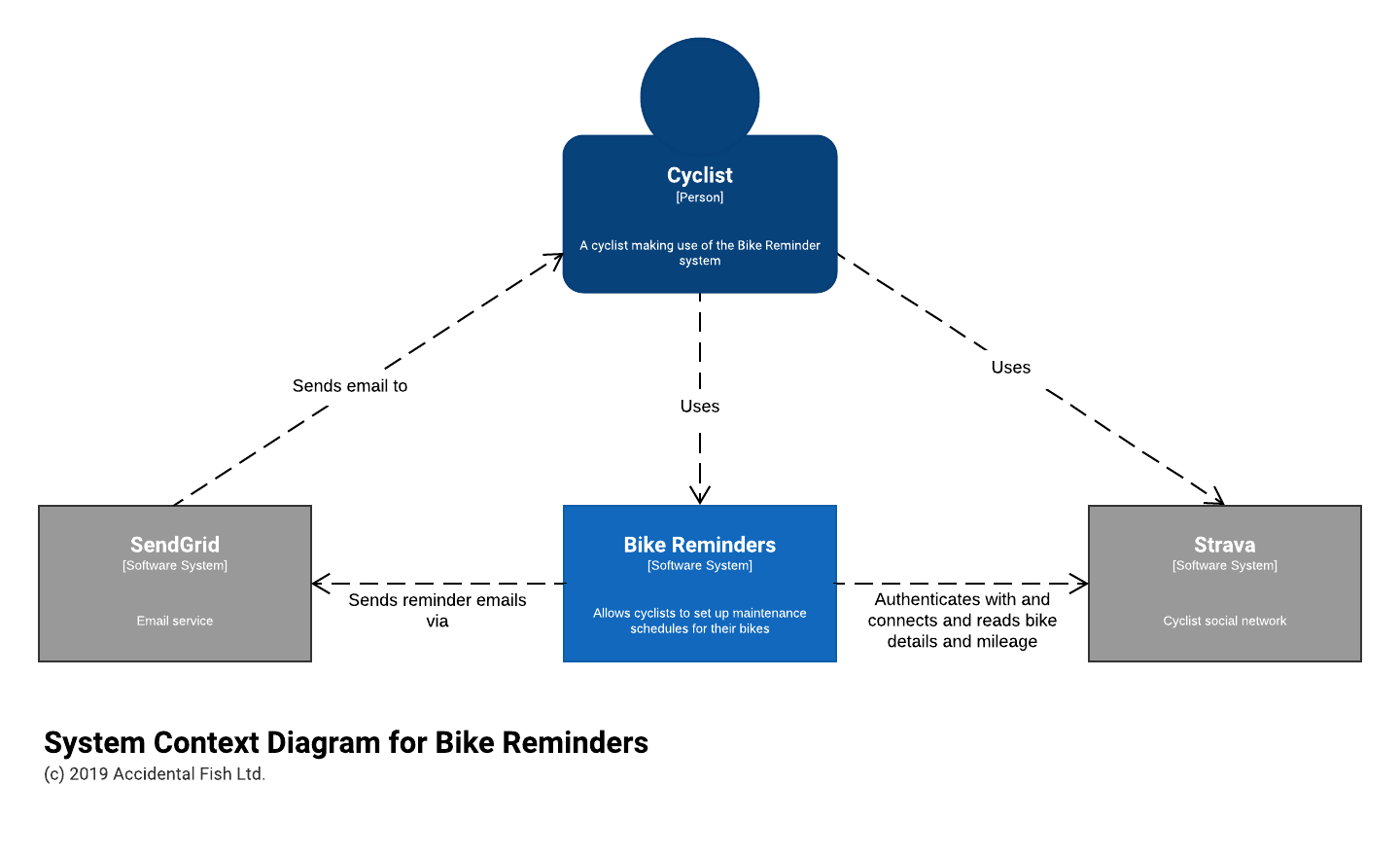

The next step was to figure out what I’d need to build – initially I worked this through on a “mental beermat” while out cycling but I like to use the C4 Model to describe software systems. It gives a basic structure and just enough tools to think about and describe systems at different levels of architecture without disappearing up its own backside in complexity and becoming an end in and of itself.

System Context

For this fairly simple and greenfield system establishing the big picture was fairly straightforward. It’s initially going to comprise a website accessed by cyclists with their Strava logins, connecting to Strava for tracking mileage, and sending emails for which I chose SendGrid due to existing familiarity with it.

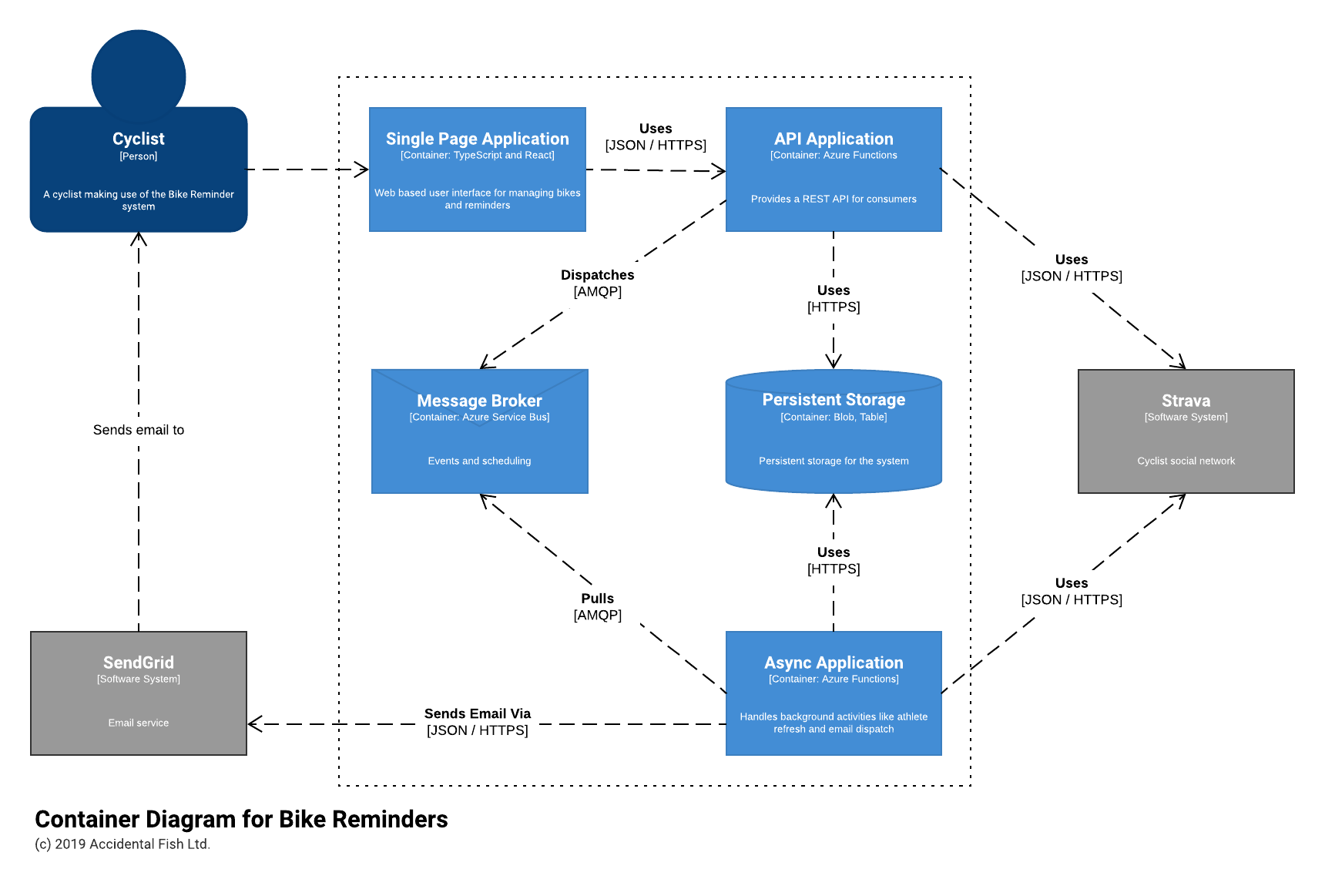

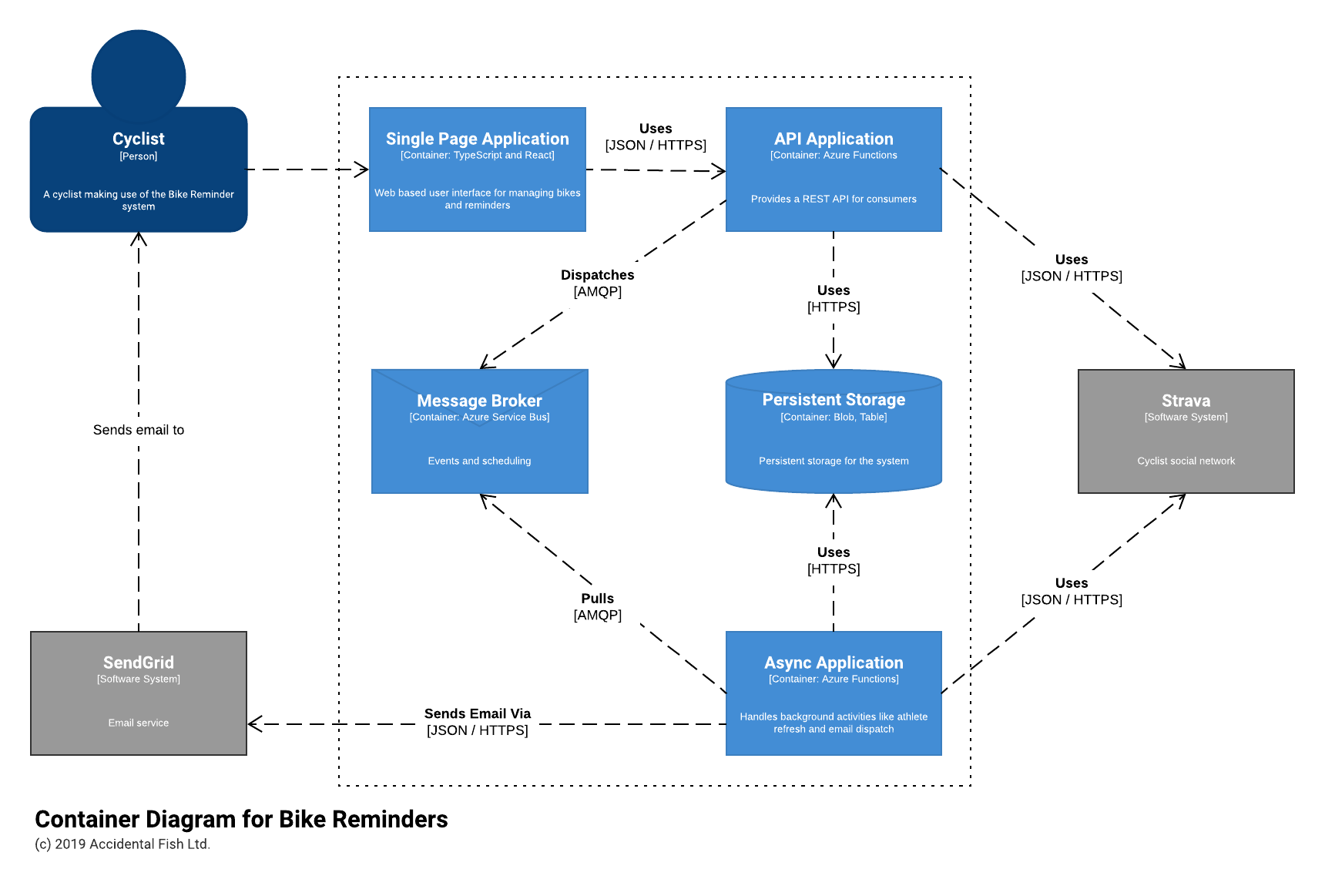

Containers

Breaking this down into more detail forced me to start making some additional decisions. If I was going to build an interactive website / app I’d need some kind of API for which I decided to use Azure Functions. I’ve done a lot of work with them, have a pretty good library for building REST APIs with them (Function Monkey) and they come with a generous free usage allowance which would help me meet my low cost to operate criterion. The event based programming model would also lend itself to handling things like processing queues which is how I envisaged sending emails (hence a message broker – the Azure Service Bus).

For storage I wanted something simple – although at an early stage it seemed to me that I’d be able to store all the key details about cyclists, their bikes and reminders in a JSON document keyed off the cyclist’s ID. And if something more complex emerged I reasoned it would be easy to convert this kind of format into another. Again cost was a factor and as I couldn’t see, based on my simple requirements, any need for complex queries I decided to at least start with plain old Azure Storage Blob Containers and a filename based on the ID. This would have the advantage of being really simple and really cheap!
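
As an illustration of the one-document-per-cyclist idea, a minimal sketch might look like the following. The document shape, field names and the `.json` naming scheme are assumptions made for the example rather than the format the project actually uses, and no Azure SDK calls are shown: the functions only produce the blob name and the JSON text that would be written to or read from the container.

```typescript
// Hypothetical document shape; field names are assumptions for illustration.
interface ReminderDocument {
  name: string;
  intervalMiles: number;
}

interface BikeDocument {
  id: string;
  name: string;
  reminders: ReminderDocument[];
}

interface CyclistDocument {
  cyclistId: string;
  bikes: BikeDocument[];
}

// One blob per cyclist, with the filename derived from the cyclist's ID.
// The ID is encoded so unexpected characters cannot alter the blob path.
function blobNameFor(cyclistId: string): string {
  return `${encodeURIComponent(cyclistId)}.json`;
}

// The whole aggregate is stored as a single JSON string.
function serialise(doc: CyclistDocument): string {
  return JSON.stringify(doc);
}

// Reading it back is a single parse; no query engine is involved.
function deserialise(json: string): CyclistDocument {
  return JSON.parse(json) as CyclistDocument;
}
```

Because every read and write goes through the cyclist’s ID, a lookup is a single blob fetch by name, which is why the lack of a query capability is not a problem for requirements this simple.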

The user interface was a simple decision: I’ve done a lot of work with React and I saw no reason it wouldn’t work for this project. Over the last few months I’ve been experimenting with TypeScript and I’ve found it of help with the maintainability of JavaScript projects and so decided to use that from the start on this project.

Finally I needed to figure out how I’d most likely interact with the Strava API to track changes in mileage. They do have a push API that is available by email request but I wanted to start quickly (this was Christmas and I had no idea how soon I’d hear back from them). I’d also probably have to do some buffering around the ingestion to prevent confusing short term adjustments: when you upload a route it’s not necessarily associated with the right bike (for example my Zwift rides always end up on my main road bike, not my turbo trainer mounted bike).

So to begin with I decided to poll Strava once a day for updates, which would require some form of scheduling. While I wasn’t expecting the website to attract huge numbers of users overnight, Strava do rate limit their APIs and so I couldn’t simply use a timer function in Azure to poll for every athlete at once, as that would run the risk of overloading the API quite easily. Instead I figured I could use enqueue visibility on the Service Bus (scheduling when each message becomes visible to consumers) and spread out athletes so that the API would never be overloaded. I’ve faced a similar issue before and so I figured this might also make for a useful piece of open source (it did).
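
The spreading idea can be sketched as a small calculation: give each athlete an evenly spaced slot within the polling window, never closer together than a minimum gap derived from the rate limit. This is an assumption-laden illustration, not the open source scheduler mentioned above: `spreadPolls`, `ScheduledPoll` and the `minSpacingMs` parameter are hypothetical names, and actually sending each message with its scheduled enqueue time is left out.

```typescript
// Hypothetical result type: when the poll message for an athlete should become visible.
interface ScheduledPoll {
  athleteId: string;
  enqueueAt: Date;
}

// Spread one poll per athlete evenly across a window, but never closer
// together than minSpacingMs (a value you would derive from the rate limit).
// If there are too many athletes to fit, the schedule runs past the window
// rather than breaking the minimum spacing.
function spreadPolls(
  athleteIds: string[],
  windowStart: Date,
  windowMs: number,
  minSpacingMs: number
): ScheduledPoll[] {
  if (athleteIds.length === 0) {
    return [];
  }
  const evenSpacingMs = windowMs / athleteIds.length;
  const spacingMs = Math.max(evenSpacingMs, minSpacingMs);
  return athleteIds.map((athleteId, index) => ({
    athleteId,
    enqueueAt: new Date(windowStart.getTime() + Math.floor(index * spacingMs)),
  }));
}
```

Each `enqueueAt` would then be used as the scheduled enqueue time of that athlete’s message, so the queue consumer sees a steady trickle of work instead of one burst.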

All this is summarised in the diagram below:

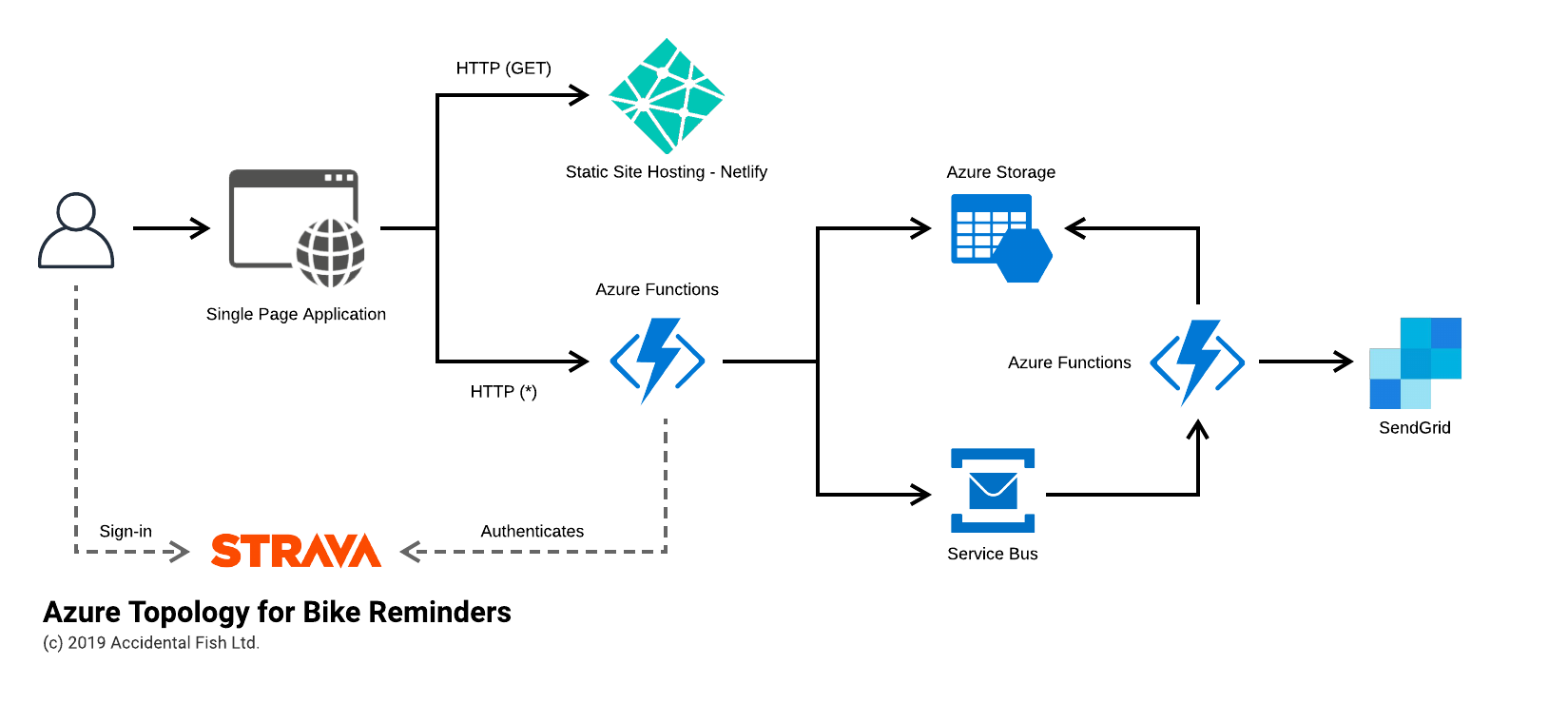

Azure Topology

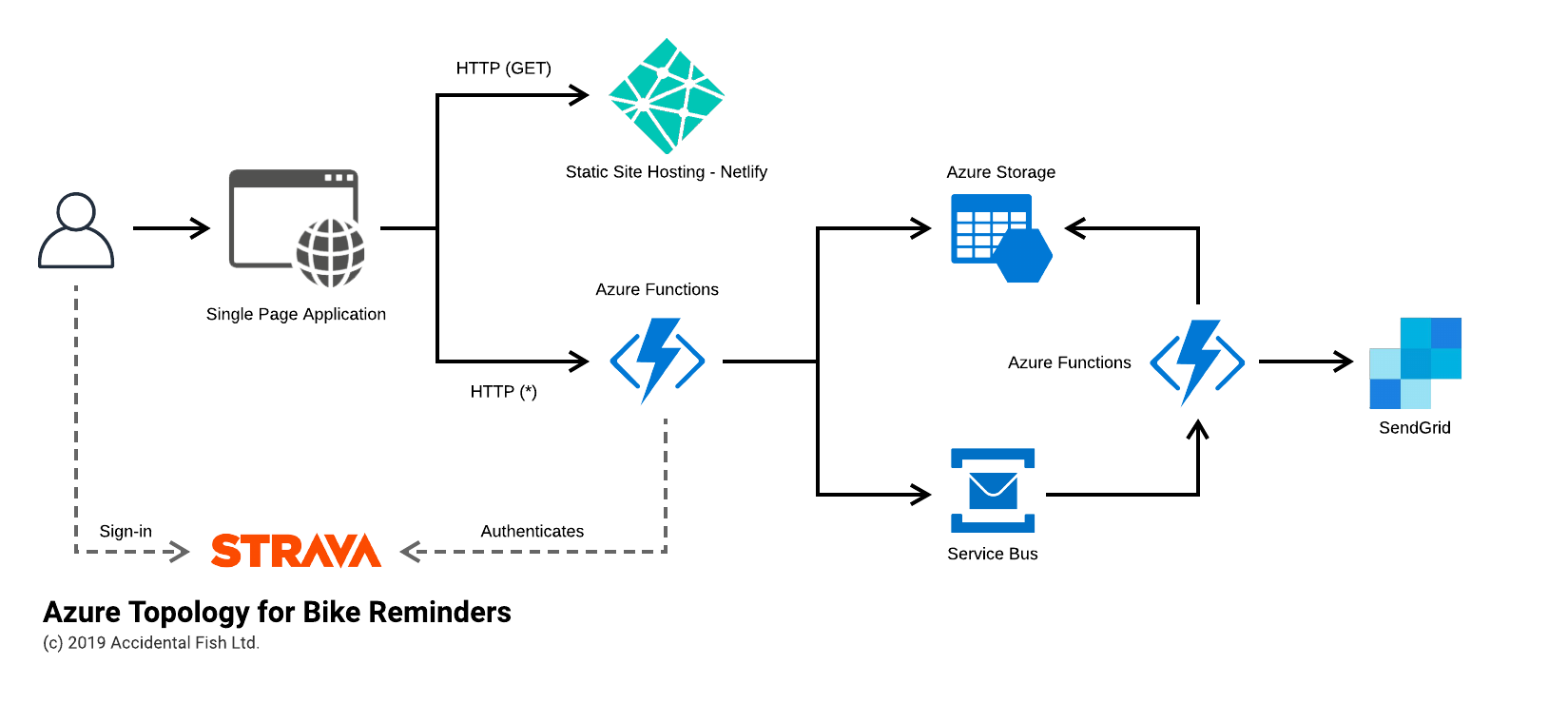

Mapped (largely) onto Azure I expected the system to look something like the below:

The notable exception is the introduction of Netlify for my static site hosting. While you can host static sites on Azure it is inelegant at best (and the Azure Storage SPA support is useless as you can’t use SSL and a custom domain) and so a few months back I went searching for an alternative and came across Netlify. It makes building, deploying and hosting sites ridiculously easy and so I’ve been gradually switching my work over to it.

I also, currently, don’t have API Management in front of the Azure Functions that present the REST API – the provisioned approach is simply too expensive for this system at the moment and the consumption model, at least at the time of writing, has a horrific cold start time. I do plan to revisit this.

Next Steps

In the next part we’ll break out the code and begin by taking a look at how I structured the Azure Function app.
