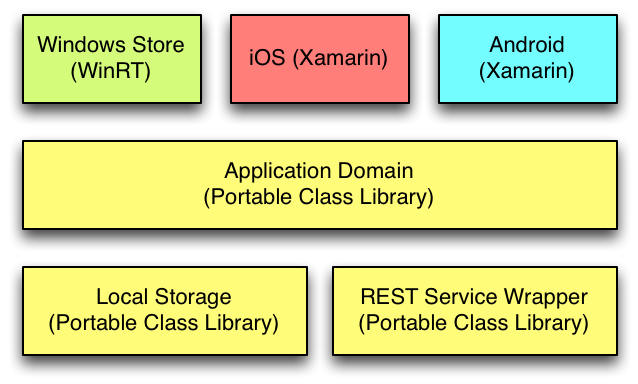

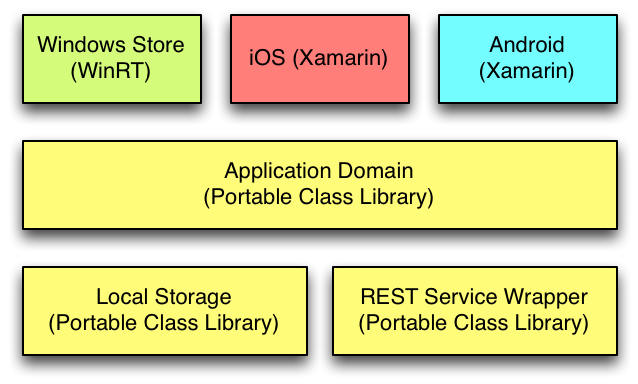

In my last post I looked at using view models, commands and behaviors in the user interface layer of applicatons to get a clean separation of concerns and make it easier to achieve a high level of code reuse and easy management when writing an application that targets multiple platforms by getting most of our code into portable class libraries leaving just a thin platform specific layer at the top aiming for an application architecture that looks roughly like this:

However at some point in such a project you are almost certainly going to find yourself needing to use platform specific features beyond just the user interface: perhaps the storage system, the networking system or a database. Below are my two most commonly used techniques for accessing platform specific features while maintaining a high level of code reuse and keeping a good separation of concerns. In a nutshell:

It’s important to realise that there is no such thing as a portable .NET application. Portable class libraries always run within a non-portable .NET target, be that Windows Store, iOS, Android or plain old Windows .NET. The two techniques presented below take advantage of this.

To go along with this blog post there is a Visual Studio solution on GitHub here containing the worked examples I refer to below. The examples are stripped down to illustrate specific points clearly, so they aren’t necessarily representative of production code.

Dependency Injection

Perhaps the easiest way to access platform specific code from a portable class library is via dependency injection. To utilise this technique all you do is declare interfaces within your portable class libraries and provide implementations within your non-portable application targets.

To illustrate how this works I’m going to create a simple application that writes a hello world text file to the local folder of an app. The finished version of this worked example is in the DependencyInjection project on GitHub here.

First, create a new solution and add two projects to it: a Windows Store app and a Portable Class Library. In my example solution they are called DependencyInjection.WindowsStore and DependencyInjection.Domain. Set the Windows Store project to reference the domain project.

In the domain project I declare an interface IFileWriter:

```csharp
using System.Threading.Tasks;

// Declared in the portable domain project; implemented by each platform target.
public interface IFileWriter
{
    Task Write(string filename, byte[] bytes);
}
```

And a simple domain class that outputs my message using the supplied file writer:

```csharp
using System.Text;
using System.Threading.Tasks;

public class BasicDomainImplementation
{
    private readonly IFileWriter _fileWriter;

    // The platform specific writer is supplied (injected) by the caller.
    public BasicDomainImplementation(IFileWriter fileWriter)
    {
        _fileWriter = fileWriter;
    }

    public async Task WriteBytes()
    {
        await _fileWriter.Write("somebytes.txt", Encoding.UTF8.GetBytes("Hello World"));
    }
}
```

In the Windows Store target, add an implementation of the IFileWriter interface that, you’ll note, uses decidedly non-portable API calls:

```csharp
using System; // provides the extension that makes WinRT async operations awaitable
using System.Threading.Tasks;
using Windows.Storage;

internal class FileWriterImpl : IFileWriter
{
    public async Task Write(string filename, byte[] bytes)
    {
        // This CreateFileAsync overload throws if the file already exists.
        StorageFile file = await ApplicationData.Current.LocalFolder.CreateFileAsync(filename);
        await FileIO.WriteBytesAsync(file, bytes);
    }
}
```

Then add a button to the MainPage.xaml file:

```xml
<Button HorizontalAlignment="Center" Content="Write Some Bytes" Click="WriteSomeBytes"></Button>
```

And add the event handler in the code behind:

```csharp
private async void WriteSomeBytes(object sender, RoutedEventArgs e)
{
    // The platform specific implementation is chosen here, at the top of the stack.
    IFileWriter fileWriter = new FileWriterImpl();
    BasicDomainImplementation domainImplementation = new BasicDomainImplementation(fileWriter);
    await domainImplementation.WriteBytes();
}
```

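If you run the project and click the button, the file will be created and the bytes written. If you click the button a second time, you’ll get a “file exists” exception, because the CreateFileAsync overload used here fails when a file with that name already exists (passing CreationCollisionOption.ReplaceExisting would overwrite it instead).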

So what have I done here? It’s pretty simple really: I’ve defined an interface in a portable class library and supplied its implementation from a platform targeted project, which lets me keep the PCL blissfully unaware of the mechanics of writing bytes to a file in a Windows Store app.
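Because BasicDomainImplementation depends only on IFileWriter, it can also be exercised with no platform at all, which is handy for unit tests. The sketch below assumes a plain .NET console or test project and repeats the interface and domain class so that it stands alone; InMemoryFileWriter is a hypothetical test double, not part of the sample solution. It keeps "files" in a dictionary and, to mimic the Windows Store implementation, refuses to write the same name twice.

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Threading.Tasks;

// Same shape as the interface declared in the portable domain project.
public interface IFileWriter
{
    Task Write(string filename, byte[] bytes);
}

// Same shape as the portable domain class shown above.
public class BasicDomainImplementation
{
    private readonly IFileWriter _fileWriter;

    public BasicDomainImplementation(IFileWriter fileWriter)
    {
        _fileWriter = fileWriter;
    }

    public async Task WriteBytes()
    {
        await _fileWriter.Write("somebytes.txt", Encoding.UTF8.GetBytes("Hello World"));
    }
}

// Hypothetical test double: records writes in memory instead of touching any storage API.
public class InMemoryFileWriter : IFileWriter
{
    public Dictionary<string, byte[]> Files { get; } = new Dictionary<string, byte[]>();

    public Task Write(string filename, byte[] bytes)
    {
        // Mimic the Windows Store implementation, which fails if the file already exists.
        if (Files.ContainsKey(filename))
        {
            throw new InvalidOperationException("File already exists: " + filename);
        }

        Files[filename] = bytes;
        return Task.CompletedTask;
    }
}

public static class Program
{
    public static void Main()
    {
        var writer = new InMemoryFileWriter();
        var domain = new BasicDomainImplementation(writer);

        domain.WriteBytes().GetAwaiter().GetResult();

        // Prints "somebytes.txt: Hello World"
        Console.WriteLine("somebytes.txt: " + Encoding.UTF8.GetString(writer.Files["somebytes.txt"]));
    }
}
```

The domain class is untouched; only the object handed to its constructor differs from the Windows Store version.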

It’s a very simple example, but you can see how this approach can be extended to abstract away all sorts of platform specific complexity from your domain assemblies. Over time you’re likely to build up a useful set of abstractions for the platform features you use in your apps, which leads us nicely to the next approach.
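The same interface can be satisfied by any other target, including plain old Windows .NET. As a rough sketch, assuming a desktop .NET project that references the same domain library, a hypothetical DesktopFileWriter (not in the sample solution) could write into a folder supplied to its constructor using System.IO. It opens the file with FileMode.CreateNew so that, like the Windows Store version, a second write to the same name fails, here with an IOException.

```csharp
using System;
using System.IO;
using System.Text;
using System.Threading.Tasks;

// Same shape as the interface declared in the portable domain project.
public interface IFileWriter
{
    Task Write(string filename, byte[] bytes);
}

// Hypothetical desktop implementation of the portable IFileWriter abstraction.
internal class DesktopFileWriter : IFileWriter
{
    private readonly string _folder;

    public DesktopFileWriter(string folder)
    {
        _folder = folder;
    }

    public async Task Write(string filename, byte[] bytes)
    {
        string fullPath = Path.Combine(_folder, filename);

        // FileMode.CreateNew throws an IOException if the file already exists.
        using (var stream = new FileStream(fullPath, FileMode.CreateNew, FileAccess.Write,
                                           FileShare.None, 4096, useAsync: true))
        {
            await stream.WriteAsync(bytes, 0, bytes.Length);
        }
    }
}

public static class Program
{
    public static void Main()
    {
        // Use a fresh temporary folder so the demo can be run repeatedly.
        string folder = Path.Combine(Path.GetTempPath(), "pcl-demo-" + Guid.NewGuid().ToString("N"));
        Directory.CreateDirectory(folder);

        IFileWriter writer = new DesktopFileWriter(folder);
        writer.Write("somebytes.txt", Encoding.UTF8.GetBytes("Hello World")).GetAwaiter().GetResult();

        // Prints "Hello World"
        Console.WriteLine(File.ReadAllText(Path.Combine(folder, "somebytes.txt")));
    }
}
```

Nothing in the portable domain project changes; only the composition at the top of the stack decides which implementation is passed in.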

Bait and Switch PCLs

There is a “trick” you can play with portable class libraries, called bait and switch, that relies on the fact that NuGet will always prefer a platform specific assembly to a portable class library. You can use this to build NuGet packages that allow platform specific code to, seemingly, be mixed with portable code.

Additionally, some dependencies are supplied only in platform specific binary form. The most common example in the app world is probably SQLite, which is used widely across iOS and Android for structured local device storage; while it is perhaps newer to Windows developers, it’s just as useful in Store apps for the same reasons.

However, it’s supplied as a platform specific binary, which raises the question: how do you use it from a portable class library?

To illustrate how this works I’m going to use Frank Krueger’s excellent SQLite client for .NET with a Windows Store app. The finished version of this worked example is in the BaitAndSwitch project on GitHub here.

First, create a new solution and add two projects to it: a Windows Store app and a Portable Class Library. In my example solution they are called BaitAndSwitch.WindowsStore and BaitAndSwitch.Domain. As before, set the Windows Store project to reference the domain project.

Add the sqlite-net-pcl package to the portable class library using either the NuGet package manager GUI or the Package Manager Console:

```powershell
Install-Package sqlite-net-pcl
```

If you look at the references added to the project, you’ll see that it now references SQLite-net (Frank Krueger’s SQLite client) and something called SQLitePCL.raw. The latter is the “bait and switch” assembly developed by Eric Sink, whose source is on GitHub here. I’ll come back to it shortly.

I don’t want to get bogged down in SQLite while illustrating the bait and switch technique, so all I’ll do is create a basic storage model and create a database file that uses it as the schema.

In my sample project I created a POCO (plain old CLR object) as follows:

```csharp
using SQLite; // sqlite-net attributes such as [PrimaryKey]

public class Person
{
    [PrimaryKey]
    public int Id { get; set; }

    public string Name { get; set; }

    public int Age { get; set; }
}
```

And a class with a single method for creating our database:

```csharp
using System.IO;
using SQLite;

public class Storage
{
    public void Initialize(string path)
    {
        string databaseFilename = Path.Combine(path, "mydatabase.sql");

        // Opens the database file (creating it if needed) and creates
        // the Person table if it doesn't already exist.
        using (SQLiteConnection connection = new SQLiteConnection(databaseFilename))
        {
            connection.CreateTable<Person>();
        }
    }
}
```

Next, in MainPage.xaml of the Windows Store app, add a button to the main grid:

```xml
<Button Content="Initialize Storage" HorizontalAlignment="Center" Click="InitializeStorage"></Button>
```

And add the event handler in the code behind (of course, having read my last post, you’d never do this, right?!):

```csharp
private void InitializeStorage(object sender, RoutedEventArgs e)
{
    Storage storage = new Storage();

    // The platform layer supplies the app's local folder path to the portable code.
    storage.Initialize(ApplicationData.Current.LocalFolder.Path);
}
```

If you run the app at this point and click the button, you’ll get an exception with an interesting message:

Something went wrong in the build configuration. This is the bait assembly, which is for referencing by portable libraries, and should never end up part of the app. Reference the appropriate platform assembly instead.

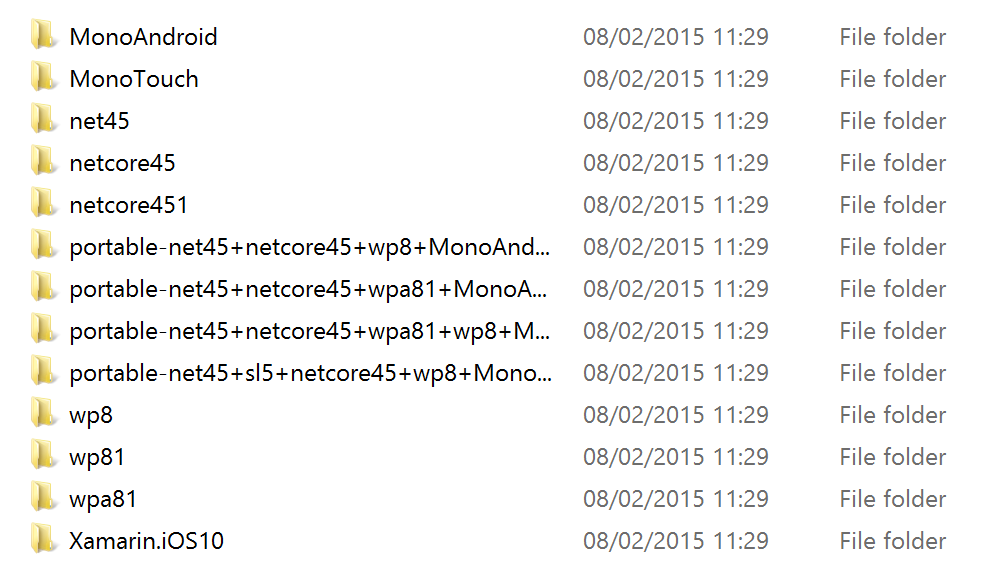

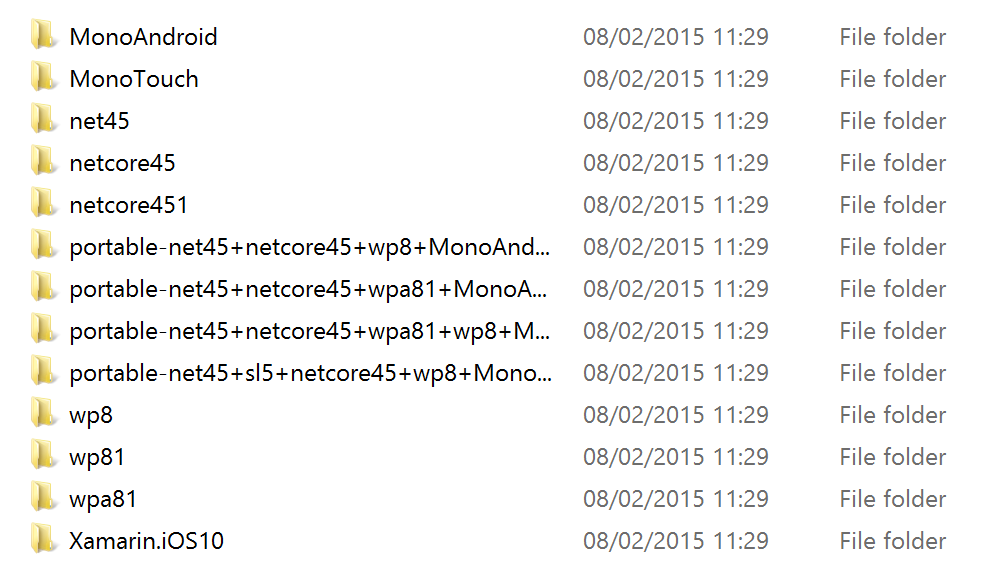

So what’s going on here, and how do we fix it? This exception is raised by the SQLitePCL.raw assembly, and to understand what’s going on we need to lift the lid on the NuGet package a little. These are the contents of the package’s lib folder:

You can see that assemblies are provided for a whole host of targets, including portable class libraries and “real” executable targets. If you were to decompile one of the portable assemblies using a tool such as dotPeek and look at the SQLite3Provider class, you’d see methods like:

```csharp
int ISQLite3Provider.sqlite3_open(string filename, out IntPtr db)
{
    throw new Exception("Something went wrong in the build configuration. This is the bait assembly, which is for referencing by portable libraries, and should never end up part of the app. Reference the appropriate platform assembly instead.");
}
```

The portable versions of the assembly are never meant to actually be run. If, on the other hand, you look at the same method in the net45 targeted assembly, you’ll see this:

```csharp
int ISQLite3Provider.sqlite3_open(string filename, out IntPtr db)
{
    return SQLite3Provider.NativeMethods.sqlite3_open(util.to_utf8(filename), out db);
}
```
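The two decompiled methods show the essence of the trick: identical signatures, different bodies. One way such builds can be produced, sketched here with hypothetical names (HypotheticalLib, Greeter, PLATFORM_IMPL) and not necessarily how SQLitePCL.raw itself is built, is to compile a single source file several times with a conditional compilation symbol. For the switch to work, the bait and platform builds need to share the same assembly name and version, so that a reference made by a portable library resolves to whichever build NuGet put into the app.

```csharp
using System;

namespace HypotheticalLib
{
    // One source file, compiled more than once: into the portable "bait" assembly
    // (no symbol defined) and into each platform assembly (PLATFORM_IMPL defined).
    public static class Greeter
    {
        public static string Greet(string name)
        {
#if PLATFORM_IMPL
            // Platform build: the real implementation, where platform specific
            // or native calls would go.
            return "Hello " + name;
#else
            // Bait build: same signature, but it should never actually run.
            throw new NotSupportedException(
                "This is the bait assembly. Reference the platform specific package from the app.");
#endif
        }
    }
}

public static class Program
{
    public static void Main()
    {
        try
        {
            Console.WriteLine(HypotheticalLib.Greeter.Greet("World"));
        }
        catch (NotSupportedException ex)
        {
            // Reached when compiled without PLATFORM_IMPL, i.e. the bait build.
            Console.WriteLine("Bait build: " + ex.Message);
        }
    }
}
```

Compiled as-is, the call throws, which is what the bait does; compiled with PLATFORM_IMPL defined (for example via the compiler's `-define:PLATFORM_IMPL` option or a DefineConstants entry in the platform project), it returns a greeting.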

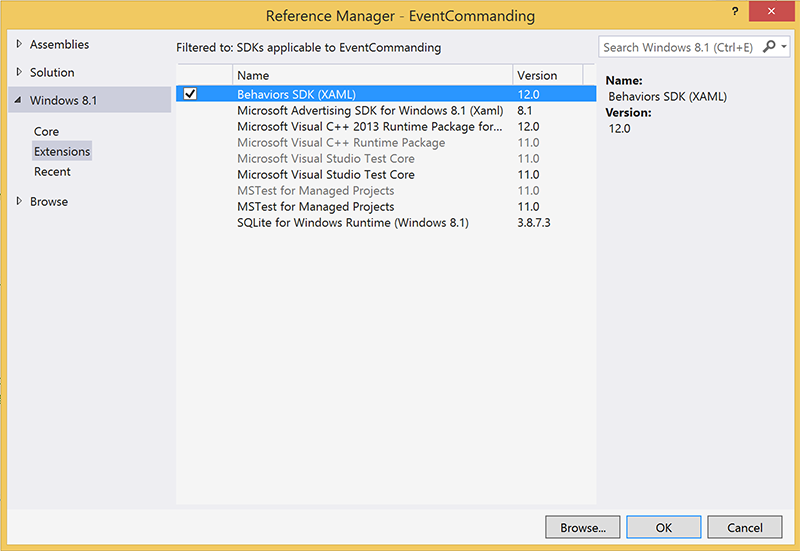

So how do we get our application to use the correct version of the assembly for our target? Well, we could fiddle around with file copying and complicated build set-ups, but remember what I said about NuGet earlier: it will always prefer a platform specific assembly to a portable class library. To take advantage of this, all we need to do is also add the SQLitePCL.raw NuGet package to our Windows Store project:

```powershell
Install-Package SQLitePCL.raw_basic
```
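To make the preference rule concrete, here is a deliberately simplified model of it. It is not NuGet's actual algorithm, which weighs framework versions and portable profile compatibility in far more detail, and LibFolderPicker and the folder names are hypothetical. Given a project target and the lib folders a package provides, an exact platform match wins, and a portable folder is chosen only as a fallback.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// A deliberately simplified model of "NuGet prefers a platform specific assembly
// to a portable one". Real NuGet compatibility rules are far more involved.
public static class LibFolderPicker
{
    private const string PortablePrefix = "portable-";

    public static string Pick(string projectTarget, IEnumerable<string> libFolders)
    {
        List<string> folders = libFolders.ToList();

        // 1. A folder built specifically for the project's own target wins outright.
        string exact = folders.FirstOrDefault(
            f => string.Equals(f, projectTarget, StringComparison.OrdinalIgnoreCase));
        if (exact != null)
        {
            return exact;
        }

        // 2. Otherwise fall back to a portable folder whose profile lists the target,
        //    e.g. "portable-net45+win8+wpa81" lists "win8". Returns null if none fits.
        return folders.FirstOrDefault(
            f => f.StartsWith(PortablePrefix, StringComparison.OrdinalIgnoreCase) &&
                 f.Substring(PortablePrefix.Length)
                  .Split('+')
                  .Contains(projectTarget, StringComparer.OrdinalIgnoreCase));
    }
}

public static class Program
{
    public static void Main()
    {
        // Hypothetical package layout: one portable "bait" folder plus two platform folders.
        string[] libFolders = { "portable-net45+win8+wpa81", "net45", "win8" };

        Console.WriteLine(LibFolderPicker.Pick("portable-net45+win8+wpa81", libFolders)); // the PCL gets the bait
        Console.WriteLine(LibFolderPicker.Pick("win8", libFolders));                      // the Store app gets "win8"
        Console.WriteLine(LibFolderPicker.Pick("wpa81", libFolders));                     // no platform folder: portable fallback
    }
}
```

In this model, adding the package to both projects gives the PCL the portable folder while the Store app, which has its own exact match, never sees the bait.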

Now, before building and running the application, pick a platform target: you can’t run sqlite3 under Any CPU because it is a native library, so change to x86 or x64 and hit run. (If you don’t, you’ll get further than before: the correct SQLitePCL.raw assembly will be used, but it won’t be able to find the native sqlite3.dll library.) If you tap the Initialize Storage button now, the database will be created. The version of the assembly making its way into our final target is the one most appropriate for it, which is no longer the “bait” PCL version but the Windows Store version.

It’s worth noting that you can use this approach to share your own code too, and it is my favoured approach for sharing library code across my projects. Once you understand how bait and switch packages are used and roughly how they work, they are fairly simple to build, and I’ll cover building a Bait and Switch PCL NuGet package in an upcoming post.
