If you want to follow along with this series of posts, the source code can be found here, the published game here, and the source for this specific post here.

If you read my blog or follow me on Twitter you’ll know I’ve really fallen in love with F# this last year and am using the SAFE stack to build out my cycling performance application. A big part of that toolkit is Fable – the F# to JavaScript transpiler that also comes with some great bindings and tools for building single page applications.

A few people have asked me how to get started with Fable, why I think F# is so well suited for this kind of work, and how to make the transition from C#, so I thought I’d build out a simple game using Fable and talk about it in a series of posts / videos.

Now before anyone gets too excited this isn’t going to be the next Halo! Instead I’m going to rework my iOS version of the classic Star Trek game (PaddTrek) into Fable – now called FableTrek!

It’s not going to be flashy but hopefully it strikes the right balance between complex enough to show real problems and solutions and simple enough to understand. I don’t have a grand up-front plan for building out this game and I’m creating each part as I go – let’s just hope it doesn’t turn out like the new Star Wars trilogy!

I’ll also add that I still class myself as a relative beginner with F# but I do manage to get stuff done.

With all that out of the way – let’s take a look at the first code drop.

Exploring the code

To get started I used this bare bones starting point for a simple Fable app with webpack and debug support for Visual Studio Code (hat tip to Isaac Abraham who created this for me to help me out a little while back).

I’ve then added a few things to it:

- The Stylus pre-processor for CSS

- Elmish and its React bindings

- Remote dev tool support for Elmish

One of the first things to wrap your head round with Fable is that it lives in both the .NET and JavaScript worlds. So in the root folder you’ll find a package.json and a webpack.config.js, and in the src folder an F# project file – App.fsproj.

When adding dependencies you’ll often find yourself adding both a NuGet package and an npm package. I’m using the dotnet command line tools to manage the former and yarn for the latter.

The Fable compiler itself is generally invoked through webpack using the fable-loader plugin. I’ve got a fairly simple webpack file – I’ve added support for Stylus and a little work to output a production build to a deploy folder.

App.fs and Index.html – entry point

Starting up a React app in F# is not much different to doing the same with JavaScript or TypeScript. First you need an HTML file:

<!doctype html>
<html>
<head>
  <title>Fable</title>
  <meta http-equiv='Content-Type' content='text/html; charset=utf-8'>
  <meta name="viewport" content="width=device-width, initial-scale=1">
  <link rel="shortcut icon" href="fable.ico" />
</head>
<body>
  <div id="trek-app"></div>
</body>
</html>

We’re going to create the React app inside the trek-app div block. You might notice there is no <script> tag – so how does any code get invoked? I’ve set up webpack to inject the <script> tag during the build process using HtmlWebpackPlugin – this way we can handle unique script filenames that include a hash component.

The code entry point for our app is App.fs in the source folder. At the top of the file we first import some dependencies:

open Elmish
open Fable.React
open App.Types
open App.State
open Fable.Core.JsInterop

importSideEffects "./game.styl"

The interesting line here is the final one where we import game.styl. This is our Stylus CSS file. Importing it like this ensures webpack compiles it to CSS and includes it in our final bundle – to do this I’ve used the stylus-loader package for webpack.

If we skip to the end of the file we can see a block of code that starts up a Fable Elmish based React app:

Program.mkProgram init update root
|> Program.toNavigable (parseHash Router.pageParser) urlUpdate
#if DEBUG
|> Program.withConsoleTrace
|> Program.withDebugger
#endif
|> Program.withReactBatched "trek-app"
|> Program.run

We’re passing in a whole load of stuff to this startup block – init, update, root, pageParser and urlUpdate are the basic building blocks of our Elmish app. And so I guess it’s time I answered the question: what is Elmish?

Elmish…

… is a set of abstractions that allow us to implement the Model View Update pattern in F#, based on Elm. Essentially it’s a pattern where a user interface (a view) is created from immutable state, and from which messages are passed back to our program to update the state (via a transformation – it’s immutable) and regenerate the view. To make this work there are a number of concepts which I’ve started to flesh out in our game.

Thomas Bandt has written a blog post built around a simple counter example that introduces the same concepts and is worth reading before continuing. I’m going to go through the same concepts using the game we’re creating, which is a little more complex as we have more data to model and I’m subdividing the system into smaller sub-applications.

Model

The model contains our application state and the best way to express this in F# is using a record. You can see our application’s top-level model in our App.Types.fs file:

type Model =
    {
        CurrentPage: Router.Page
        StartScreen: Interface.StartScreen.Types.Model option
    }
    static member Empty =
        { CurrentPage = StartScreenPage
          StartScreen = Some Interface.StartScreen.Types.Model.Empty
        }

Our root model keeps track of the current page we’re looking at (based on a URL – we’ll look at the router later) and the state for our sub-applications, of which we currently only have one – the start screen. These are optional pieces of state that we create as required as the URL changes. I’ve added a static member that returns an empty model that we can use whenever we need a default model.

Because these are records the state is immutable, and we’ll look at how we manage updates to this a little later.

The StartScreen model is currently just a placeholder which we’ll flesh out in later parts.
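As a small illustration of the immutability point above, here is a minimal sketch of how a record is "changed" by copying it. The types and field names below are hypothetical stand-ins chosen for the example, not the game's real model.

```fsharp
// Hypothetical stand-ins for the game's types; the Visits field is illustrative only.
type Page =
    | StartScreenPage
    | GameScreenPage

type Model =
    { CurrentPage: Page
      Visits: int }

let original = { CurrentPage = StartScreenPage; Visits = 0 }

// Copy-and-update: this builds a new record and leaves `original` untouched.
let updated =
    { original with
        CurrentPage = GameScreenPage
        Visits = original.Visits + 1 }

printfn "%A" original.CurrentPage
printfn "%A" updated.CurrentPage
```

The `with` expression is the same construct the update functions later in this post rely on: nothing is mutated, a fresh value is returned instead.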

Messages

Messages are what we use to glue our system together. They’re typically sent through a dispatcher when the user interacts with our views, and are handled by update functions that we’ll look at in a moment. Each of our sub-applications will have its own set of messages only visible within its own scope – this makes for a great way to isolate the moving parts of a system and is particularly useful in large systems like my cycling analytics website. It means I can change one sub-application without worrying about breaking others.

In our game you can find the messages for the start screen sub-application in Interface/StartScreen/Types.fs:

type StartScreenMsg =
    | NewGame

When we flesh out our start screen this message will be dispatched when the user clicks a new game button.

Discriminated unions are almost tailor made for messages. If you come from a C# background you might think they look like an enumerated type but they are much more! For one thing they can be associated with payloads and we already have an example of this – we use a top level set of messages that themselves encapsulate our sub-application messages to enable our program to route messages to the correct sub-application. You can see an example of this in App.Types.fs:

type Msg =
    | GameScreenDispatcherMsg
    | StartScreenDispatcherMsg of Interface.StartScreen.Types.StartScreenMsg
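To make the payload idea a little more concrete, here is a minimal sketch of wrapping and unwrapping nested messages. The ShowHelp case and the describe function are hypothetical and only there for illustration; the game itself only has NewGame so far.

```fsharp
// Sub-application messages. ShowHelp is a hypothetical case carrying a payload.
type StartScreenMsg =
    | NewGame
    | ShowHelp of topic: string

// Top-level messages wrap the sub-application messages as a payload.
type Msg =
    | GameScreenDispatcherMsg
    | StartScreenDispatcherMsg of StartScreenMsg

// Unwrap the nested payloads with pattern matching (hypothetical helper).
let describe msg =
    match msg with
    | StartScreenDispatcherMsg NewGame -> "start screen: new game"
    | StartScreenDispatcherMsg (ShowHelp topic) -> sprintf "start screen: help on %s" topic
    | GameScreenDispatcherMsg -> "game screen"

printfn "%s" (describe (StartScreenDispatcherMsg (ShowHelp "phasers")))
```

Because the compiler knows every case of both unions, it will warn if a match like this forgets one of them, which is something a C# enum cannot offer.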

Updates

Update functions are how we respond to messages and update our state; however, they never modify state in place. Instead, given the current state and a message, they return new state and a command (often another message).

We don’t yet have a simple example of this but we do have an example of an update function coordinating between our sub-applications which you can find in App.State.fs:

let update msg model =
    match (msg, model) with
    | (StartScreenDispatcherMsg subMsg, { StartScreen = Some extractedModel }) ->
        let (subModel, subCmd) = Interface.StartScreen.State.update subMsg extractedModel
        { model with StartScreen = Some subModel}, Cmd.map StartScreenDispatcherMsg subCmd
    | _ ->
        console.error("Missing match in App.State")
        model, Cmd.none

If you’re not familiar with F# you might currently be asking what is this crazy wizardry. I’ll try and break it down! F# has great support for pattern matching – we begin a match set using the match with construct. In our case we’re saying match the msg and model together (what we’re actually doing is creating a tuple out of the message and the model).

Each pattern starts with the pipe | character and the patterns are evaluated in order. In our case we have two possible matches.

In our first pattern we look for a StartScreenDispatcherMsg message and a model whose StartScreen field is set to Some. While matching we can also extract values – in our case we’re extracting the sub-message for the start screen and the model we have assigned to StartScreen. If we find these things then we pass them on to our sub-application’s updater, which also returns a model and a command. Finally we return our model with the new sub-model returned by the child updater, along with whatever command it returned, mapped back into a top-level message.

Our second match (the one that reads | _) is our fall-through match. This will match anything and simply returns the unchanged model with no command (Cmd.none), while popping an error into our browser’s console. I’ve got no implementation at all yet for the GameScreenDispatcherMsg.
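Since the game doesn't have a simple update function yet, here is a minimal sketch of one that uses the same tuple matching and fall-through. Everything in it is hypothetical: the model, the messages, and in particular the Cmd type, which I've reduced to a plain list of follow-up messages so the sketch runs without the Elmish package. Elmish's real Cmd type is richer than this.

```fsharp
// Stand-in for Elmish's Cmd: here a command is just a list of follow-up messages.
// This is an assumption made so the sketch runs without any packages.
type Cmd<'msg> = 'msg list

// Hypothetical model and messages, not the game's real ones.
type Model = { Started: bool; Score: int }

type Msg =
    | NewGame
    | AddPoints of int

// Given a message and the current state, return new state plus a command.
let update msg model : Model * Cmd<Msg> =
    match msg, model with
    | NewGame, _ -> { Started = true; Score = 0 }, []
    | AddPoints points, { Started = true } ->
        { model with Score = model.Score + points }, []
    | _ ->
        // Fall-through: any other combination leaves the state unchanged.
        model, []

let initial = { Started = false; Score = 0 }
let afterIgnored, _ = update (AddPoints 5) initial   // ignored: game not started
let afterStart, _ = update NewGame initial
let afterPoints, _ = update (AddPoints 5) afterStart

printfn "%d %d" afterIgnored.Score afterPoints.Score
```

Matching on the message and the model together is what lets the second pattern apply only once a game has started; everything else drops through to the wildcard.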

Routing

I briefly mentioned routing earlier when we looked at models – routing being the process of displaying the correct content within a SPA for a given URL. Elmish comes with some support for routing, which I’ve used to build the router here; you can find it in Router.fs. Firstly we use a discriminated union to define our routes:

type Page =
    | StartScreenPage
    | GameScreenPage

If we had sub-routes we could use the payload of a discriminated union to nest them but we don’t (at least not yet) so for now that’s it. Next we need a way to turn our union cases into URL paths:

let private toHash page =
    match page with
    | StartScreenPage -> "#/"
    | GameScreenPage -> "#/game"

Here I’m using hash-based paths but you can also use full paths. Next we need a parser – a function that can extract values from routes and return a correctly structured Page discriminated union. We don’t have any parameters for the routes yet so this is quite simple:

let pageParser =
    oneOf [
        map GameScreenPage (s "game")
        map StartScreenPage top
    ]
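Going back to the remark about sub-routes: here is a minimal sketch of how nesting them in a union payload might look. The GamePage type, its cases and the URL paths are all hypothetical, as the game has no sub-routes yet, and the sketch covers only the page-to-hash direction, not the parser.

```fsharp
// Hypothetical sub-pages of the game screen; none of these exist in the game yet.
type GamePage =
    | ShortRangeScanner
    | LongRangeScanner

// The game screen route now carries its sub-route as a payload.
type Page =
    | StartScreenPage
    | GameScreenPage of GamePage

// Turn a page, including any nested sub-page, into a hash path (paths are made up).
let toHash page =
    match page with
    | StartScreenPage -> "#/"
    | GameScreenPage ShortRangeScanner -> "#/game/short-range"
    | GameScreenPage LongRangeScanner -> "#/game/long-range"

printfn "%s" (toHash (GameScreenPage LongRangeScanner))
```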

Finally when a URL changes we need a way of updating the current page and state in our model. In App.State.fs we have a urlUpdate function:

let urlUpdate (result: Option<Router.Page>) model =
    match result with
    | None ->
        console.error("Error parsing url: " + window.location.href)
        model, Router.modifyUrl model.CurrentPage
    | Some page ->
        let model = { model with CurrentPage = page}
        match page with
        | StartScreenPage ->
            let (subModel, subCmd) = Interface.StartScreen.State.init ()
            { model with StartScreen = Some subModel }, Cmd.map StartScreenDispatcherMsg subCmd
        | _ -> model, Cmd.none

This is very similar in form to a regular update function except that, rather than taking a message, it takes the result of parsing the URL: an optional Page discriminated union. Like the regular update it returns an updated model and a command.

Views

Ok. With all that plumbing in place we need a way of transforming our state into user interface and we do that with views. Our root view can be found in App.fs:

let root model dispatch =
    div [Class "container"] [
        match model with
        | { CurrentPage = Router.Page.StartScreenPage ; StartScreen = Some extractedModel } ->
            Interface.StartScreen.View.root model dispatch
        | _ -> div [] [str "not found"]
    ]

Like much of our code so far this is somewhat infrastructural – it looks at our model, determines the current sub-application based on the route, and delegates rendering to that sub-application’s view (which you can find in Interface/StartScreen/View.fs):

let root model dispatch =
    div [Class "startScreen"] [
        div [Class "title"] [str "< work in progress >"]
    ]

If you come from a typical React background you’re probably used to using JSX – a DSL that is extremely similar to HTML. In F# we use a DSL that is, well, F#. It’s still very similar in use but, being F#, it’s type-safe, and you don’t have those slightly awkward breaks between JSX and JavaScript. We’ll see later on how easy it is to use with FP techniques to create complex interfaces cleanly.

Styling with Stylus

I’m building the CSS by hand for this project and to help me do that I’m using a preprocessor called Stylus. I like it because it removes a lot of the noise and clutter from a CSS file. You can find the fairly limited amount of CSS in our game.styl file.

For the time being I’m referring to class names by strings but I may do something about this as we progress 🙂

Putting it all together

If we wind back to where we started and look at the block of code at the bottom of App.fs:

Program.mkProgram init update root
|> Program.toNavigable (parseHash Router.pageParser) urlUpdate
#if DEBUG
|> Program.withConsoleTrace
|> Program.withDebugger
#endif
|> Program.withReactBatched "trek-app"
|> Program.run

You can see how all these bits we’ve looked at are brought together in the first two lines of this to create our Elmish program that can now parse URLs, update models, and translate models into views.

Deploying to Azure Static Web Hosting with GitHub Actions

Right back at the start of this piece I shared a link to the game running live on the web – you can find it at https://www.fabletrek.com. It’s not all that exciting at the moment:

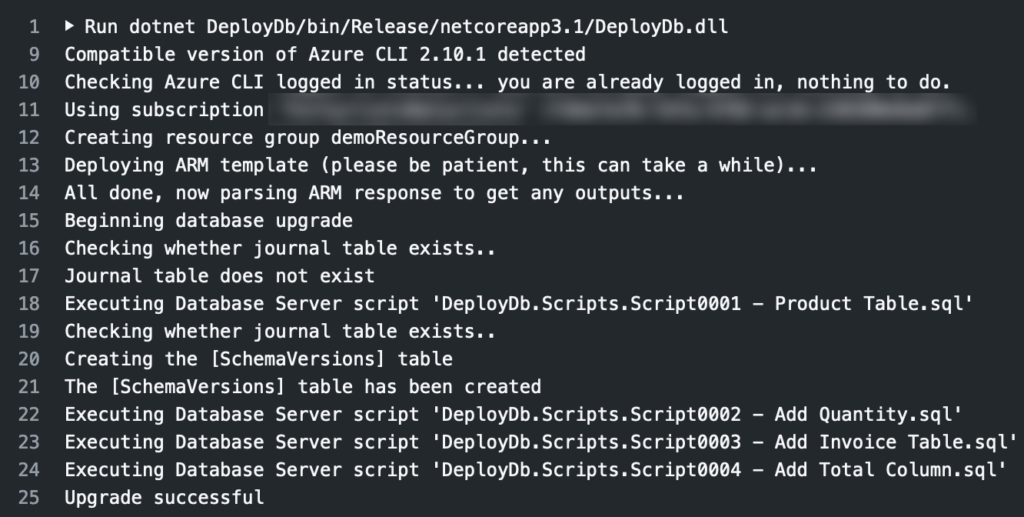

But it is running – and it’s deploying automatically, directly from the GitHub repository, using GitHub Actions and Azure Static Web Apps. This solution doesn’t come with automatic support for Fable but it’s pretty straightforward to add.

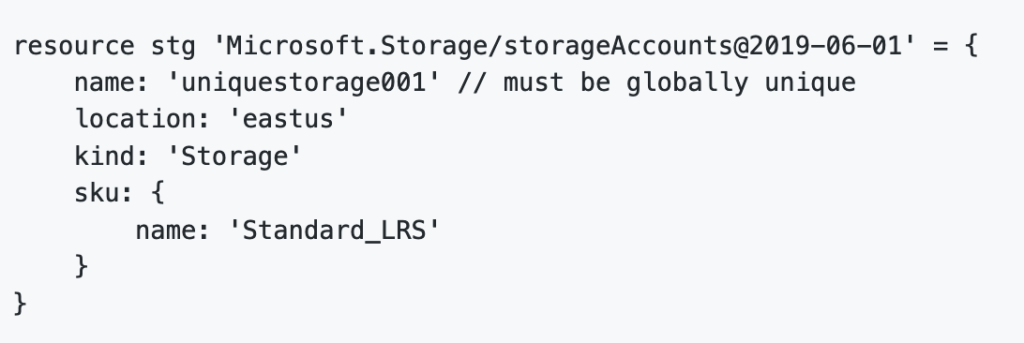

Start by creating the Azure Static Web App itself in Azure following these instructions. For the app artifact directory enter deploy but otherwise follow those instructions.

This will create a GitHub Action for you in the repository but if you run it you’ll get an error stating that the job they provide doesn’t know how to build our code. There is support for using a custom build command (and we build our solution with the command yarn webpack) but I found that using it gives an error with locating and running dotnet from within the Fable compiler webpack loader.

Instead what we need to do is move the build outside of their job so all we need to then do is pick up the contents of our deploy folder. To do that we add these steps:

- name: Setup .NET Core
  uses: actions/setup-dotnet@v1
  with:
    dotnet-version: 3.1.402
- name: Install .NET dependencies
  working-directory: "src"
  run: dotnet restore
- name: Install JS dependencies
  run: yarn install
- name: Build
  run: yarn webpack

This makes sure the correct version of .NET Core is installed, restores our NuGet and npm packages, and then finally builds the solution.

We then need to modify the provided Azure Static Web App job to prevent it from trying to build the solution again. There is no way to disable the build process but what you can do is supply it with a harmless build command – rather than build anything I simply run yarn --version:

with:
  azure_static_web_apps_api_token: ${{ secrets.AZURE_STATIC_WEB_APPS_API_TOKEN_UNIQUE_FOR_YOU }}
  repo_token: ${{ secrets.GITHUB_TOKEN }} # Used for Github integrations (i.e. PR comments)
  action: "upload"
  ###### Repository/Build Configurations - These values can be configured to match your app requirements. ######
  # For more information regarding Static Web App workflow configurations, please visit: https://aka.ms/swaworkflowconfig
  app_location: "/" # App source code path
  api_location: "api" # Api source code path - optional
  app_artifact_location: "deploy" # Built app content directory - optional
  # we need a separate build step as the fable-compiler fails when run under the context of the static site builder
  # and there is no disable build property that I am aware of so we run a harmless command
  app_build_command: "yarn --version"
  ###### End of Repository/Build Configurations ######

With those changes made, every time you commit code to your branch it will be built and deployed. You can find the complete action here. I then added a custom domain, which gives you a free SSL cert too.

Next Steps

That’s the setup part done really – if you’re new to Fable, F# and Elmish then this I think is the more complex bit. In the next stage we’ll build out a start screen and get to the point where we can start a new game.
