In my previous post I looked at how to register, log in and authenticate using the new OWIN-based ASP.NET architecture underpinning MVC 5 and Web API 2. The default website provided was configured to use a SQL database, which is why we needed to configure a SQL Database within Azure as we deployed our website.

There’s a fair chance, if you’re experienced with Azure, that you’re wondering if you can swap that out and use Table Storage. Fortunately, one of the improvements in this latest version of ASP.NET is that storage is much better abstracted away from identity management.

There is, however, a fair bit of leg work in doing so. I’m going to touch first on how you go about this, then look at the NuGet package I’ve put online (source code on GitHub) that means you don’t have to do this leg work, and finally we’ll look at the changes you would need to make in the Web API 2 sample project we introduced in the previous post. I’m going to make reference to that code, so you may want to grab it from GitHub.

1) Implementing a New Identity Store

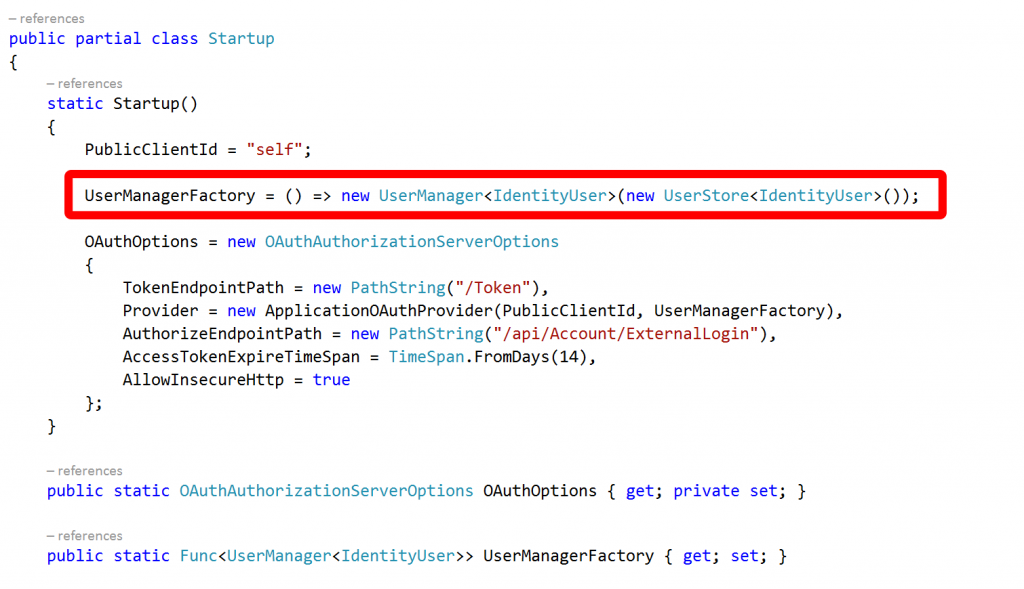

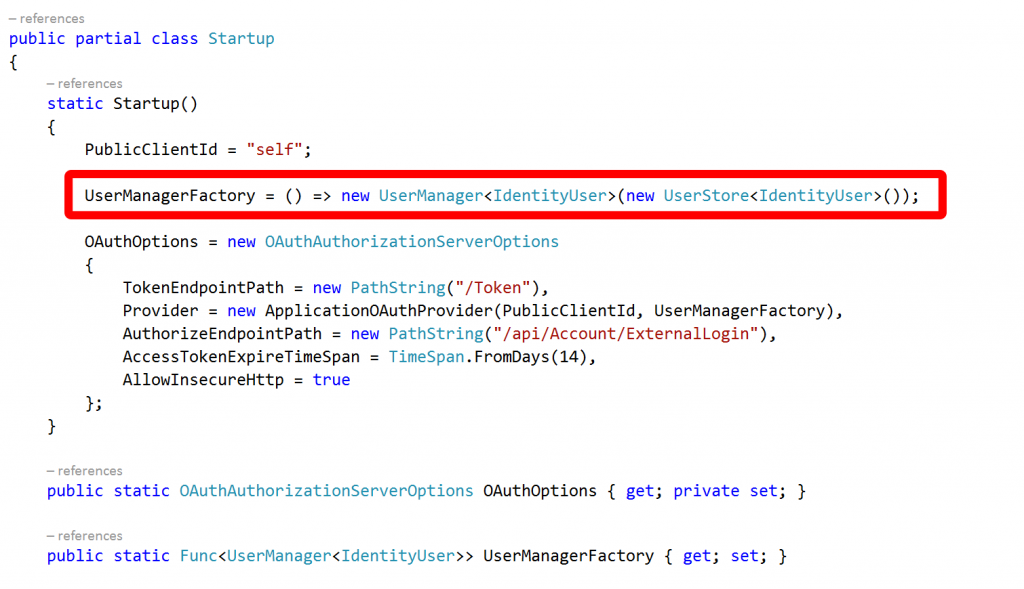

The clue to how to go about this can, again, be found at the top of the Startup.Auth.cs file in the App_Start folder, where a factory function is assigned that returns a user manager wrapped around a user store.
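For reference, the relevant lines of the stock Web API 2 template look roughly like this. It's a trimmed sketch rather than the complete file, so expect your generated Startup.Auth.cs to have more around it (the OAuth options in particular):

```csharp
using System;
using Microsoft.AspNet.Identity;
using Microsoft.AspNet.Identity.EntityFramework;

public partial class Startup
{
    static Startup()
    {
        // The factory hands back a UserManager that works with IdentityUser
        // and persists through the Entity Framework backed UserStore.
        UserManagerFactory = () =>
            new UserManager<IdentityUser>(new UserStore<IdentityUser>());
    }

    // Both the user type and (indirectly) the store are fixed by this declaration.
    public static Func<UserManager<IdentityUser>> UserManagerFactory { get; set; }
}
```

Note that both IdentityUser and UserStore come from the Entity Framework package, not from the core identity assembly.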

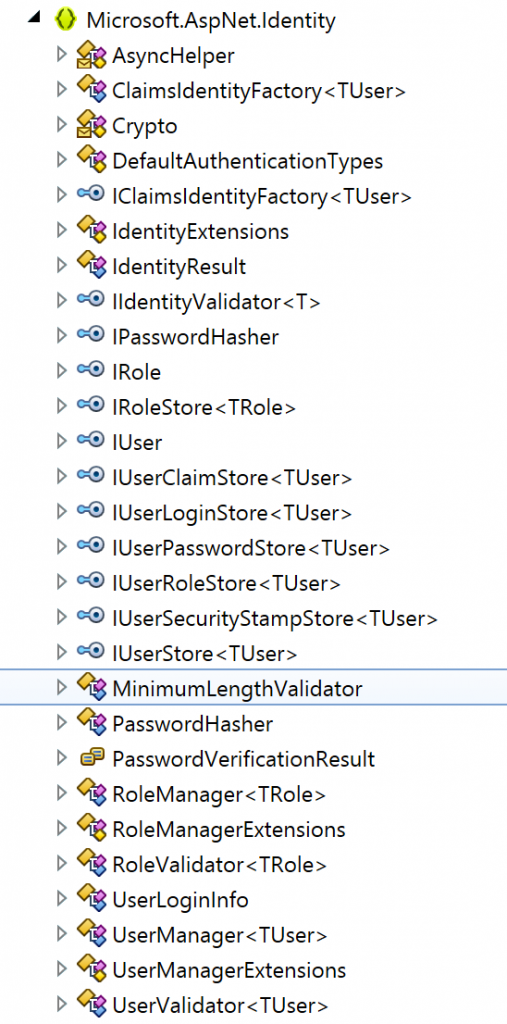

The UserManager is a core class of the identity framework (Microsoft.AspNet.Identity.Core). It works with classes that describe users through the IUser interface (another core type) and persists them through implementations of the IUserStore interface.

The core identity framework provides no storage implementation and the UserStore class that is being instantiated here is provided by Microsoft.AspNet.Identity.EntityFramework as is the IdentityUser class.

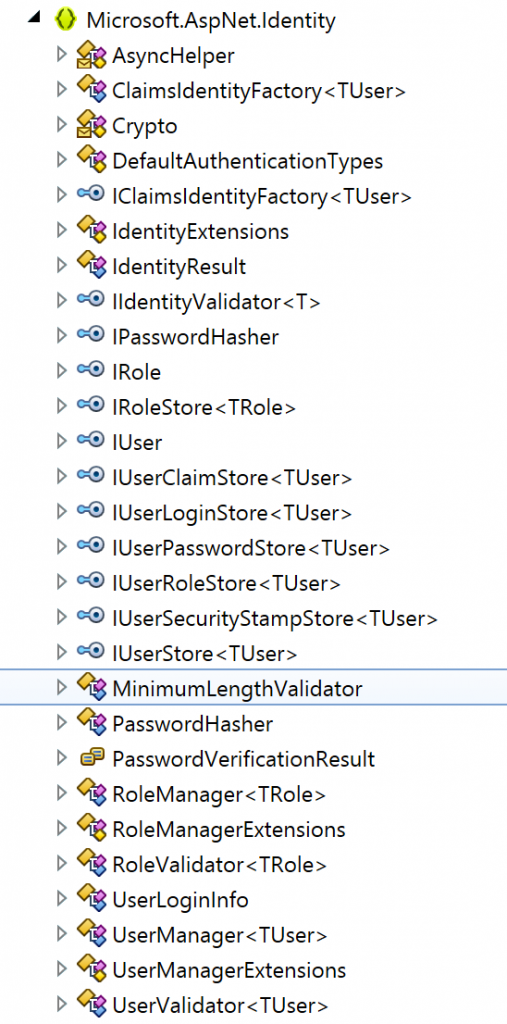

In fact if we look at the Microsoft.AspNet.Identity.Core assembly we can see it’s really very focussed on managing abstract interfaces:

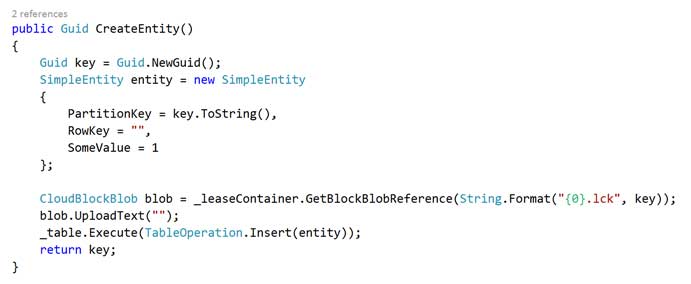

It’s not difficult to see where we’re going at this point – to implement our own store we need to provide implementations for a number of interfaces. If we want to replicate full local identity management in the same way as the Entity Framework supplied implementation then realistically we need to implement most of the interfaces shown above – IRole, IRoleStore, IUser, IUserClaimStore, IUserLoginStore, IUserPasswordStore, IUserRoleStore, IUserSecurityStampStore and IUserStore.

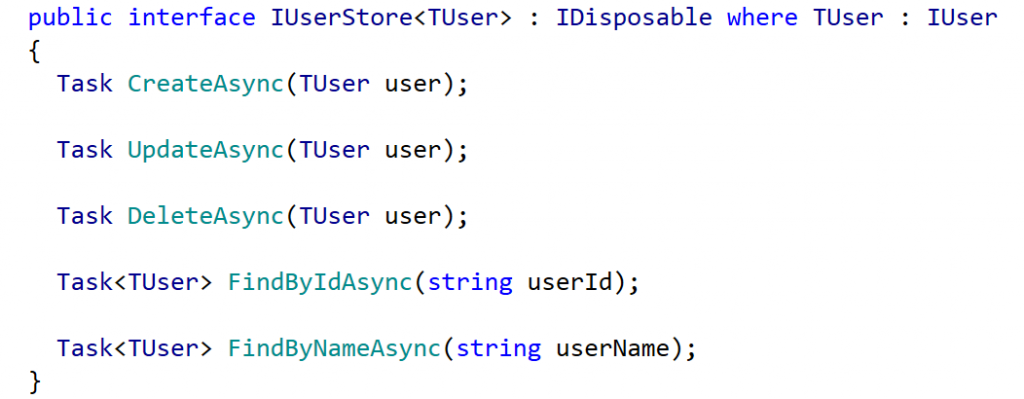

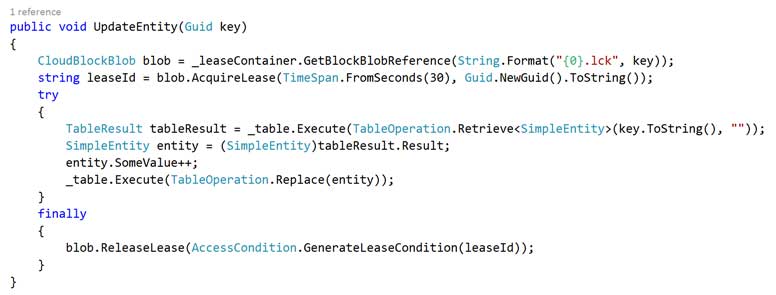

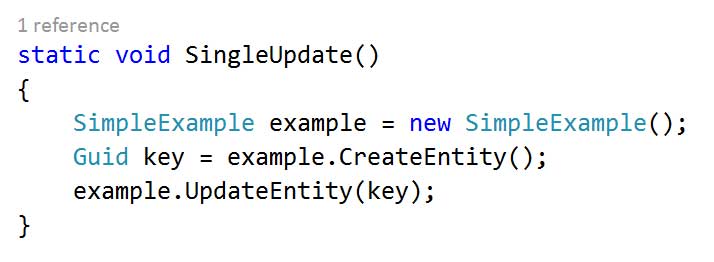

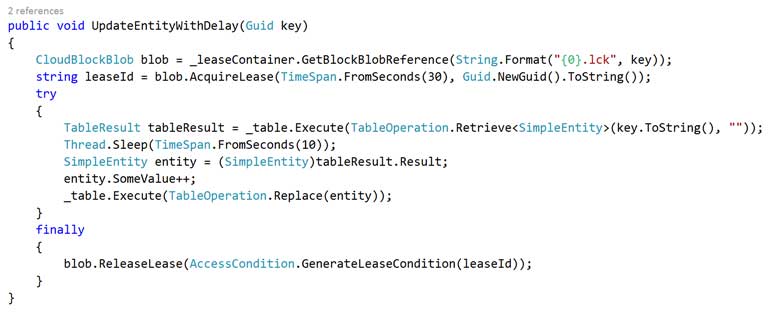

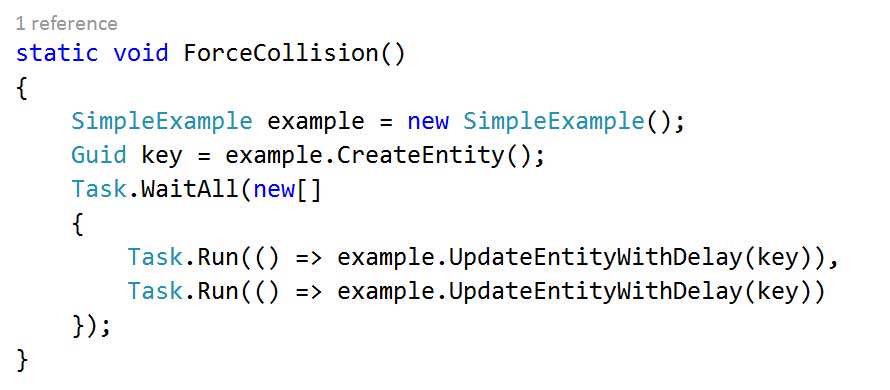

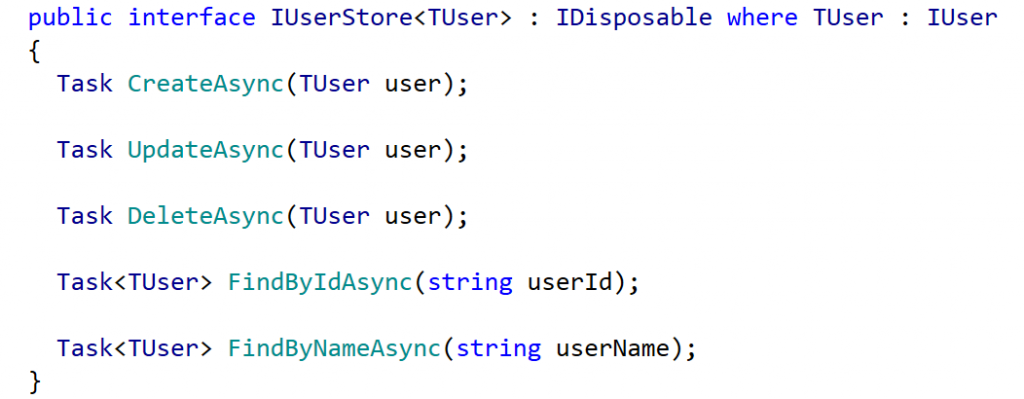

That’s not as daunting as it sounds, as most of the interfaces are quite simple. Take IUserStore, for example:
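As a sketch of how small the surface is, these are the two core declarations as I understand them in version 1.0 of Microsoft.AspNet.Identity.Core. They're restated here as standalone code, so treat them as a paraphrase and check them against the assembly version you're actually referencing:

```csharp
using System;
using System.Threading.Tasks;

// Restated for illustration only; the real types live in Microsoft.AspNet.Identity.
public interface IUser
{
    string Id { get; }
    string UserName { get; set; }
}

// Basic asynchronous create/update/delete/find operations for a user type.
public interface IUserStore<TUser> : IDisposable where TUser : IUser
{
    Task CreateAsync(TUser user);
    Task UpdateAsync(TUser user);
    Task DeleteAsync(TUser user);
    Task<TUser> FindByIdAsync(string userId);
    Task<TUser> FindByNameAsync(string userName);
}
```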

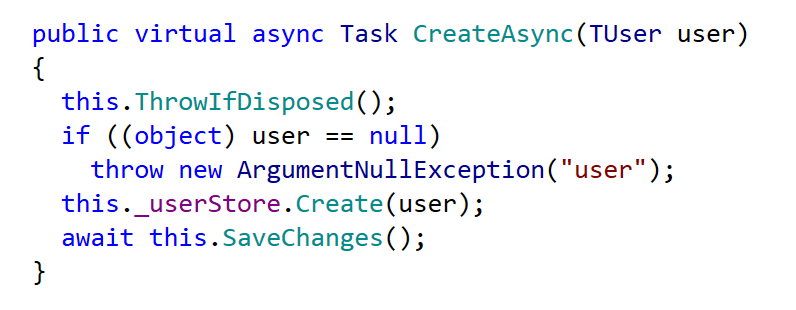

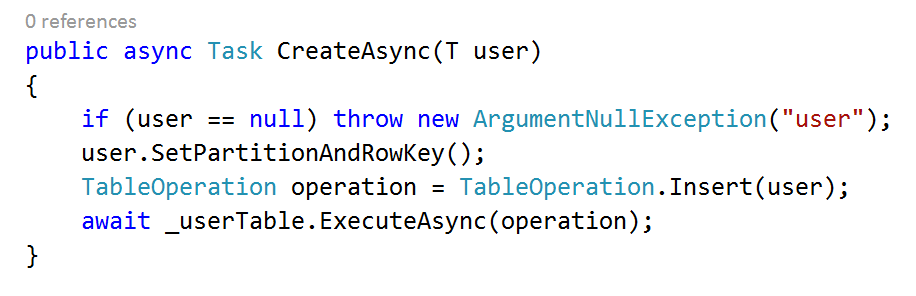

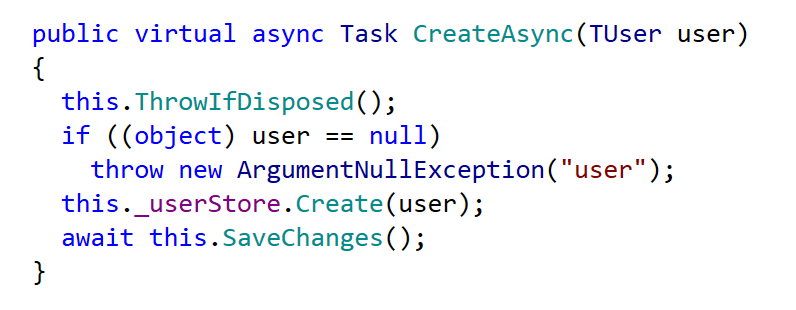

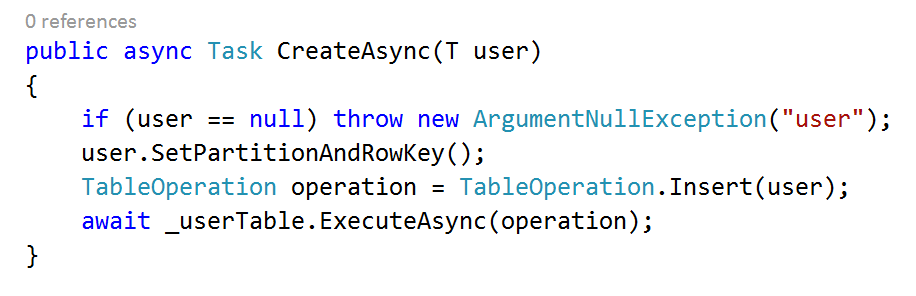

The remaining interfaces also follow this asynchronous CRUD pattern and are fairly simple to implement. Here’s the Entity Framework implementation for CreateAsync:
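Stripped to its essentials, it amounts to something like the sketch below. This is not the Microsoft source verbatim: the class name EfUserStoreSketch and the way the DbContext is passed in are my own assumptions for illustration, and only CreateAsync is shown.

```csharp
using System;
using System.Data.Entity;
using System.Threading.Tasks;
using Microsoft.AspNet.Identity;

// Hypothetical, cut-down store; not the real UserStore<TUser> class.
public class EfUserStoreSketch<TUser> where TUser : class, IUser
{
    private readonly DbContext _context;

    public EfUserStoreSketch(DbContext context)
    {
        if (context == null) throw new ArgumentNullException("context");
        _context = context;
    }

    public async Task CreateAsync(TUser user)
    {
        if (user == null) throw new ArgumentNullException("user");

        // Track the new user, then flush the change to the database.
        _context.Set<TUser>().Add(user);
        await _context.SaveChangesAsync();
    }
}
```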

And by way of contrast here’s a Table Storage implementation:
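Again, this is a minimal sketch rather than the exact code from the package. UserEntitySketch and TableUserStoreSketch are hypothetical names, and I'm assuming a version of the Azure storage client library (Microsoft.WindowsAzure.Storage) that offers the Task-based ExecuteAsync method on CloudTable:

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.WindowsAzure.Storage.Table;

// Hypothetical user entity; PartitionKey and RowKey are inherited from
// TableEntity and must be set by the caller before the insert.
public class UserEntitySketch : TableEntity
{
    public string UserName { get; set; }
}

// Hypothetical, cut-down store; only CreateAsync is shown.
public class TableUserStoreSketch
{
    private readonly CloudTable _userTable;

    public TableUserStoreSketch(CloudTable userTable)
    {
        if (userTable == null) throw new ArgumentNullException("userTable");
        _userTable = userTable;
    }

    public async Task CreateAsync(UserEntitySketch user)
    {
        if (user == null) throw new ArgumentNullException("user");

        // Insert fails if an entity with the same keys already exists.
        TableOperation insert = TableOperation.Insert(user);
        await _userTable.ExecuteAsync(insert);
    }
}
```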

Pretty much the same, I think you’ll agree. However, it’s still a lot of boilerplate code to write, so I’ve wrapped it into a NuGet package called AccidentalFish.AspNet.Identity.Azure, which can also be found on GitHub.

2) Using AccidentalFish.AspNet.Identity.Azure

To get started, either download the package from NuGet using the package manager in Visual Studio or download the source from GitHub and attach the project to your solution. The Package Manager Console command to install the package is:

Install-Package accidentalfish.aspnet.identity.azure

You can use the package in commercial or open source projects as it’s published under the permissive MIT license though as ever I do appreciate an email or GitHub star if it’s useful to you – yes I’m that vain (and I like to hear about my code being used).

Once you’ve got the package installed you’ll find there is still a little work to do to integrate it into your Web API 2 project. Although the Microsoft.AspNet.Identity framework is nice and clean, it seems that whoever put together the Web API 2 project template didn’t think it made sense to keep a nice level of abstraction and has tied it tightly to the Entity Framework implementation in a few places.

However it’s not too onerous (just a couple of steps) and I’ve built the package with replacement in mind. To help I’ve included the Web API 2 project from my previous post and commented out the old Entity Framework code that bleeds into the MVC host site. I’ll walk through these changes below.

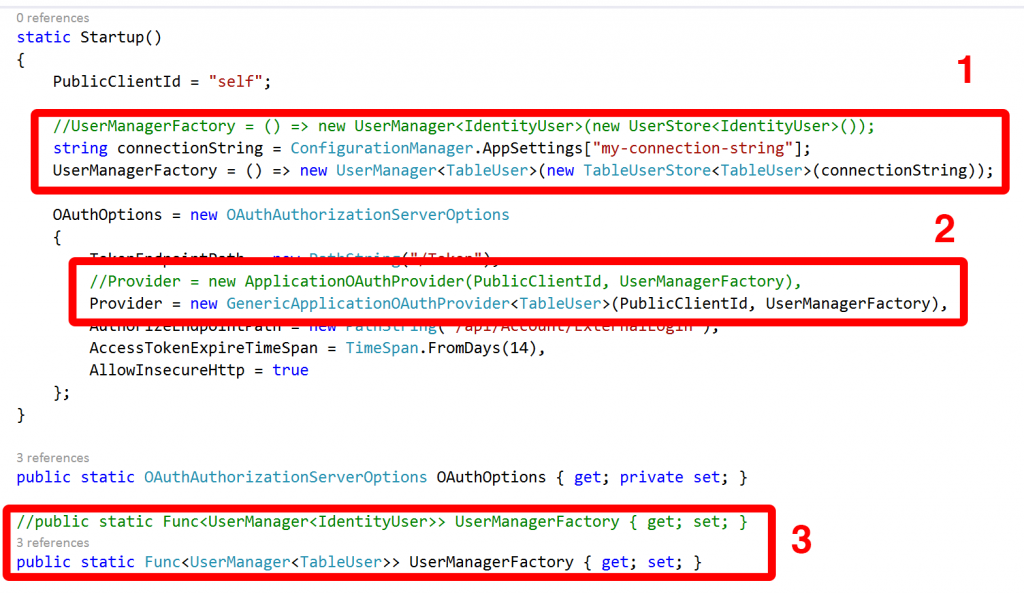

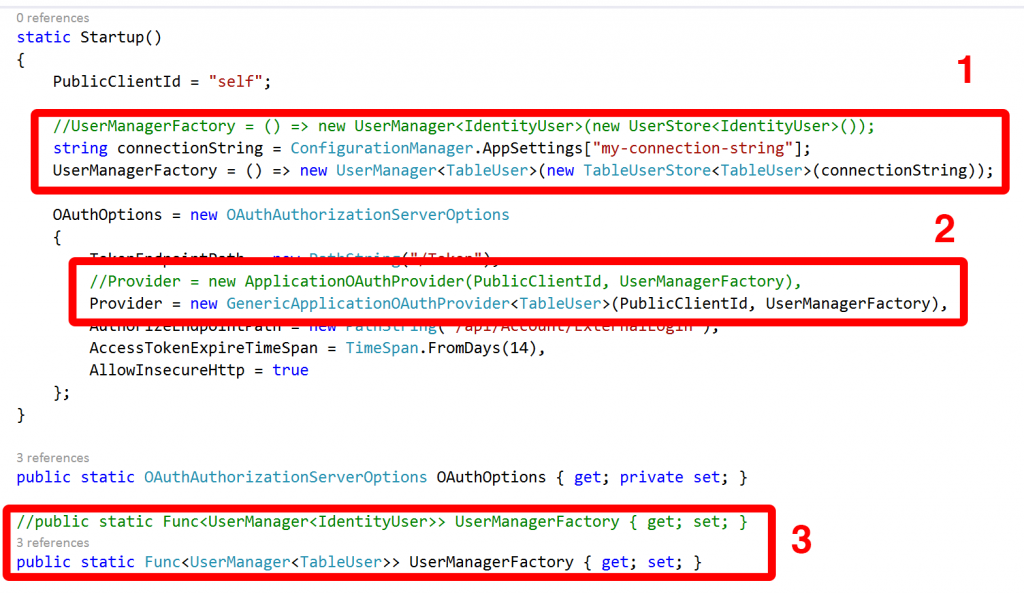

Firstly we need to visit the Startup.Auth.cs file and make three changes at the start:

1) Update the factory assignment to return a UserManager that manipulates users of type TableUser and uses a data store of type TableUserStore. Pass it your Azure connection string. The constructor is overloaded with parameters for the table names and for whether or not to create the tables if they don’t exist (by default it will create them).

2) Replace the ApplicationOAuthProvider with the generic version of it contained within the package. This code is exactly the same but replaces the fixed IdentityUser types with a generic type parameter (you can see what I was referring to in regard to the template: it ought to have been this way out of the box).

3) Update the declaration for the factory to return a UserManager manipulating users of type TableUser.
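Put together, the three changes might look something like the sketch below. TableUser and TableUserStore are the package's types, but the exact generic parameters, the namespace and the constructor shape shown here are assumptions on my part, so check them against the package source. The connection string is the storage emulator's; substitute your own.

```csharp
using System;
using Microsoft.AspNet.Identity;
using Microsoft.Owin;
using Microsoft.Owin.Security.OAuth;
using AccidentalFish.AspNet.Identity.Azure; // assumed namespace, matching the package name

public partial class Startup
{
    static Startup()
    {
        PublicClientId = "self";

        // 1) Factory now builds a UserManager over Table Storage.
        //    Generic TableUserStore<TableUser> is an assumption.
        UserManagerFactory = () => new UserManager<TableUser>(
            new TableUserStore<TableUser>("UseDevelopmentStorage=true"));

        OAuthOptions = new OAuthAuthorizationServerOptions
        {
            TokenEndpointPath = new PathString("/Token"),
            // 2) Generic provider from the package (type parameter assumed).
            Provider = new ApplicationOAuthProvider<TableUser>(PublicClientId, UserManagerFactory)
            // The remaining template settings stay as they were.
        };
    }

    public static OAuthAuthorizationServerOptions OAuthOptions { get; private set; }

    // 3) Declaration updated to match the new user type.
    public static Func<UserManager<TableUser>> UserManagerFactory { get; set; }

    public static string PublicClientId { get; private set; }
}
```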

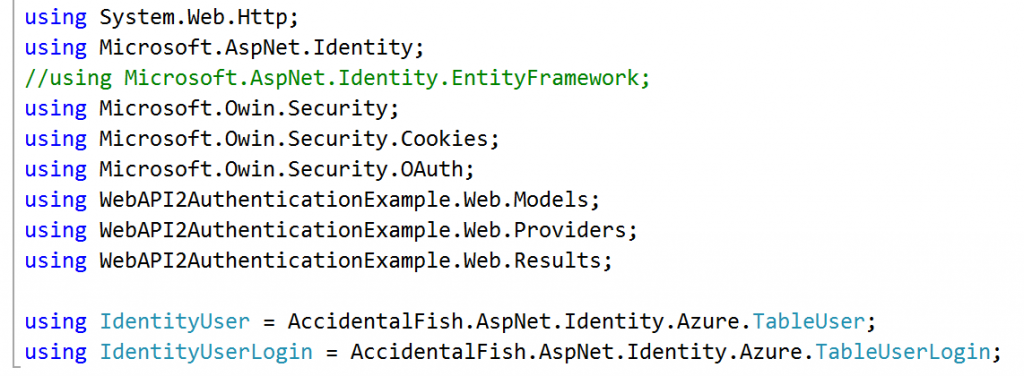

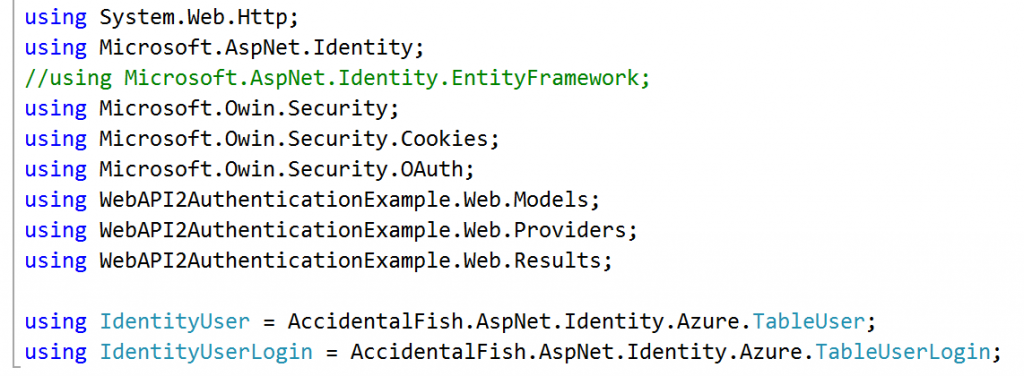

At this point we’ve done the bulk of the OAuth work, but unfortunately the MVC AccountController also has a needless hard dependency on the Entity Framework library, so we need to sort that out. To do this, either go through the class and replace the type declarations of IdentityUser and IdentityUserLogin with TableUser and TableUserLogin respectively, or “cheat” by removing the Microsoft.AspNet.Identity.EntityFramework using directive and adding a pair of aliases:
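As a sketch, the aliases at the top of AccountController.cs would be along these lines. I'm assuming here that the package's types live in a namespace matching the package name, so adjust it if yours differs:

```csharp
// using Microsoft.AspNet.Identity.EntityFramework;   // removed

// Alias the Entity Framework type names onto the Table Storage types so the
// rest of the controller compiles without further edits.
using IdentityUser = AccidentalFish.AspNet.Identity.Azure.TableUser;
using IdentityUserLogin = AccidentalFish.AspNet.Identity.Azure.TableUserLogin;
```

The aliases only apply to the file they're declared in, which is all we need here.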

That’s it, you’re done. Identity information should now be persisted in Azure Table Storage.

I’ll be doing some more work on this package in the coming weeks – I want to test it at scale and I know I need to build at least one index for one of the IUserStore calls which queries in the reverse way to which the partition and row key are set up: I’ve tried to set up the partition and row keys for the most commonly used methods.

If you find any problems or have any feedback then please let me know.
