When I originally posted this piece the library I was using and developing was at an early stage. The good news, if you’re looking to build REST APIs with Azure Functions, is that it’s now pretty mature, well documented, and carries the name Function Monkey.

A good jumping off point is this video series here:

Or the getting started guide here:

http://functionmonkey.azurefromthetrenches.com/guides/gettingStarted.html

There are also a few more posts on this blog:

https://www.azurefromthetrenches.com/category/function-monkey/

Original Post

Serverless technologies bring a lot of benefits to developers and organisations running compute activities in the cloud – in fact I’d argue if you’re considering compute for your next solution or looking to evolve an existing platform and you are not considering serverless as a core component then you’re building for the past.

Serverless might not form your whole solution, but for the right problem the technology and patterns can be transformational, shifting the focus ever further away from managing infrastructure and a platform and towards your application logic.

Azure Functions are Microsoft’s offering in this space and they can be very cost-effective: not only do they remove management burden, scale with consumption, and simplify handling events, but they also come with a generous monthly free allowance.

That being the case building a REST API on top of this model is a compelling proposition.

However… it’s a bit awkward. Azure Functions are more abstract than something like ASP.NET Core, as they have to deal with all manner of events in addition to HTTP. For example, the out-of-the-box example for a function that responds to an HTTP request looks like this:

    public static class Function1
    {
        [FunctionName("Function1")]
        public static IActionResult Run([HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)]HttpRequest req, TraceWriter log)
        {
            log.Info("C# HTTP trigger function processed a request.");

            string name = req.Query["name"];

            string requestBody = new StreamReader(req.Body).ReadToEnd();
            dynamic data = JsonConvert.DeserializeObject(requestBody);
            name = name ?? data?.name;

            return name != null
                ? (ActionResult)new OkObjectResult($"Hello, {name}")
                : new BadRequestObjectResult("Please pass a name on the query string or in the request body");
        }
    }

It’s missing all the niceties that come with a more dedicated HTTP framework, there’s no provision for cross-cutting concerns, and if you want a nice public route to your Function you also need to build out proxies in a proxies.json file.

I think the boilerplate and cruft that goes with a typical ASP.NET Core project is bad enough, so I wouldn’t want to build out 20 or 30 functions like the one above to support a REST API. It’s not that there’s anything wrong with what these teams have done (ASP.NET Core and Azure Functions), but they have to ship something that allows for as many user scenarios as possible, whereas simplifying something is generally about making decisions and assumptions on behalf of users and removing things. That I’ve been able to build both my REST framework and now this on top of the respective platforms is testament to a job well done!

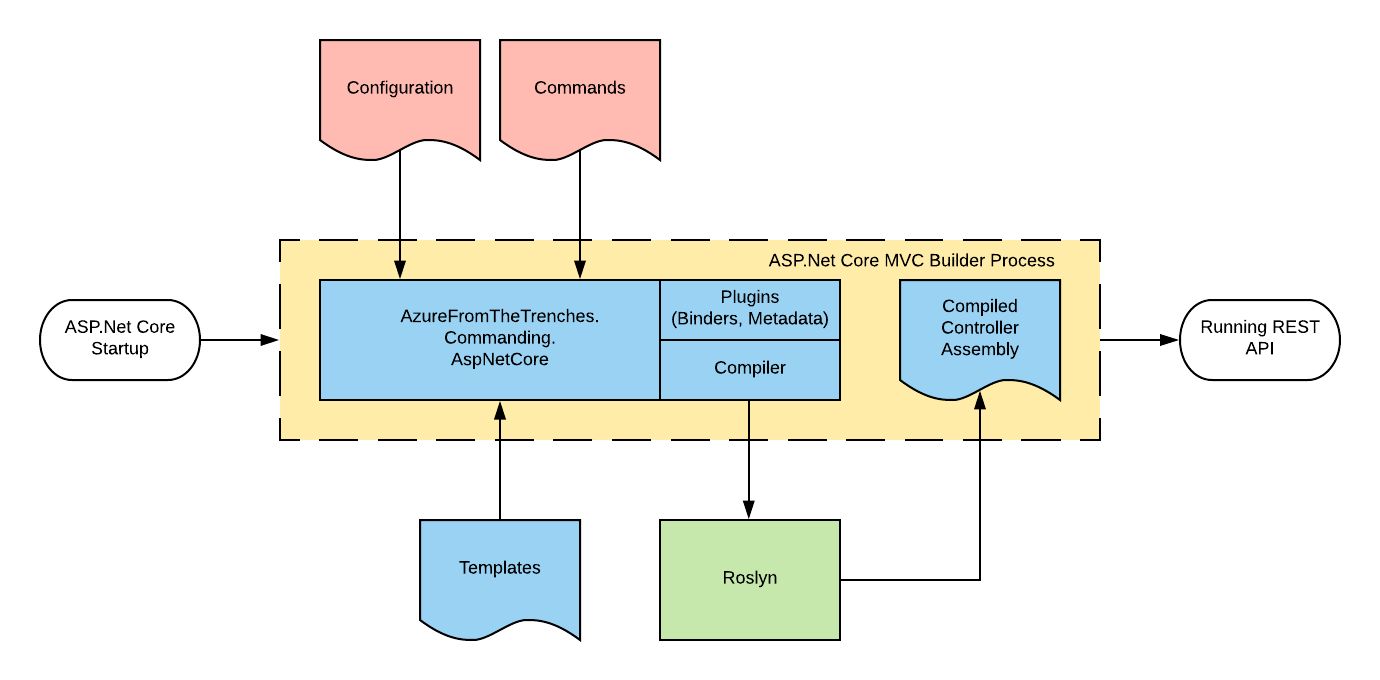

In any case to help with this I’ve built out a framework that takes advantage of Roslyn and my commanding mediator framework to enable REST APIs to be created using Azure Functions in a more elegant manner. I had some very specific technical objectives:

- A clean separation between the trigger (Function) and the code that executes application logic

- Promote testable code

- No interference with the Function runtime

- An initial focus on HTTP triggers but extensible to support other triggers

- Support for securing Functions using token-based security methods such as OpenID Connect

- Simple routing

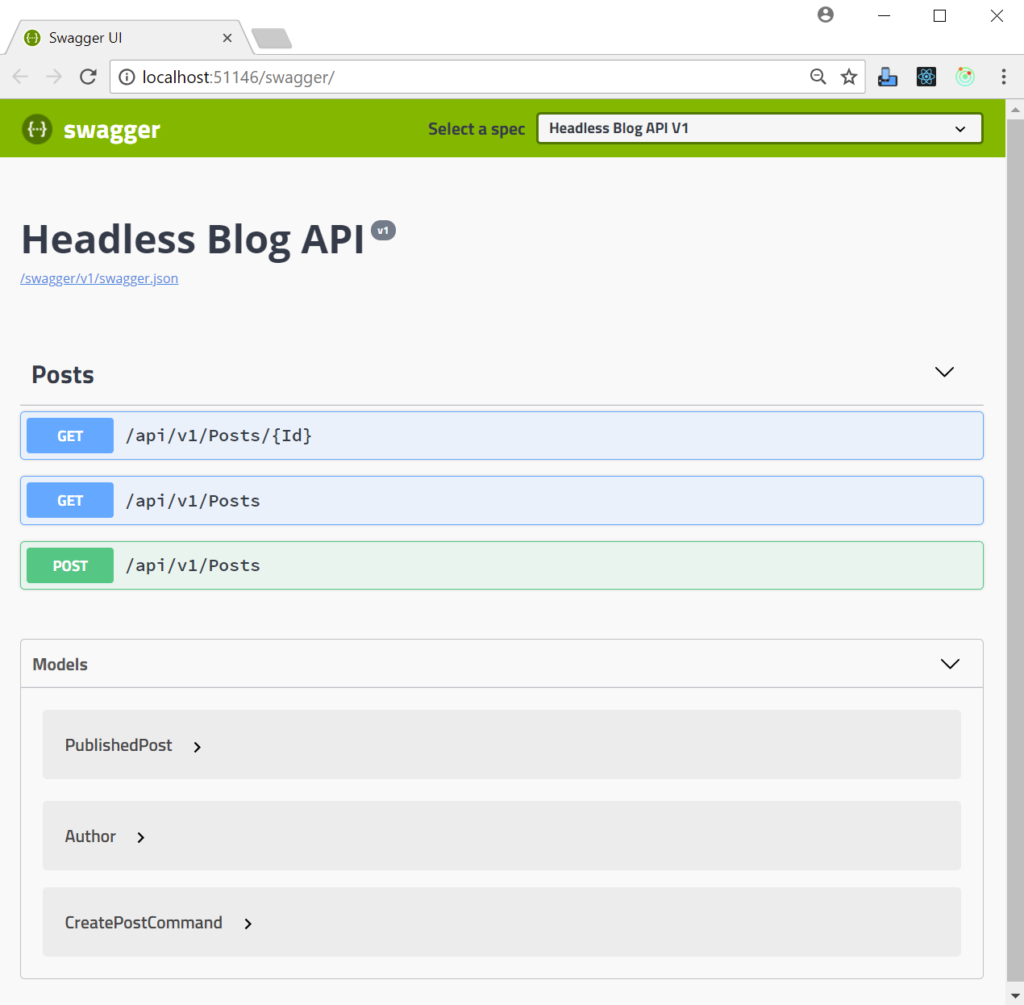

- No complicated JSON configuration files

- Open API / Swagger generation – under development

- Validation – under development

Probably the easiest way to illustrate how this works is by way of an example – so fire up Visual Studio 2017 and follow the steps below.

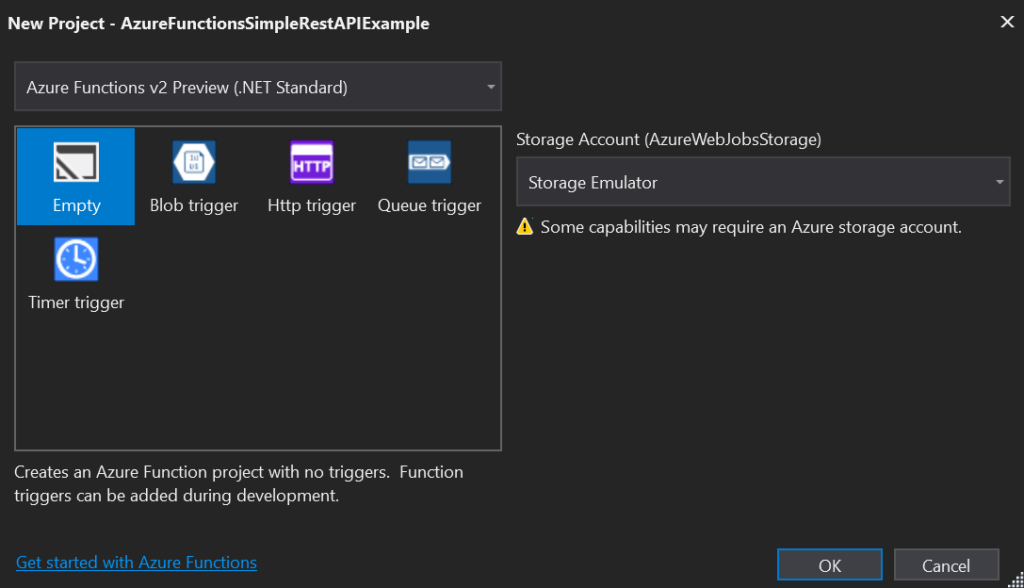

Firstly create a new Azure Function project in Visual Studio. When you’re presented with the Azure Functions new project dialog make sure you use the Azure Functions v2 Preview and create an Empty project:

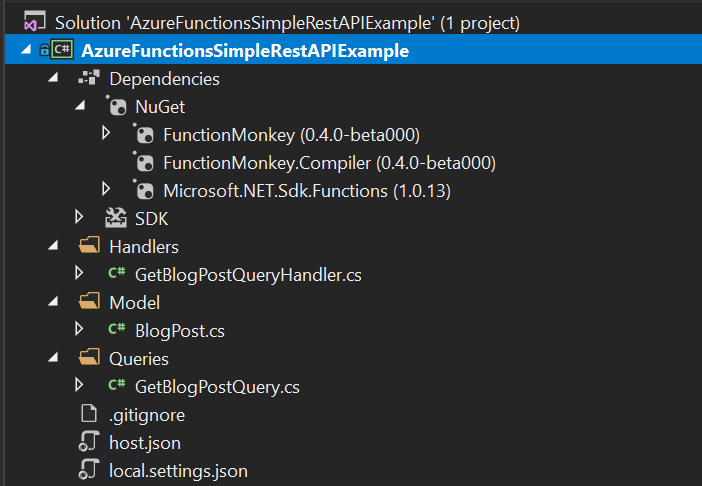

After your project is created you should see an empty Azure Functions project. The next step is to add the required NuGet packages – using the Package Manager Console run the following commands:

    Install-Package FunctionMonkey -pre
    Install-Package FunctionMonkey.Compiler -pre

The first package adds the references we need for the commanding framework and Function specific components while the second adds an MSBuild build target that will be run as part of the build process to generate an assembly containing our Functions and the corresponding JSON for them.

Next create a folder in the project called Model and into that add a class named BlogPost:

    class BlogPost
    {
        public Guid PostId { get; set; }

        public string Title { get; set; }

        public string Body { get; set; }
    }

Next create a folder in the project called Queries and into that add a class called GetBlogPostQuery:

    public class GetBlogPostQuery : ICommand<BlogPost>
    {
        public Guid PostId { get; set; }
    }

This declares a command which when invoked with a blog post ID will return a blog post.

Now we need to write some code that will actually handle the invoked command – we’ll just write something that returns a blog post with some static content but with a post ID that mirrors that supplied. Create a folder called Handlers and into that add a class called GetBlogPostQueryHandler:

    class GetBlogPostQueryHandler : ICommandHandler<GetBlogPostQuery, BlogPost>
    {
        public Task<BlogPost> ExecuteAsync(GetBlogPostQuery command, BlogPost previousResult)
        {
            return Task.FromResult(new BlogPost
            {
                Body = "Our blog posts main text",
                PostId = command.PostId,
                Title = "Post Title"
            });
        }
    }
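
Because the handler is a plain class with no reference to the Functions runtime, it can be exercised directly, which is what the "promote testable code" objective is getting at. The following is a minimal sketch rather than the framework's own test approach. To keep it self-contained it declares stand-in versions of ICommand and ICommandHandler that only mirror the shapes used in this post (an assumption; in a real project they come from the commanding package), and the HandlerCheck class is purely illustrative.

```csharp
using System;
using System.Threading.Tasks;

// Stand-in interfaces (assumption): they mirror the shapes used in this post so
// the sketch compiles on its own. In a real project they come from the
// commanding framework package.
public interface ICommand<TResult> { }

public interface ICommandHandler<TCommand, TResult> where TCommand : ICommand<TResult>
{
    Task<TResult> ExecuteAsync(TCommand command, TResult previousResult);
}

public class BlogPost
{
    public Guid PostId { get; set; }
    public string Title { get; set; }
    public string Body { get; set; }
}

public class GetBlogPostQuery : ICommand<BlogPost>
{
    public Guid PostId { get; set; }
}

public class GetBlogPostQueryHandler : ICommandHandler<GetBlogPostQuery, BlogPost>
{
    public Task<BlogPost> ExecuteAsync(GetBlogPostQuery command, BlogPost previousResult)
    {
        return Task.FromResult(new BlogPost
        {
            Body = "Our blog posts main text",
            PostId = command.PostId,
            Title = "Post Title"
        });
    }
}

// Hypothetical check: invokes the handler without any Function trigger involved.
public static class HandlerCheck
{
    public static async Task Main()
    {
        Guid postId = Guid.NewGuid();
        var handler = new GetBlogPostQueryHandler();

        BlogPost post = await handler.ExecuteAsync(new GetBlogPostQuery { PostId = postId }, null);

        // The handler should echo back the post ID it was given.
        Console.WriteLine(post.PostId == postId); // prints True
    }
}
```

The same call drops straight into a unit test in whichever test framework you prefer, since nothing here depends on HTTP or on the Function host.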

At this point we’ve written our application logic and you should have a solution structure that looks like this:

With that in place it’s time to surface this as a REST endpoint on an Azure Function. To do this we need to add a class into the project that implements the IFunctionAppConfiguration interface. This class is used in two ways: firstly, the FunctionMonkey.Compiler package will look for it in order to compile the assembly containing our function triggers and the associated JSON; secondly, it will be invoked at runtime to provide an operating environment that supplies implementations for our cross-cutting concerns.

Create a class called ServerlessBlogConfiguration and add it to the root of the project:

    public class ServerlessBlogConfiguration : IFunctionAppConfiguration
    {
        public void Build(IFunctionHostBuilder builder)
        {
            builder
                .Setup((serviceCollection, commandRegistry) =>
                {
                    commandRegistry.Discover<ServerlessBlogConfiguration>();
                })
                .Functions(functions => functions
                    .HttpRoute("/api/v1/post", route => route
                        .HttpFunction<GetBlogPostQuery>(HttpMethod.Get)
                    )
                );
        }
    }

The interface requires us to implement the Build method, which is supplied an IFunctionHostBuilder, and it’s this we use to define both our Azure Functions and a runtime environment. In this case it’s very simple.

Firstly in the Setup method we use the supplied commandRegistry (an ICommandRegistry interface – for more details on my commanding framework please see the documentation here) to register our command handlers (our GetBlogPostQueryHandler class) via a discovery approach (supplying ServerlessBlogConfiguration as a reference type for the assembly to search). The serviceCollection parameter is an IServiceCollection interface from Microsoft’s IoC extensions package that we can use to register any further dependencies.

Secondly we define our Azure Functions based on commands. As we’re building a REST API we can also group HTTP functions by route, essentially associating a command type with a verb (this is optional: you can just define a set of functions directly without routing). A quick note on routes: proxies don’t work in the local debug host for Azure Functions, but a proxies.json file is generated that will work when the functions are published to Azure.
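
To make the discovery step described above a little more concrete, here is a rough sketch of what scanning an assembly for handlers can look like using plain reflection. This is not the commanding framework's implementation: DiscoverySketch and FindHandlers are hypothetical names, and the ICommandHandler interface and the three small classes are stand-ins declared only so the snippet compiles on its own.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Stand-in marker interface (assumption): it mirrors the shape used in this post
// so the sketch compiles without the commanding package.
public interface ICommandHandler<TCommand, TResult> { }

// Minimal stand-ins for the types defined earlier in the post.
public class BlogPost { }
public class GetBlogPostQuery { }
public class GetBlogPostQueryHandler : ICommandHandler<GetBlogPostQuery, BlogPost> { }

public static class DiscoverySketch
{
    // Hypothetical helper: returns every concrete class in the assembly that
    // contains TReference and implements ICommandHandler<,>.
    public static IReadOnlyList<Type> FindHandlers<TReference>()
    {
        Assembly assembly = typeof(TReference).Assembly;
        return assembly.GetTypes()
            .Where(type => type.IsClass && !type.IsAbstract)
            .Where(type => type.GetInterfaces().Any(IsHandlerInterface))
            .ToList();
    }

    // True when the interface is a closed form of ICommandHandler<,>.
    private static bool IsHandlerInterface(Type candidate)
    {
        return candidate.IsGenericType
            && candidate.GetGenericTypeDefinition() == typeof(ICommandHandler<,>);
    }
}
```

Calling `DiscoverySketch.FindHandlers<GetBlogPostQueryHandler>()` returns a list that includes `GetBlogPostQueryHandler`. The reference type is only used to pick the assembly, which is why the configuration class itself can be passed to Discover.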

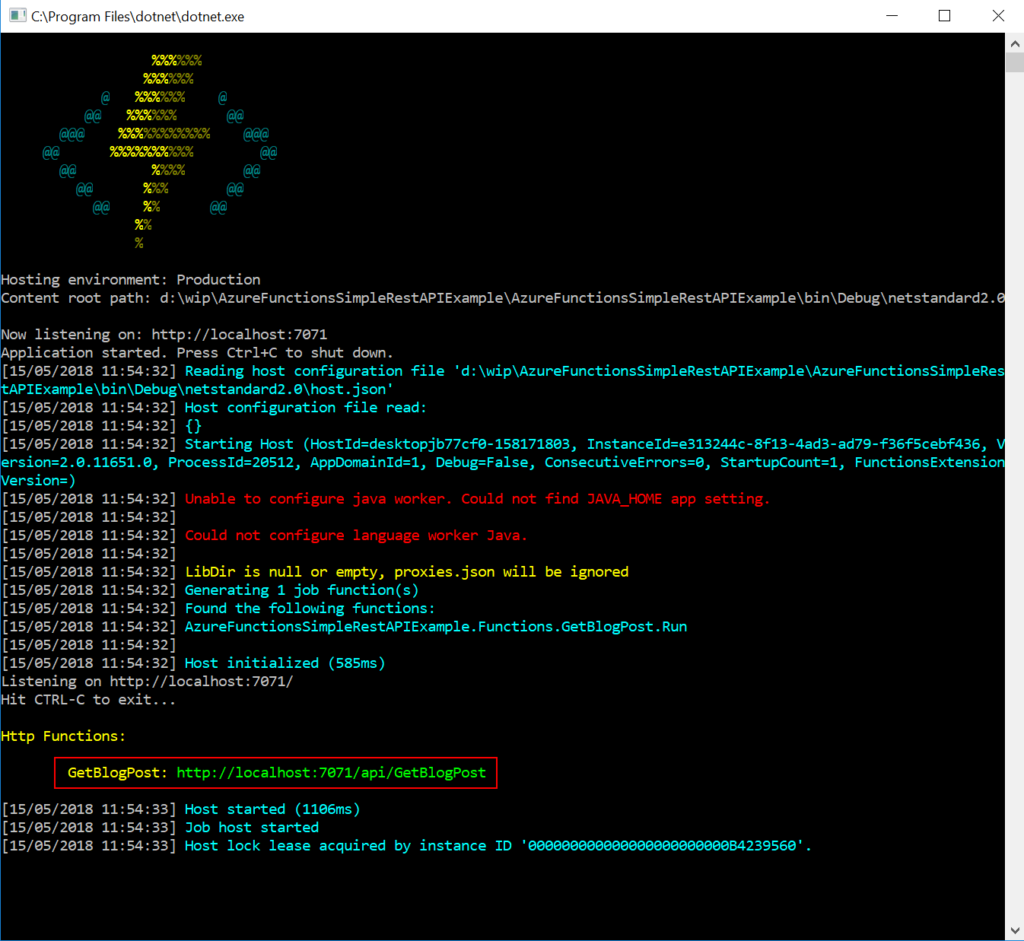

If you run the project you should see the Azure Functions local host start and an HTTP function that corresponds to our GetBlogPostQuery command being exposed:

The function naming uses a convention-based approach which gives each function the same name as the command but with a postfix of Command or Query removed, hence GetBlogPost.
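
The naming convention just described is easy to picture as a small string operation. The helper below is a hypothetical illustration of that rule, not code taken from the framework; FunctionNaming and FromCommandTypeName are names invented for this sketch.

```csharp
using System;

public static class FunctionNaming
{
    // Postfixes the convention strips from a command type name.
    private static readonly string[] Postfixes = { "Command", "Query" };

    // Hypothetical helper: derives a function name from a command type name by
    // removing a trailing "Command" or "Query" if one is present.
    public static string FromCommandTypeName(string typeName)
    {
        foreach (string postfix in Postfixes)
        {
            bool hasPostfix = typeName.EndsWith(postfix, StringComparison.Ordinal);

            // Leave the name alone if stripping would produce an empty string.
            if (hasPostfix && typeName.Length > postfix.Length)
            {
                return typeName.Substring(0, typeName.Length - postfix.Length);
            }
        }

        return typeName;
    }
}
```

With this sketch, `FunctionNaming.FromCommandTypeName("GetBlogPostQuery")` gives `GetBlogPost` and `FunctionNaming.FromCommandTypeName("CreateBlogPostCommand")` gives `CreateBlogPost`, while a name with neither postfix is returned unchanged.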

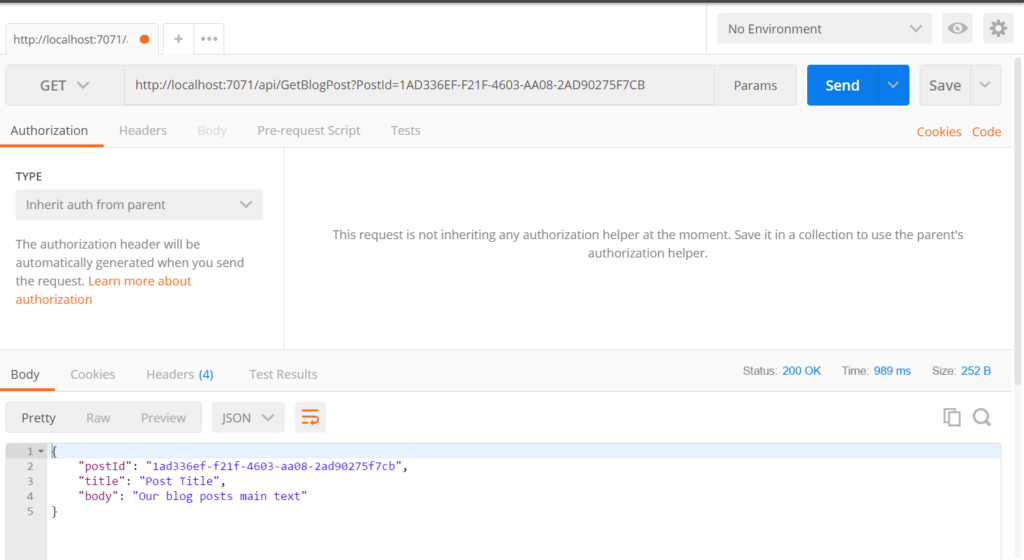

If we run this in Postman we can see that it works as we’d expect – it runs the code in our GetBlogPostQueryHandler:

The example here is fairly simple but already a little cleaner than rolling out functions by hand. However it starts to come into its own when we have more Functions to define. Let’s elaborate on our configuration block:

    public class ServerlessBlogConfiguration : IFunctionAppConfiguration
    {
        private const string ObjectIdentifierClaimType = "http://schemas.microsoft.com/identity/claims/objectidentifier";

        public void Build(IFunctionHostBuilder builder)
        {
            builder
                .Setup((serviceCollection, commandRegistry) =>
                {
                    serviceCollection.AddTransient<IPostRepository, CosmosDbPostRepository>();
                    commandRegistry.Discover<ServerlessBlogConfiguration>();
                })
                .Authorization(authorization => authorization
                    .TokenValidator<BearerTokenValidator>()
                    .Claims(mapping => mapping
                        // Map the object identifier claim (the constant declared above)
                        // onto the AuthenticatedUserId property of our commands
                        .MapClaimToCommandProperty(ObjectIdentifierClaimType, "AuthenticatedUserId"))
                )
                .Functions(functions => functions
                    .HttpRoute("/api/v1/post", route => route
                        .HttpFunction<GetBlogPostQuery>("/{postId}", HttpMethod.Get)
                        .HttpFunction<CreateBlogPostCommand>(HttpMethod.Post)
                    )
                    .HttpRoute("/api/v1/user", route => route
                        .HttpFunction<GetUserProfileQuery>(HttpMethod.Get)
                        .HttpFunction<UpdateProfileCommand>(HttpMethod.Put)
                        .HttpFunction<GetUserBlogPostsQuery>("/posts", HttpMethod.Get)
                    )
                    .StorageQueueFunction<CreateZipBackupCommand>("StorageAccountConnectionString", "BackupQueue")
                );
        }
    }

In this example we’ve defined more API endpoints and we’ve also introduced a Function with a storage queue trigger. This behaves just like our HTTP functions but, instead of being triggered by an HTTP request, it is triggered by an item on a queue, so the same principles apply to this trigger type (note: I haven’t yet pushed this to the public package).

You can also see me registering a dependency in our IoC container – this will be available for injection across the system and into any of our command handlers.

We’ve also added support for token-based security with our Authorization block. This adds in a class that validates tokens and builds a ClaimsPrincipal from them, which we can then use by mapping claims onto the properties of our commands. This works in exactly the same way as it does in my REST API commanding library. With or without claims mapping or authorization, sensitive properties can be protected from user access with the SecurityPropertyAttribute, again in the same way as in the REST API library.
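
As a rough mental model of the claims mapping described above, the sketch below copies a claim value from a ClaimsPrincipal onto a named command property using reflection. It is an illustration under stated assumptions, not the framework's implementation: ClaimsMappingSketch and its method are hypothetical, and the properties on this stand-in CreateBlogPostCommand are invented for the example (only the AuthenticatedUserId name comes from the configuration block).

```csharp
using System;
using System.Reflection;
using System.Security.Claims;

// Stand-in command (assumption): the property set is invented for illustration.
public class CreateBlogPostCommand
{
    public string AuthenticatedUserId { get; set; }
    public string Title { get; set; }
}

public static class ClaimsMappingSketch
{
    // Hypothetical helper: if the principal carries the given claim and the command
    // has a writable string property with the given name, copy the value across.
    public static void MapClaimToCommandProperty(
        ClaimsPrincipal principal, string claimType, object command, string propertyName)
    {
        Claim claim = principal.FindFirst(claimType);
        if (claim == null)
        {
            return; // Nothing to map: the token did not include this claim.
        }

        PropertyInfo property = command.GetType().GetProperty(propertyName);
        if (property == null || !property.CanWrite || property.PropertyType != typeof(string))
        {
            return; // This command does not expose a matching property.
        }

        property.SetValue(command, claim.Value);
    }
}
```

Given a principal built from `new ClaimsIdentity(new[] { new Claim(claimType, "user-123") }, "Bearer")`, calling `ClaimsMappingSketch.MapClaimToCommandProperty(principal, claimType, command, "AuthenticatedUserId")` leaves `command.AuthenticatedUserId` set to `user-123`. The point of doing this on the server side is that the user ID comes from the validated token rather than from anything the caller put in the request.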

The code for the above can be found in GitHub.

Development Status

The eagle-eyed will have noticed that the packages I’ve referenced here are in preview (as is the v2 Azure Functions runtime itself). I certainly still have more work to do, but they are already usable and I’m using them in three different serverless projects at the moment. As such, development on them is moving quite fast: I’m essentially dogfooding.

As a rough roadmap I’m planning on tackling the following things (no particular order, they’re all important before I move out of beta):

- Fix bugs and tidy up code (see the automated tests item below)

- Documentation

- Validation of input (commands)

- Open API / Swagger generation

- Additional trigger / function support

- Return types

- Automated tests – lots of automated tests. Currently the framework is not well covered by automated tests, mainly because this was a non-trivial thing to figure out. I wasn’t quite sure what was going to work and what wouldn’t, so a lot of the early work was trying different approaches and experimenting. Now all that’s settled down I need to get some tests written.

I’ve pushed this out as a couple of people have been asking if they can take a look and I’d really like to get some feedback on it. The code for the implementation of the NuGet packages is in GitHub here (make sure you’re in the develop branch).

Please do let me have any feedback over on Twitter or on the GitHub Issues page for this project.
