A while back I wrote about the improvements Microsoft were working on in regard to the HTTP trigger function scaling issues. The Functions team got in touch with me this week to let me know that they had an initial set of improvements rolling out to Azure.

To get an idea of how significant these improvements are I’m first going to contrast this new update to Azure Functions with my previous measurements and then re-examine Azure Functions in the wider context of the other cloud vendors. I’m separating the Azure vs Azure comparison from the Azure vs Other Cloud Vendors comparison because the former, while interesting given where Azure found itself in the last set of tests and useful for highlighting how things have improved, isn’t really relevant in terms of a “here and now” vendor comparison.

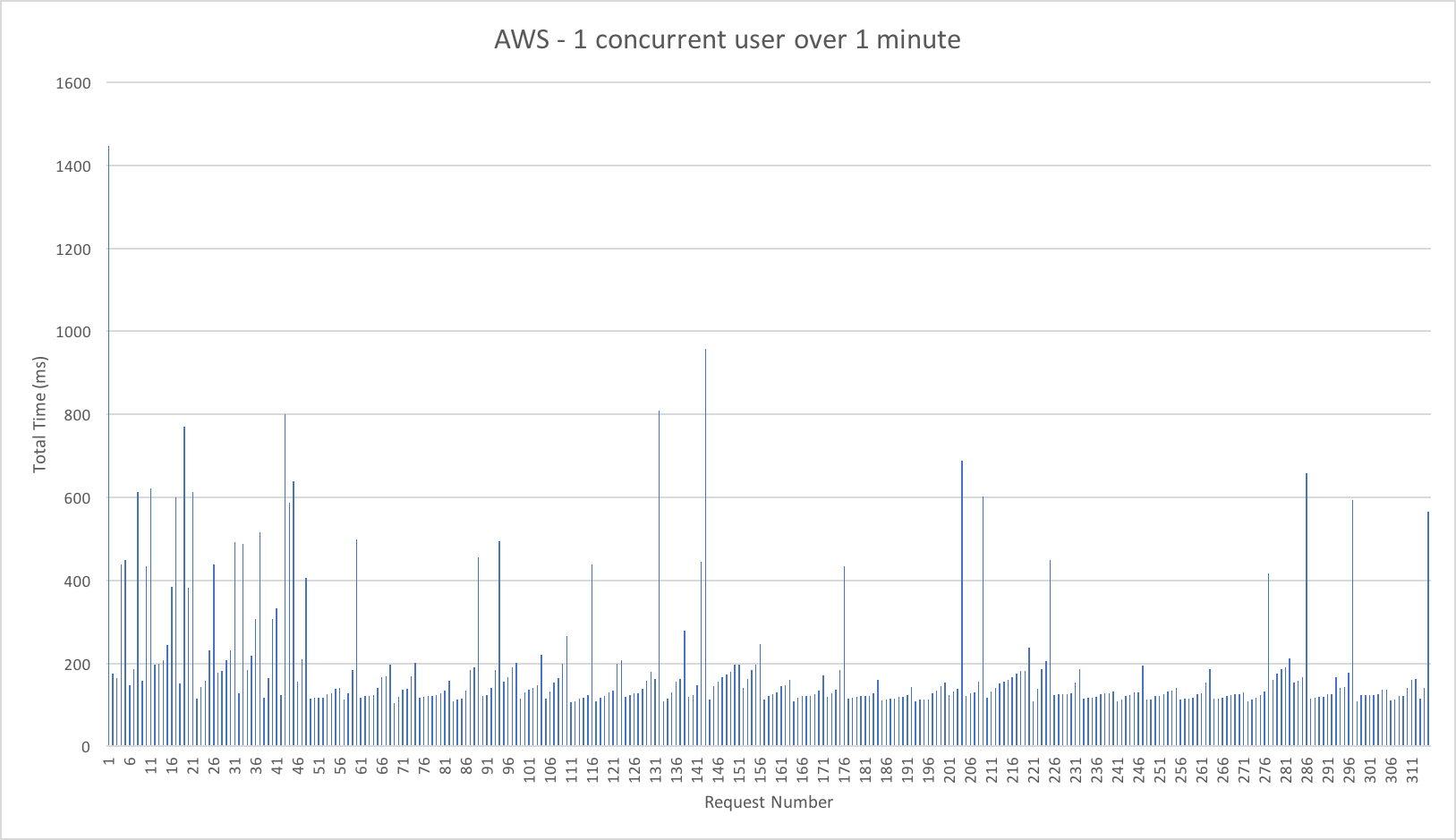

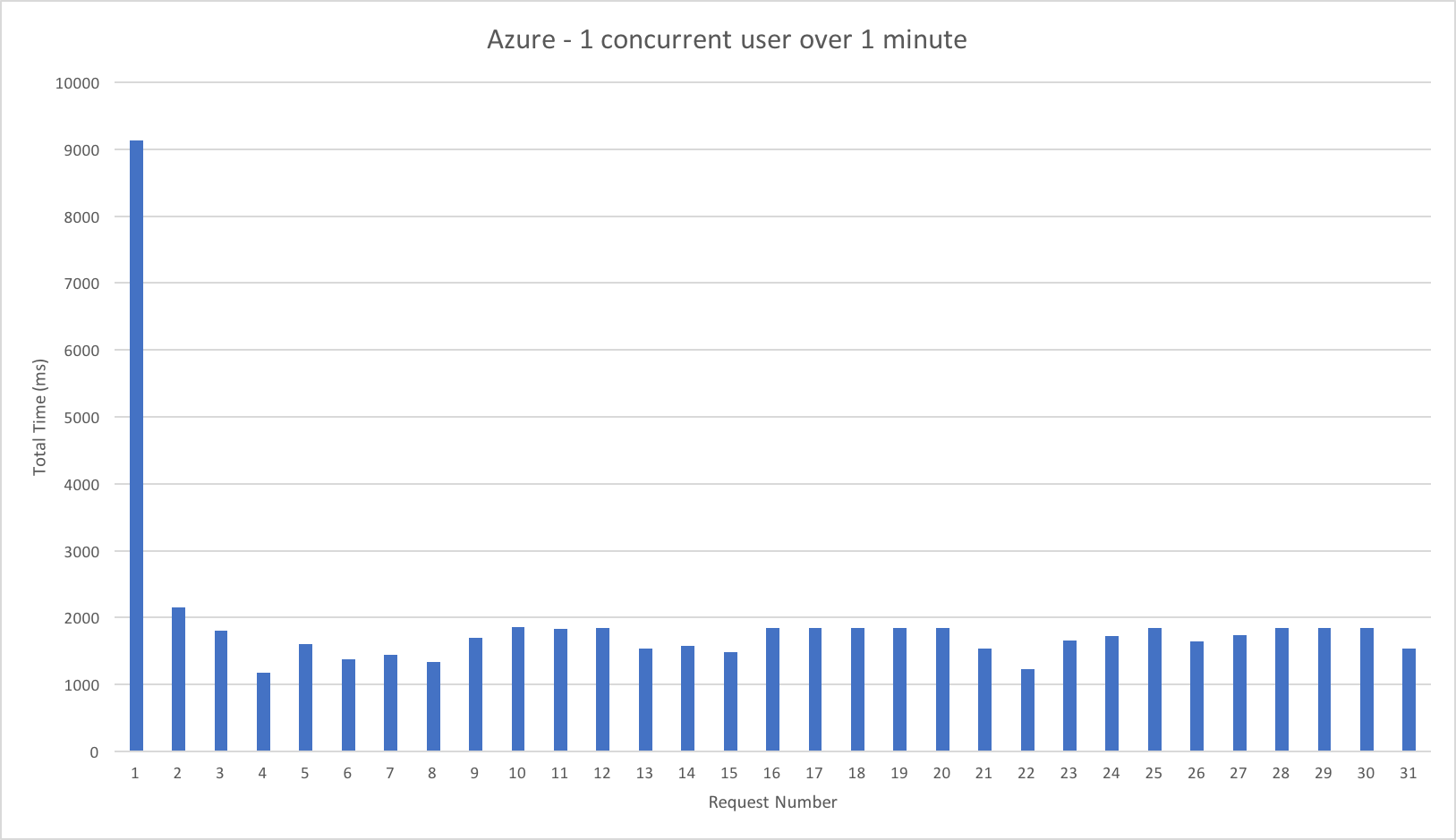

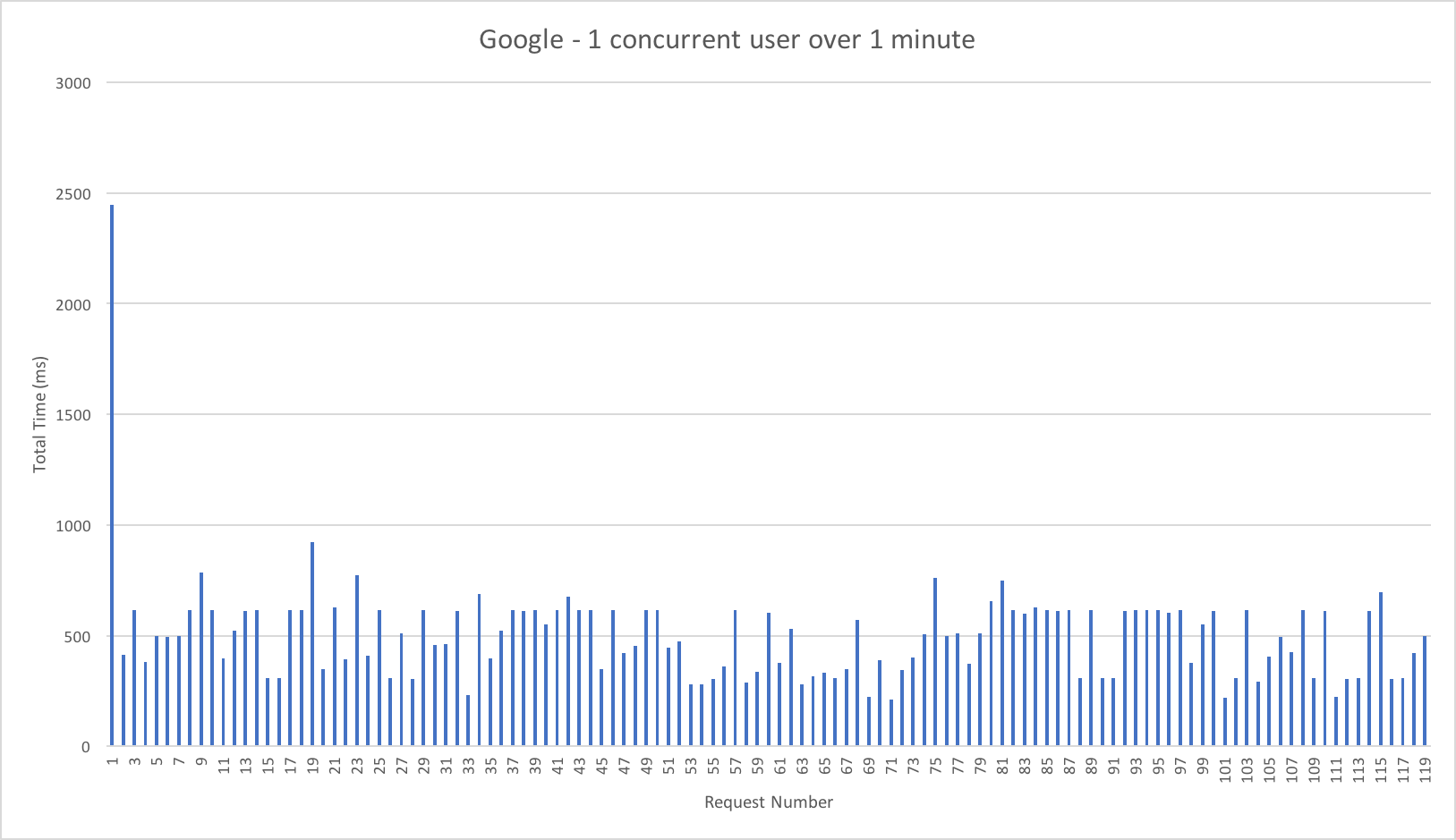

A quick refresher on the tests – the majority of them are run with a representative real world mix of a small amount of compute and a small level of IO, though tests are included that remove these and involve no IO and practically no compute (return a string).

Although the improvements aren’t yet enabled by default, towards the end of this post I’ll highlight how you can enable them for your own Function Apps.

Azure Function Improvements

First I want to take a look at Azure Functions in isolation and see just how the new execution and scaling model differs from the one I tested in January. For consistency the tests are conducted against the exact same app I tested back in January using the same VSTS environment.

Gradual Ramp Up

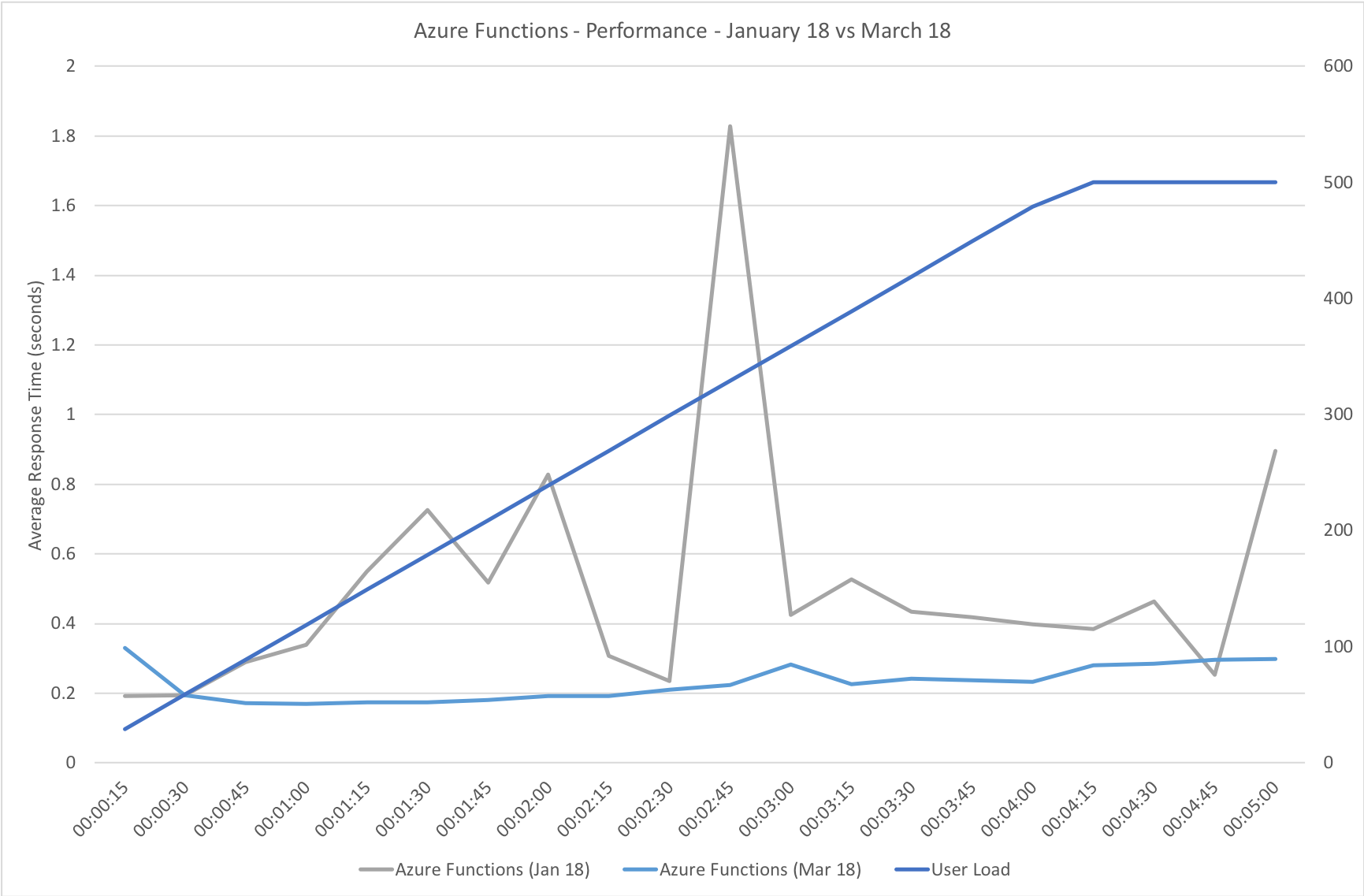

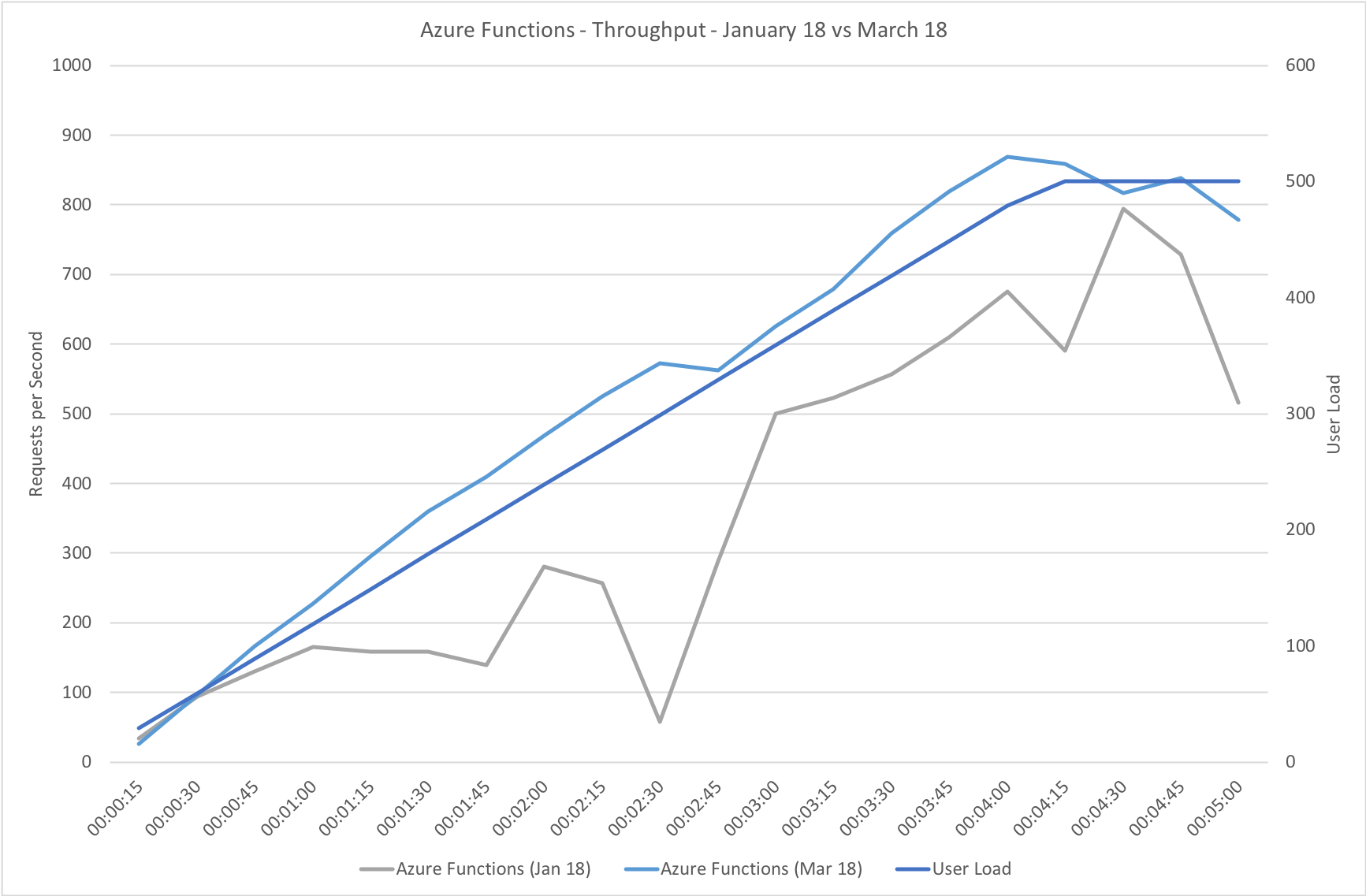

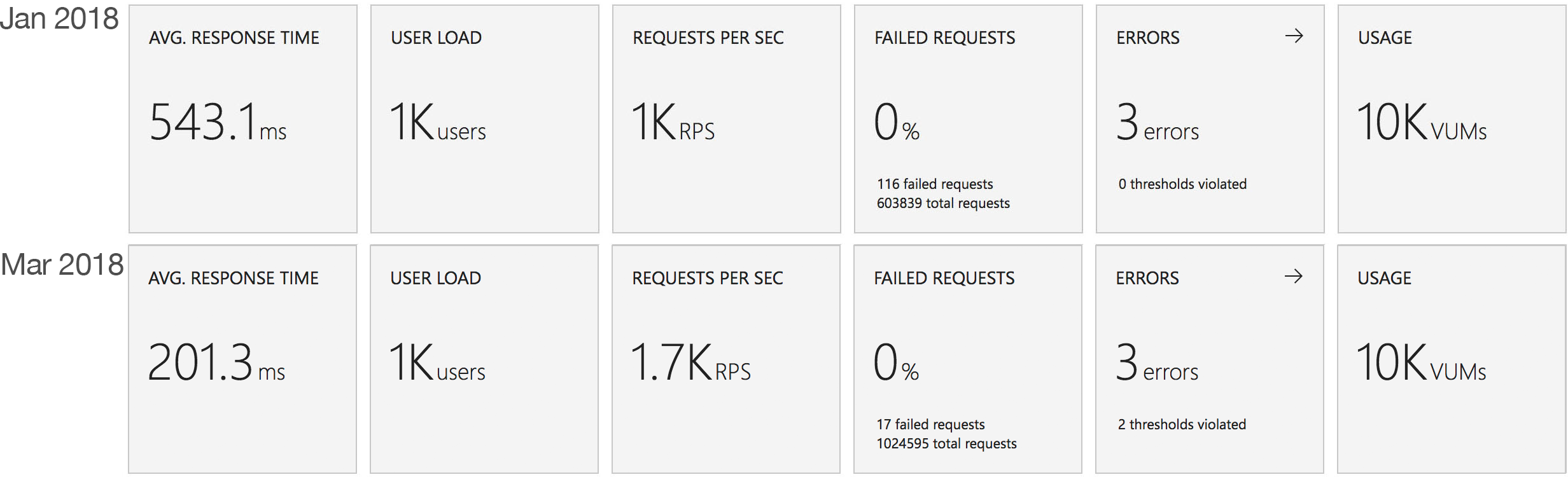

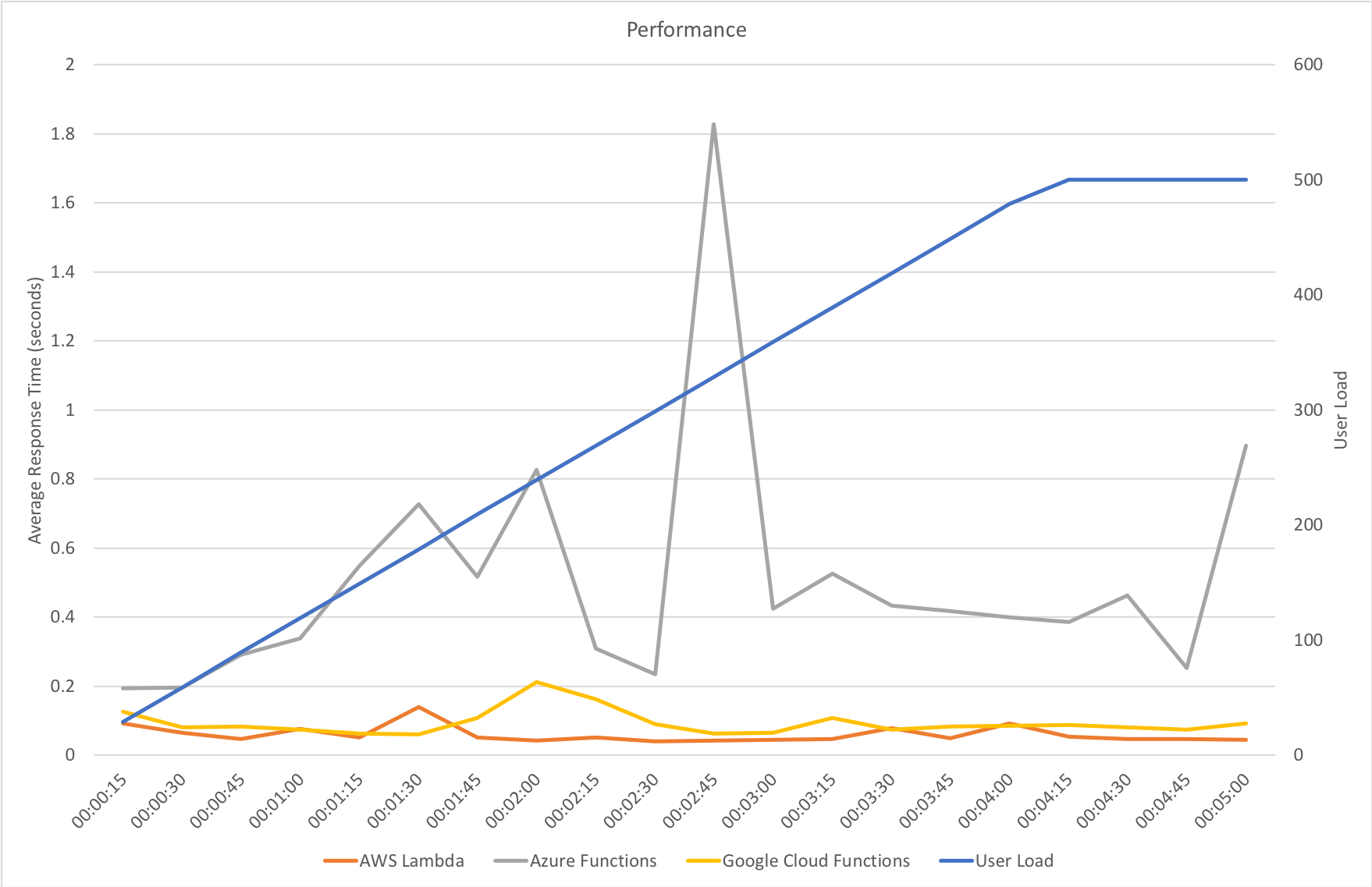

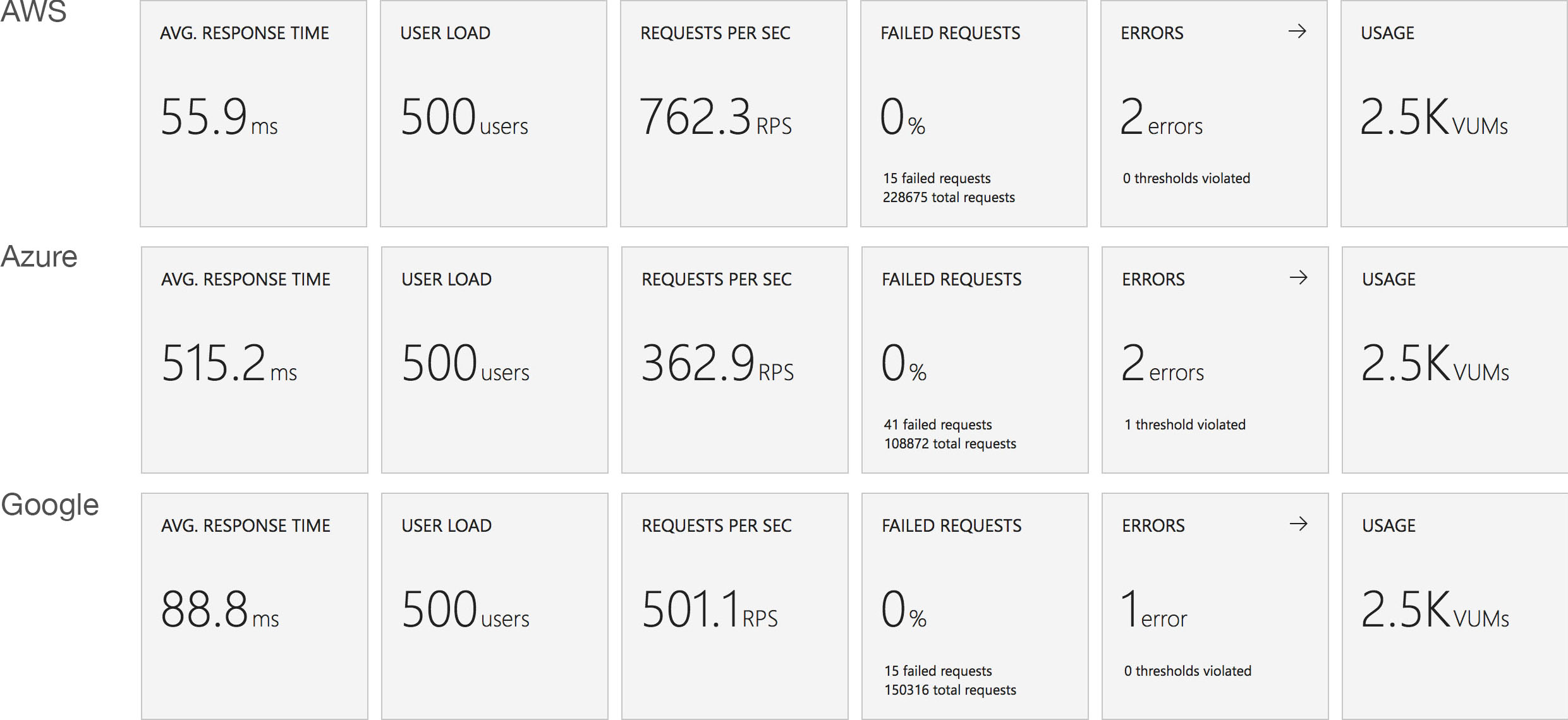

This test case starts with 1 user and adds 2 users per second up to a maximum of 500 concurrent users to demonstrate a slow and steady increase in load.
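As a rough illustration of what this load profile looks like, here is a minimal Python sketch of a stepped ramp. The function names and the exact stepping behaviour (users added in whole steps at fixed intervals) are my assumptions for illustration; the load test tool may apply its steps slightly differently. The same helper also covers the rapid ramp used later (10 users, adding 10 every 2 seconds, up to 1000).

```python
def concurrent_users(t_seconds, start, step, interval, peak):
    """Users active at t_seconds for a stepped ramp: begin at `start`,
    add `step` users every `interval` seconds, capped at `peak`."""
    steps_elapsed = int(t_seconds // interval)
    return min(peak, start + step * steps_elapsed)


def seconds_to_peak(start, step, interval, peak):
    """Seconds until the stepped ramp first reaches `peak` users."""
    remaining = max(0, peak - start)
    steps_needed = -(-remaining // step)  # ceiling division
    return steps_needed * interval


# Profiles taken from the test descriptions in this post.
gradual = dict(start=1, step=2, interval=1, peak=500)
rapid = dict(start=10, step=10, interval=2, peak=1000)

if __name__ == "__main__":
    print("gradual ramp reaches peak after", seconds_to_peak(**gradual), "seconds")
    print("rapid ramp reaches peak after", seconds_to_peak(**rapid), "seconds")
```

Under these assumptions the gradual ramp reaches its 500 user peak after 250 seconds, while the rapid ramp reaches 1000 users after 198 seconds, a little over 3 minutes into the run.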

This is the least demanding of my tests but we can immediately see how much better the new Functions model performs. When I ran these tests in January the response time was very spiky and averaged out around the 0.5 second mark – the new model holds a fairly steady 0.2 seconds for the majority of the run with a slight increase at the tail and manages to process over 50% more requests.

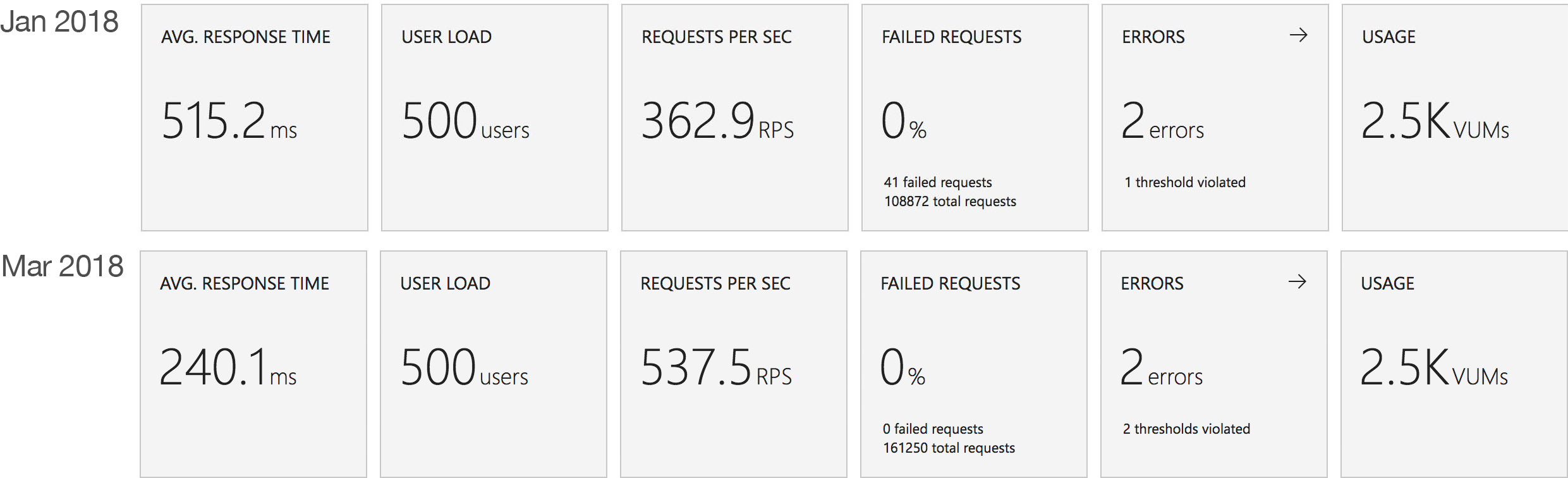

Rapid Ramp Up

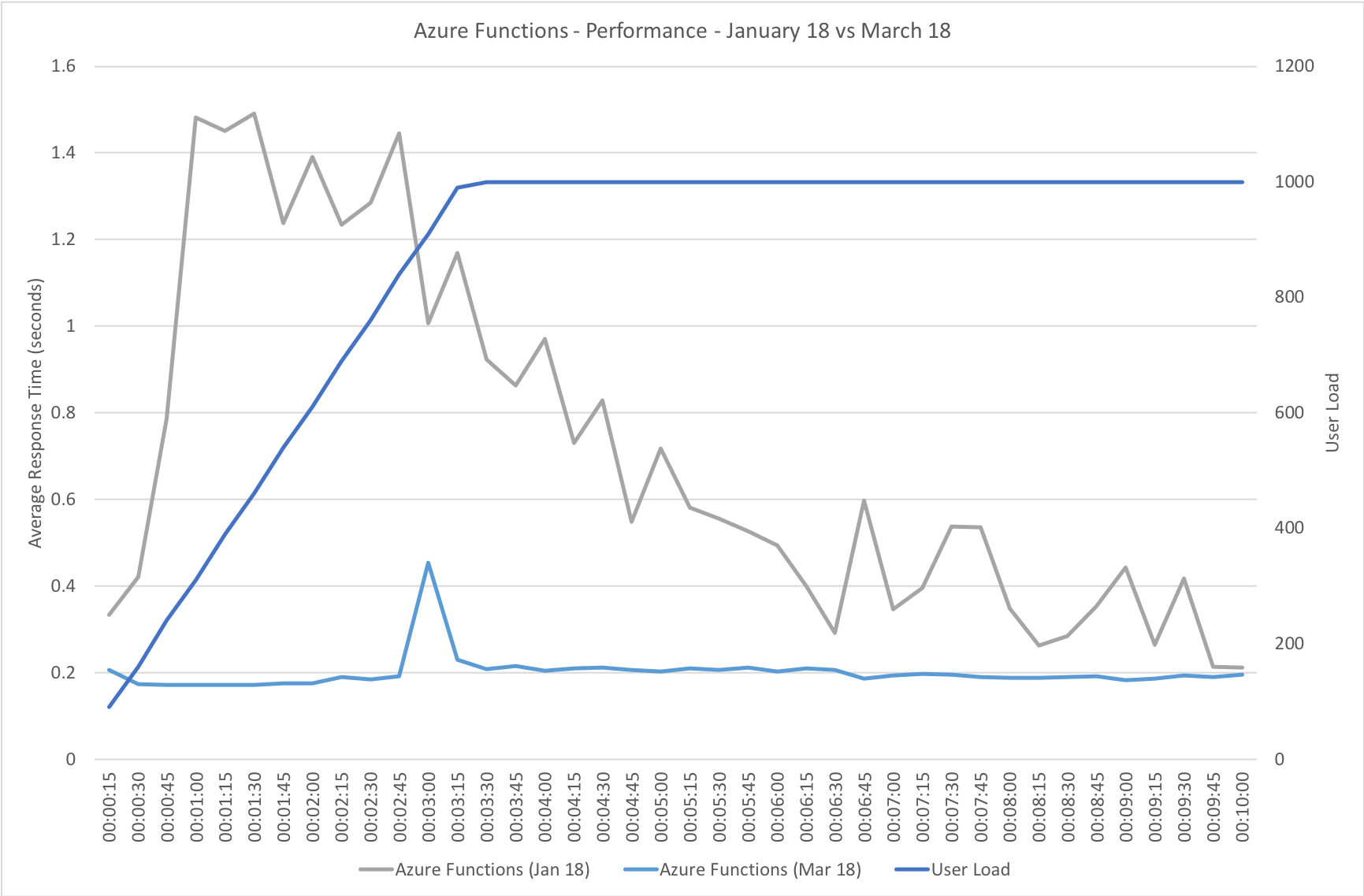

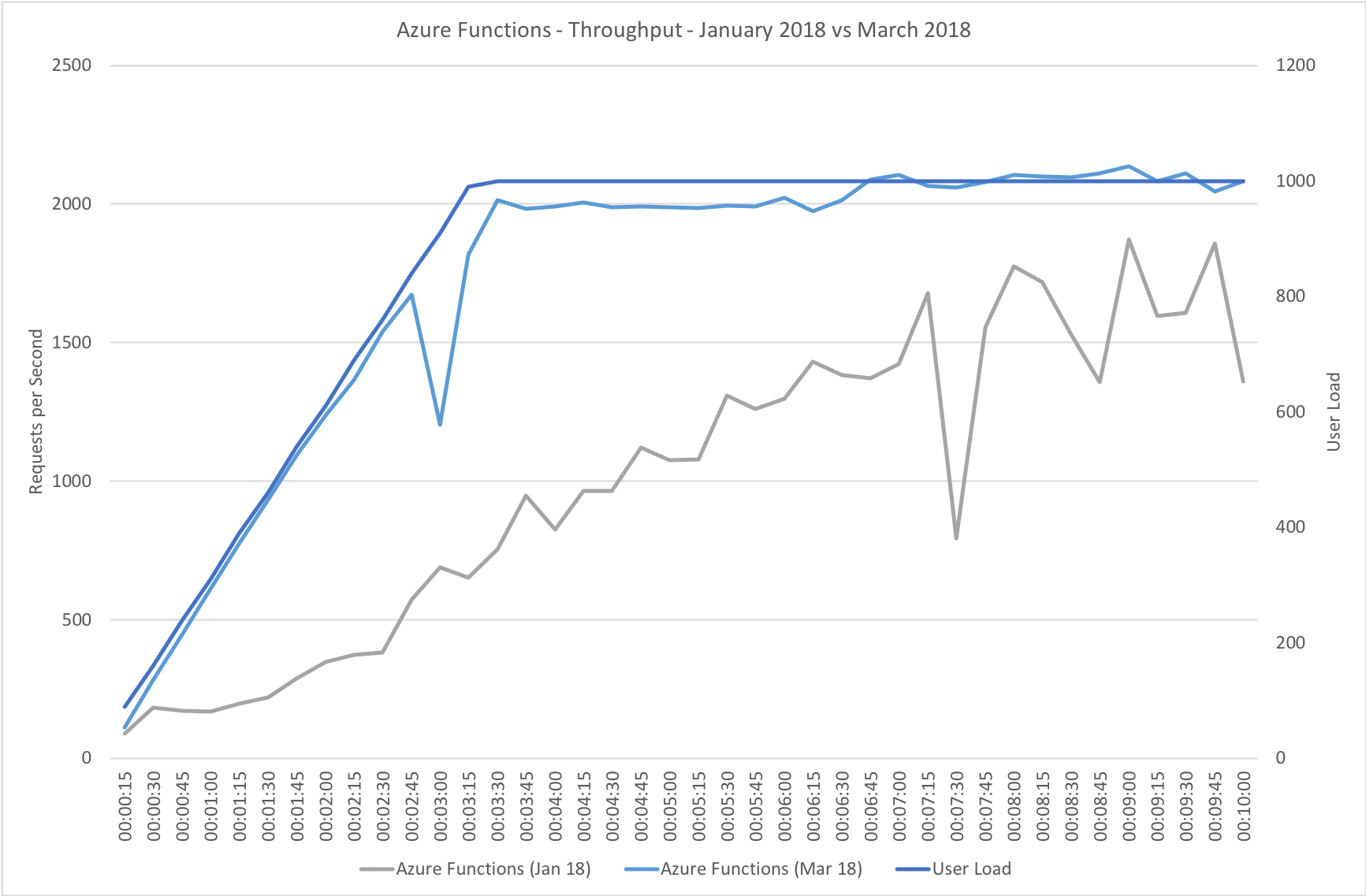

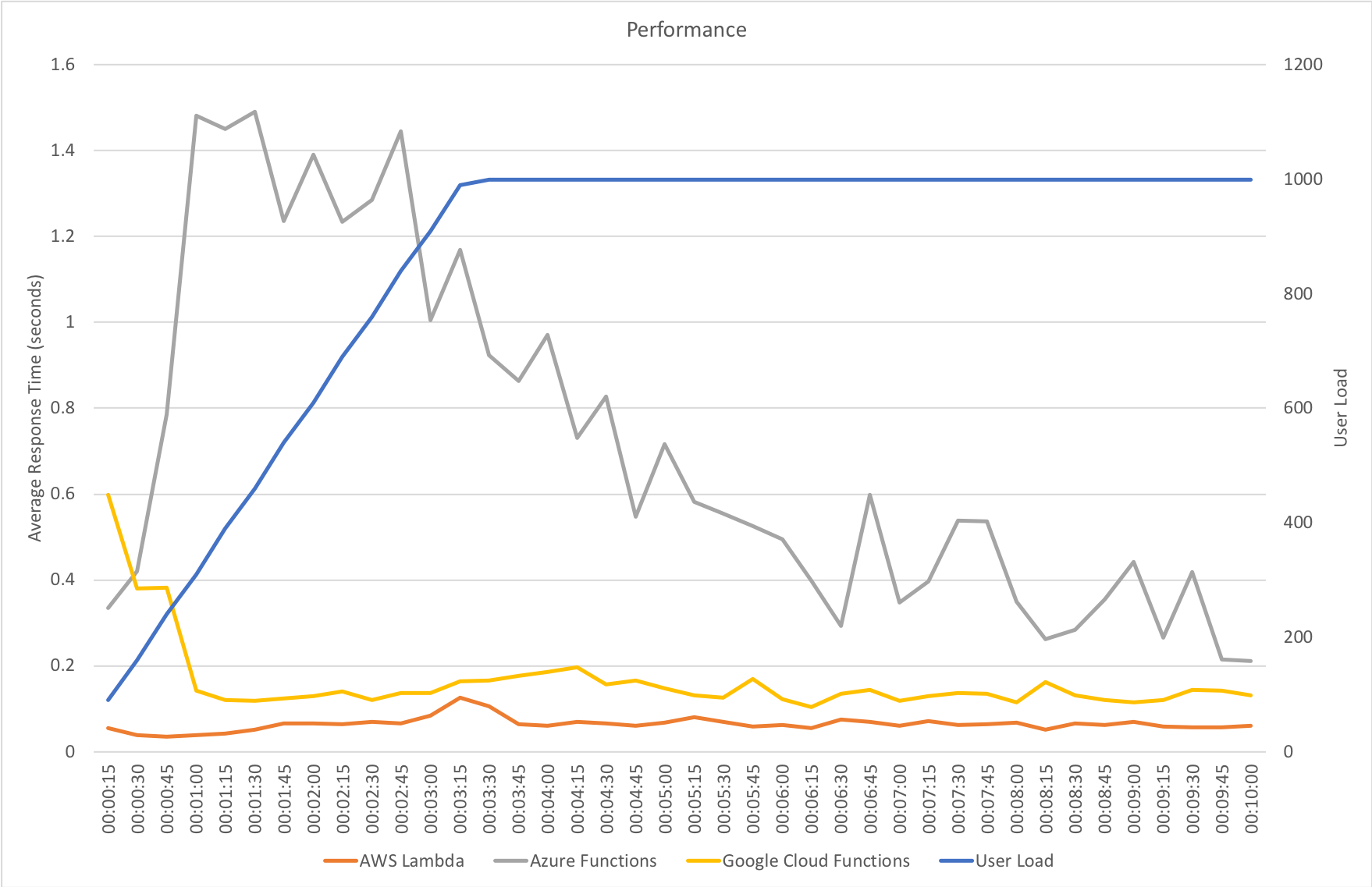

This test case starts with 10 users and adds 10 users every 2 seconds up to a maximum of 1000 concurrent users to demonstrate a more rapid increase in load and a higher peak concurrency.

In the previous round of tests Azure Functions really struggled to keep up with this rate of growth. After a significant period of stability in user volume it eventually reached a state of being semi-acceptable but the data vividly showed a system really straining to respond and gave me serious concerns about its ability to handle traffic spikes. In contrast the new model grows very evenly with the increasing demand and, other than a slight spike early on, maintains a steady response time throughout.

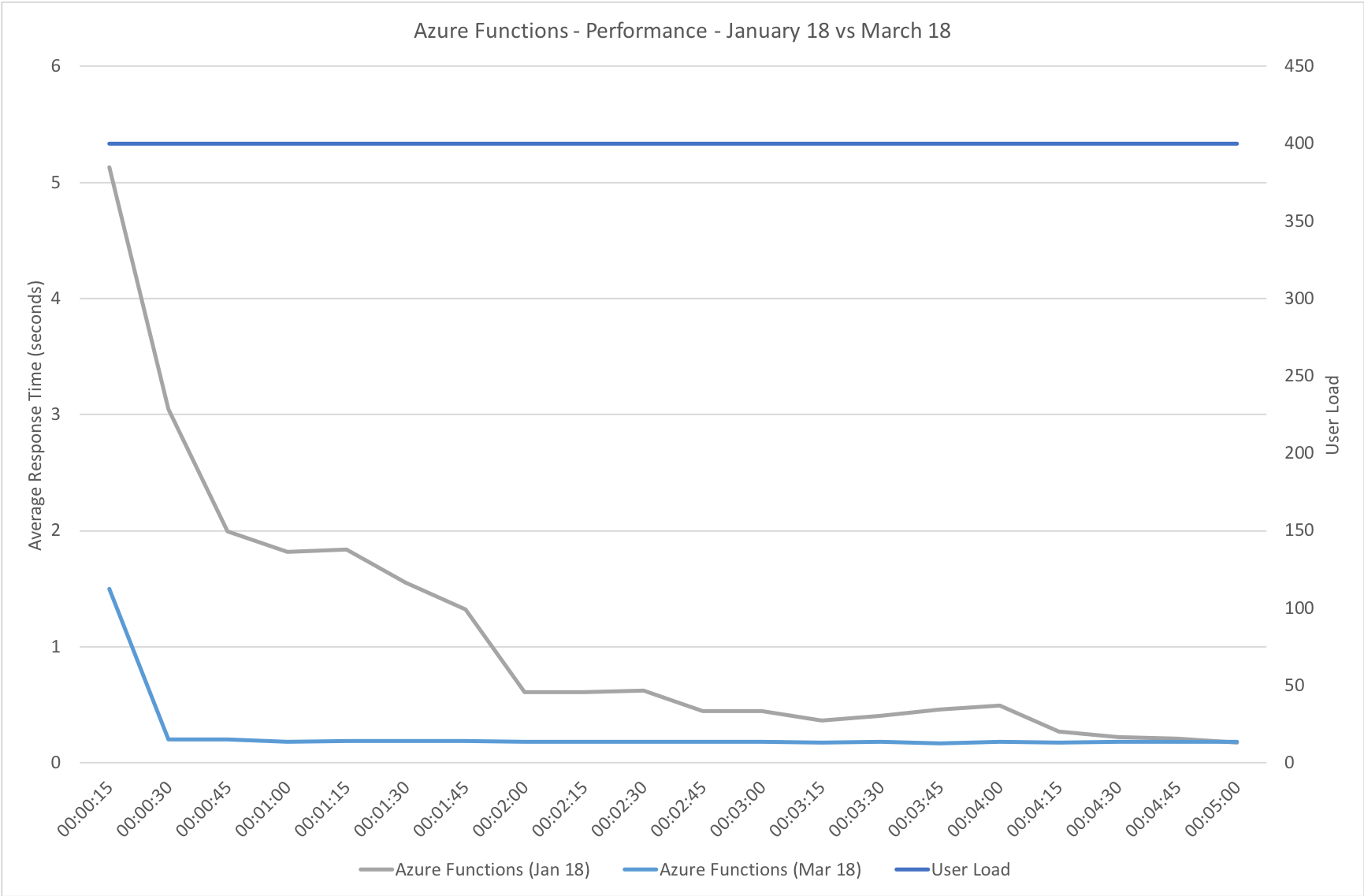

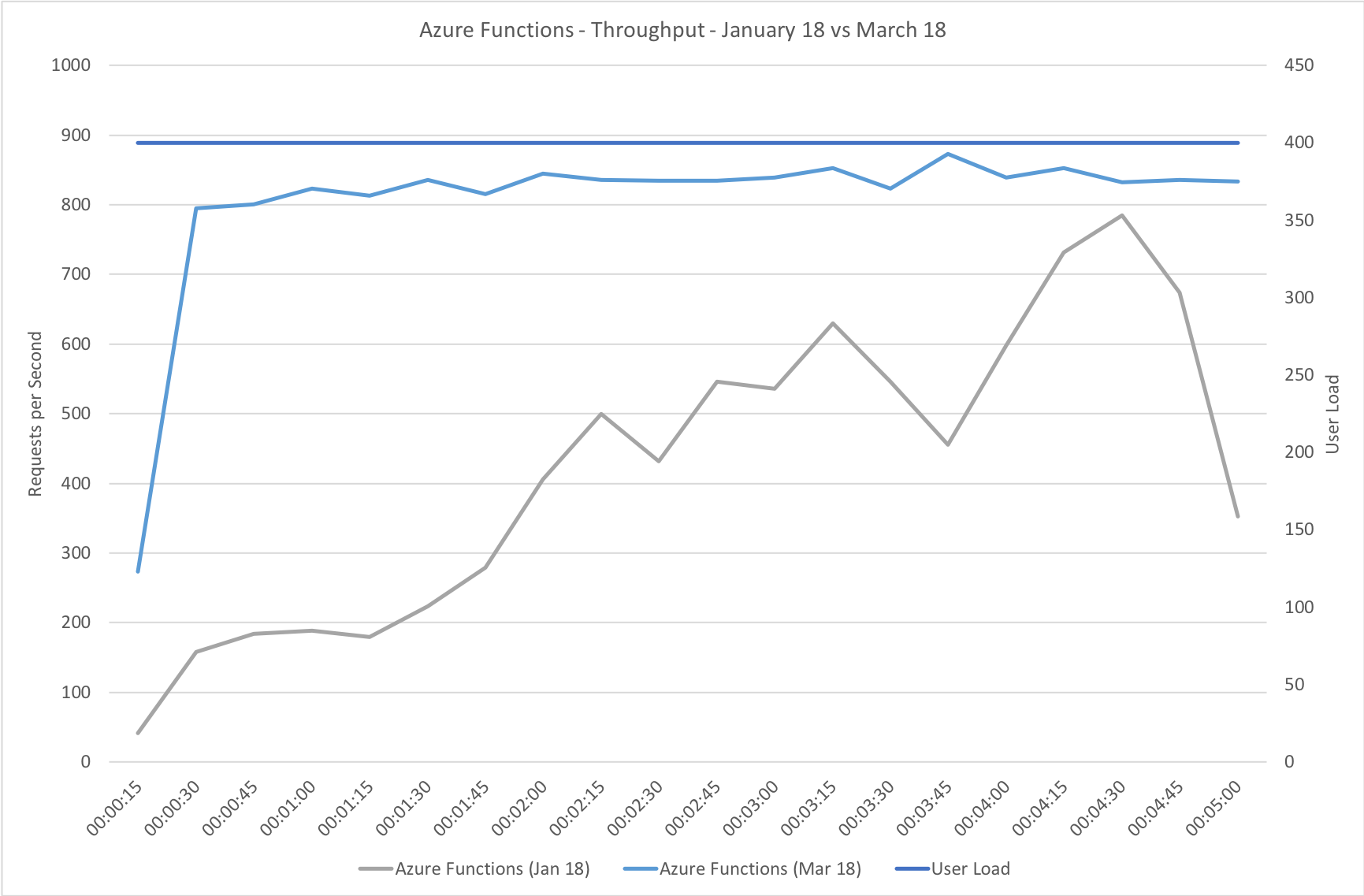

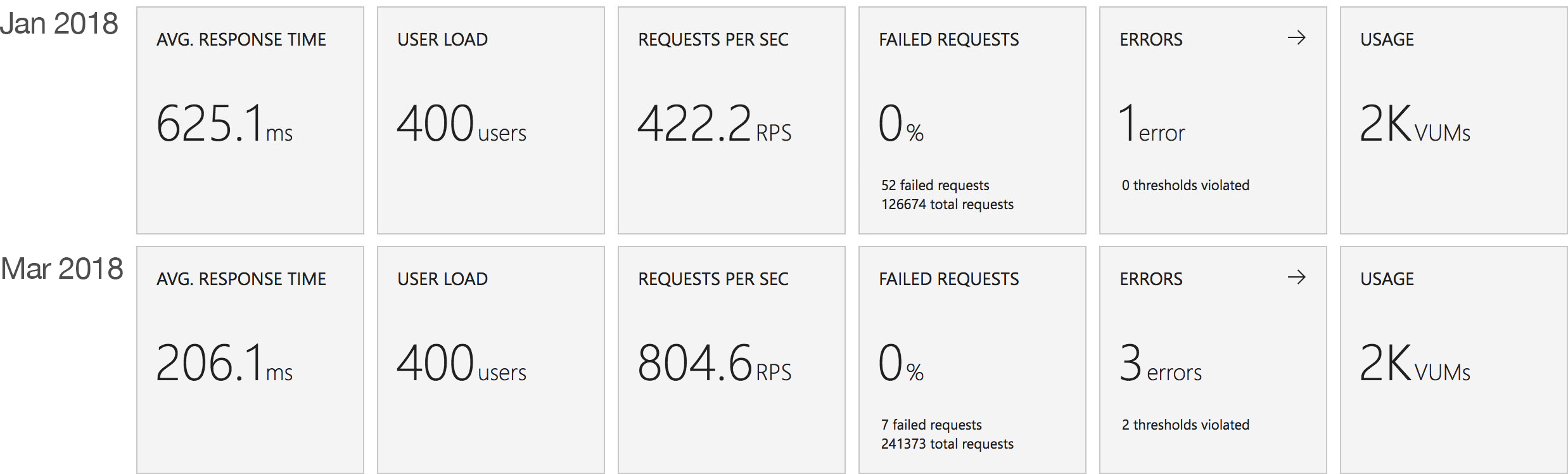

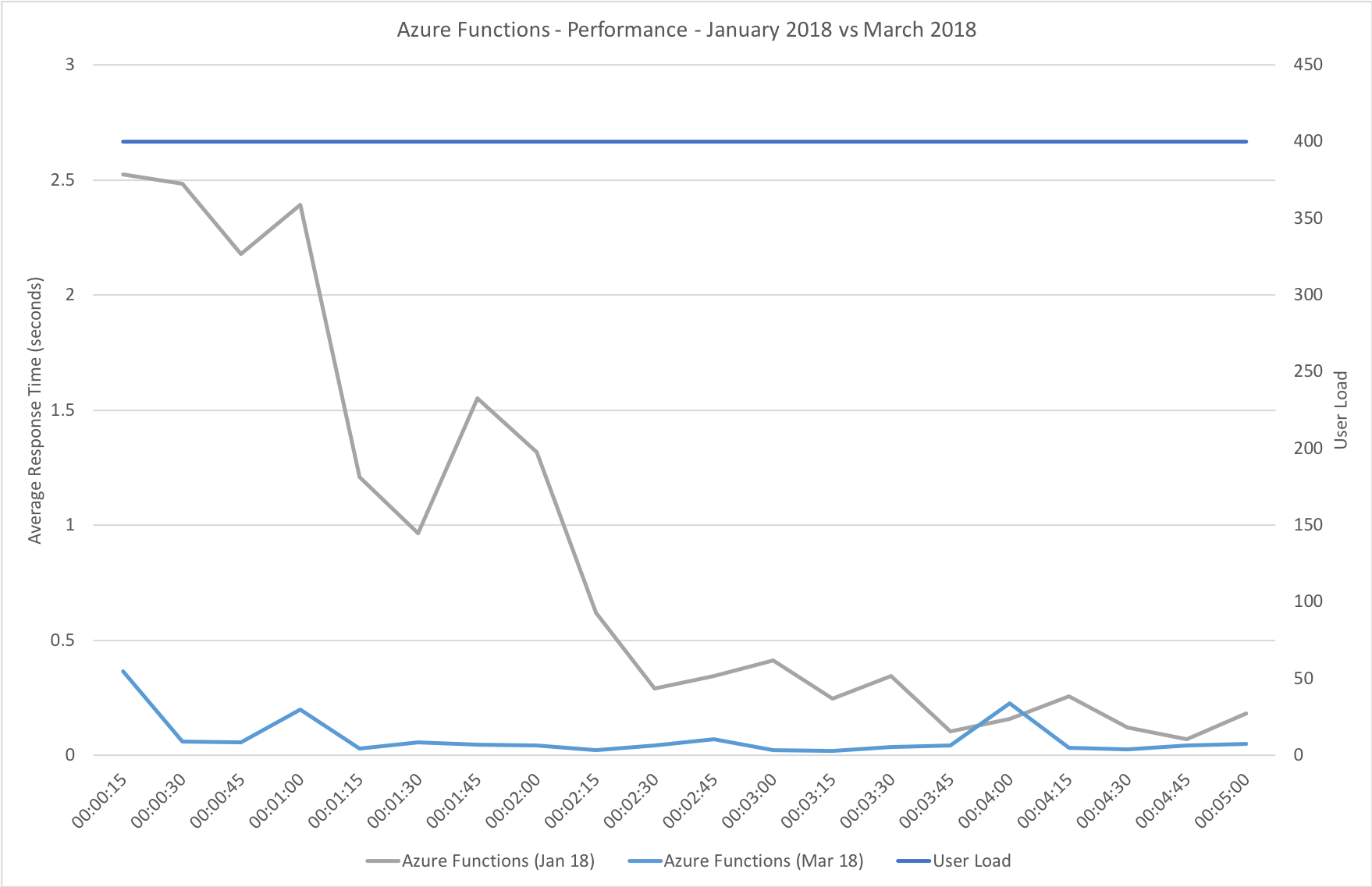

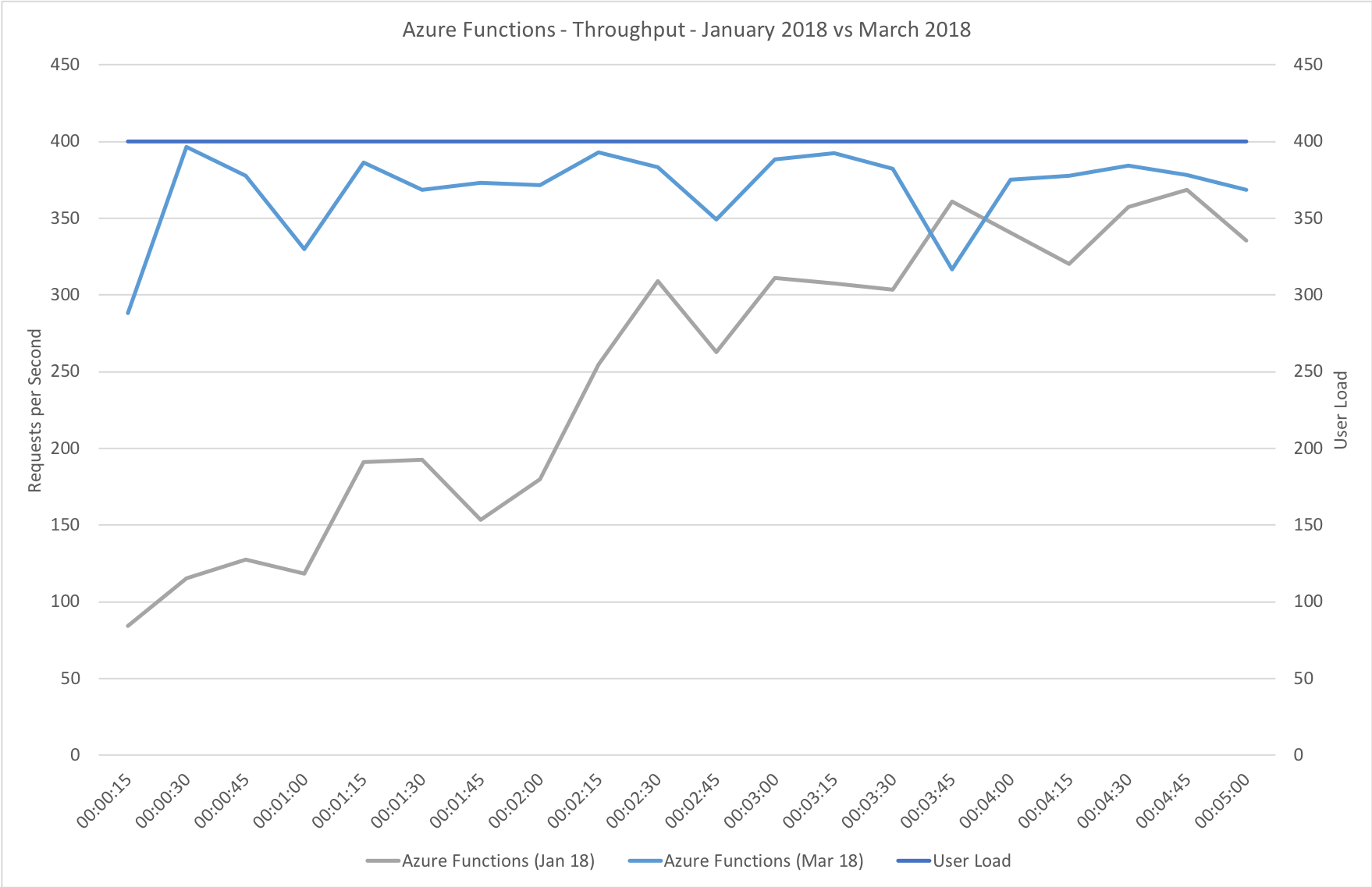

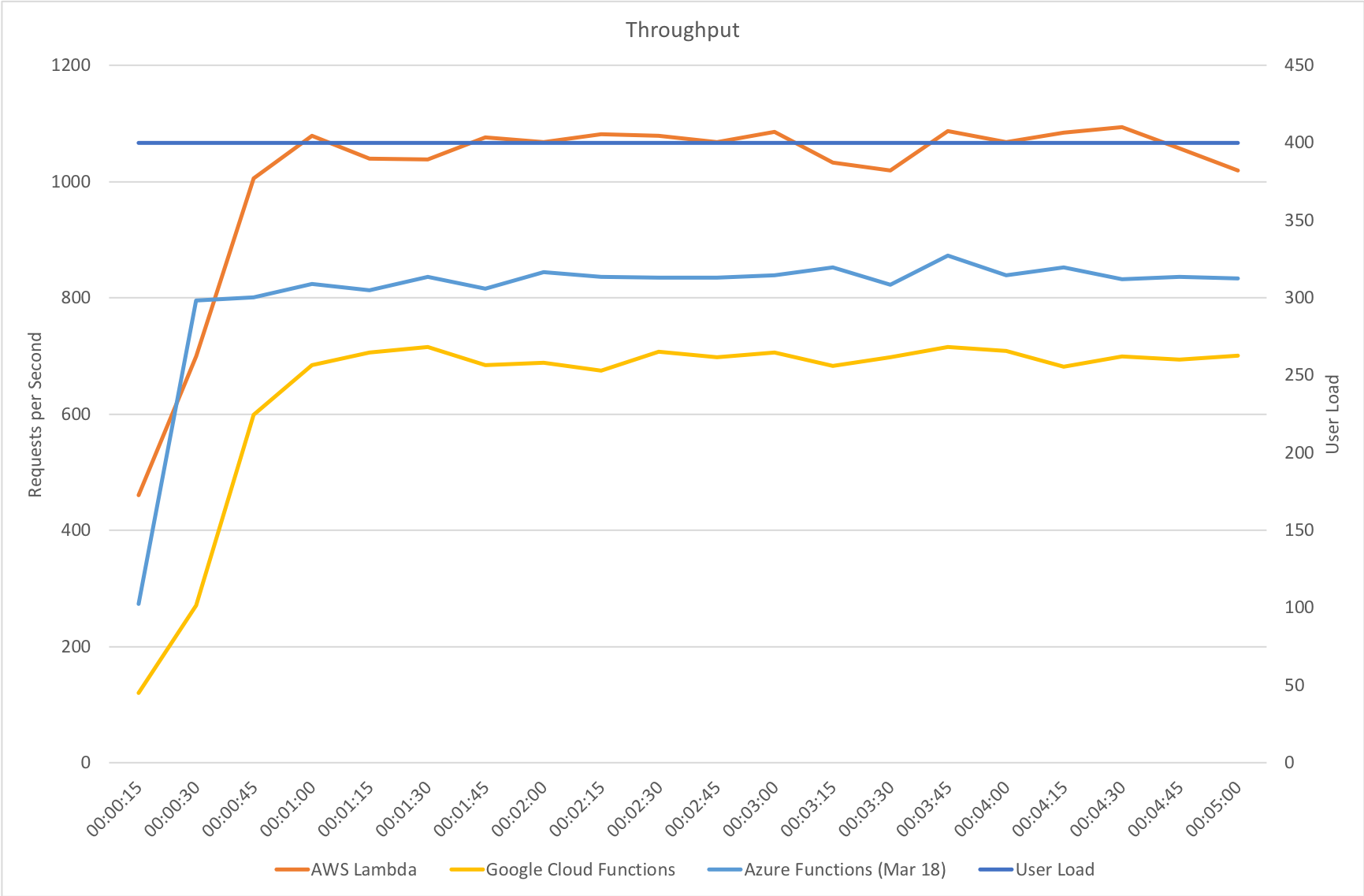

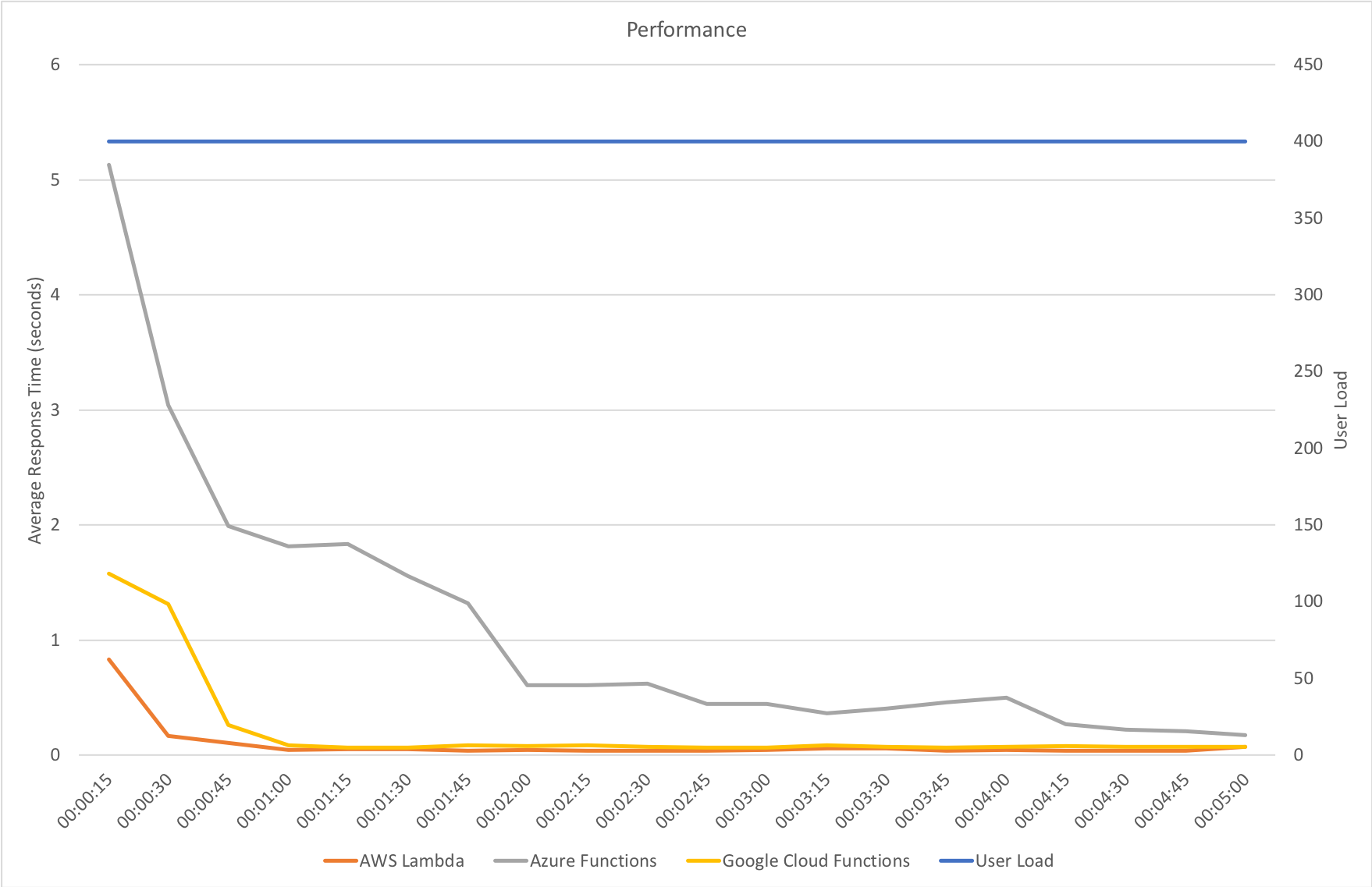

Immediate High Demand

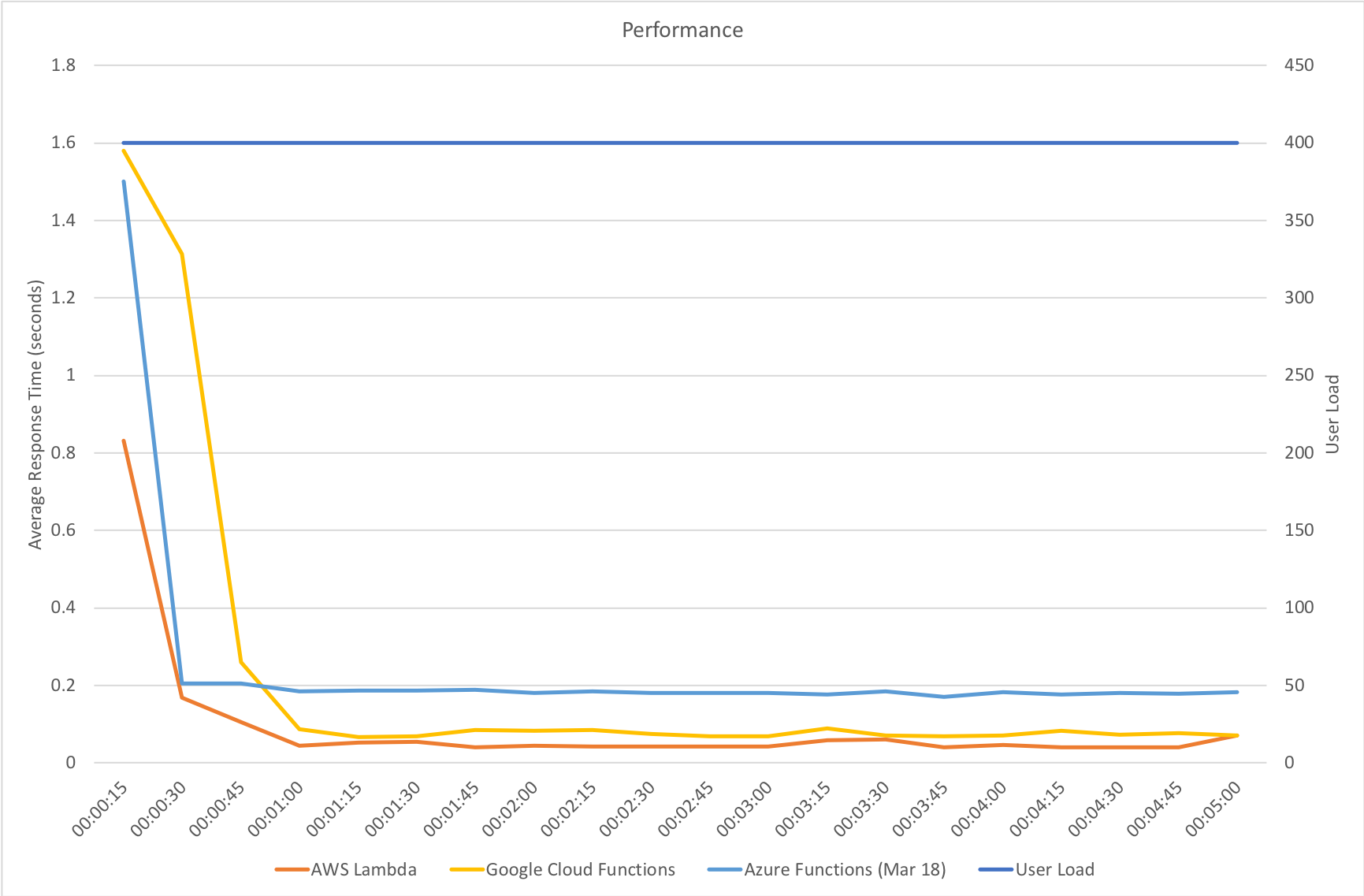

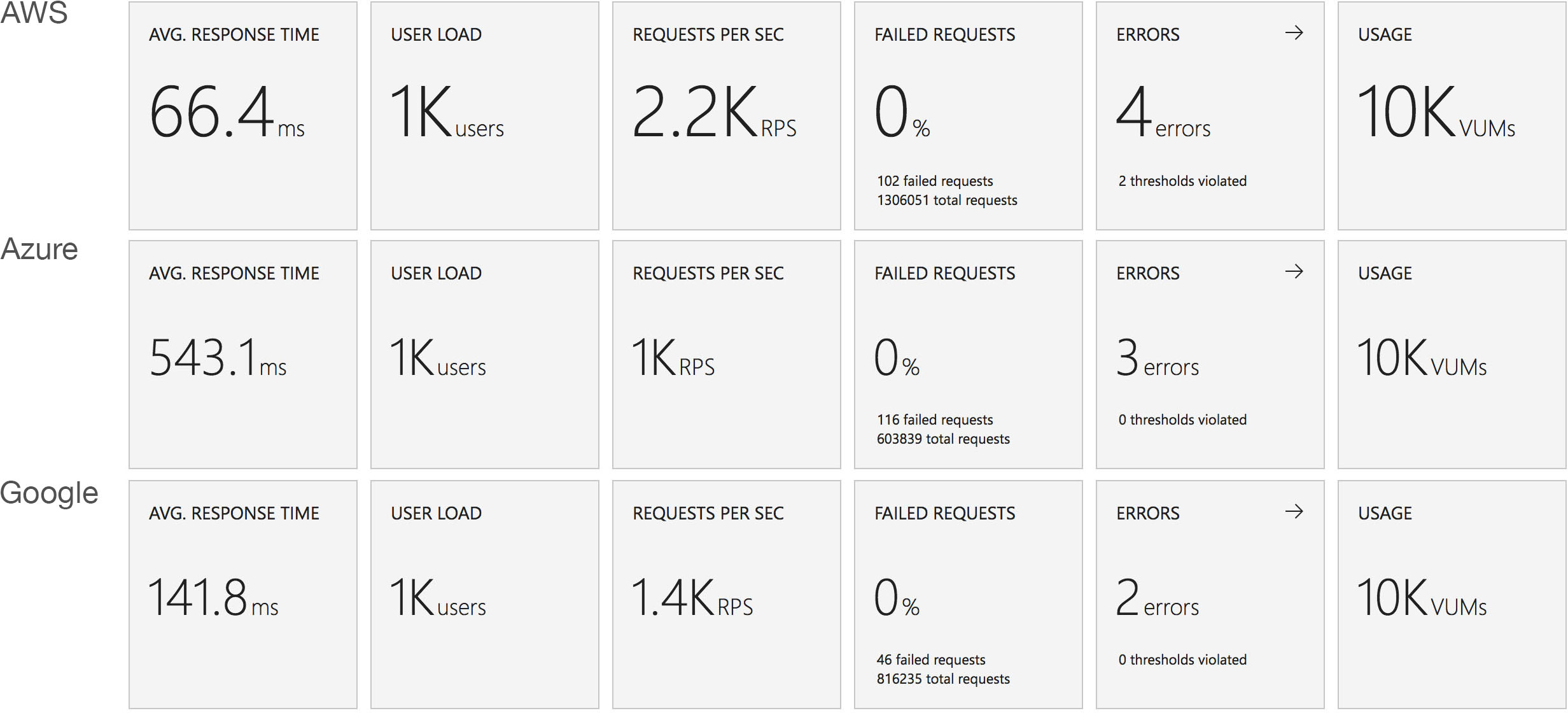

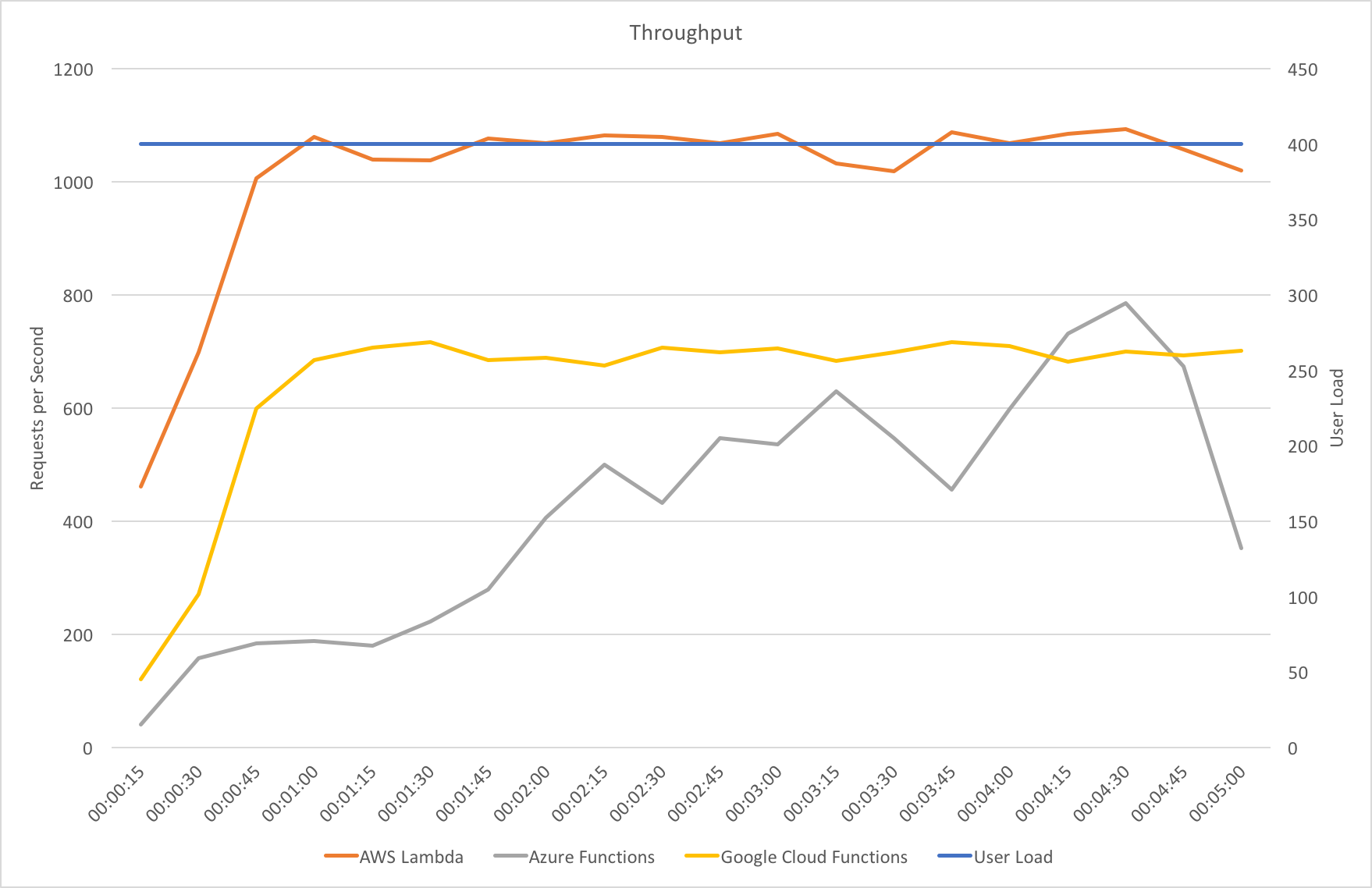

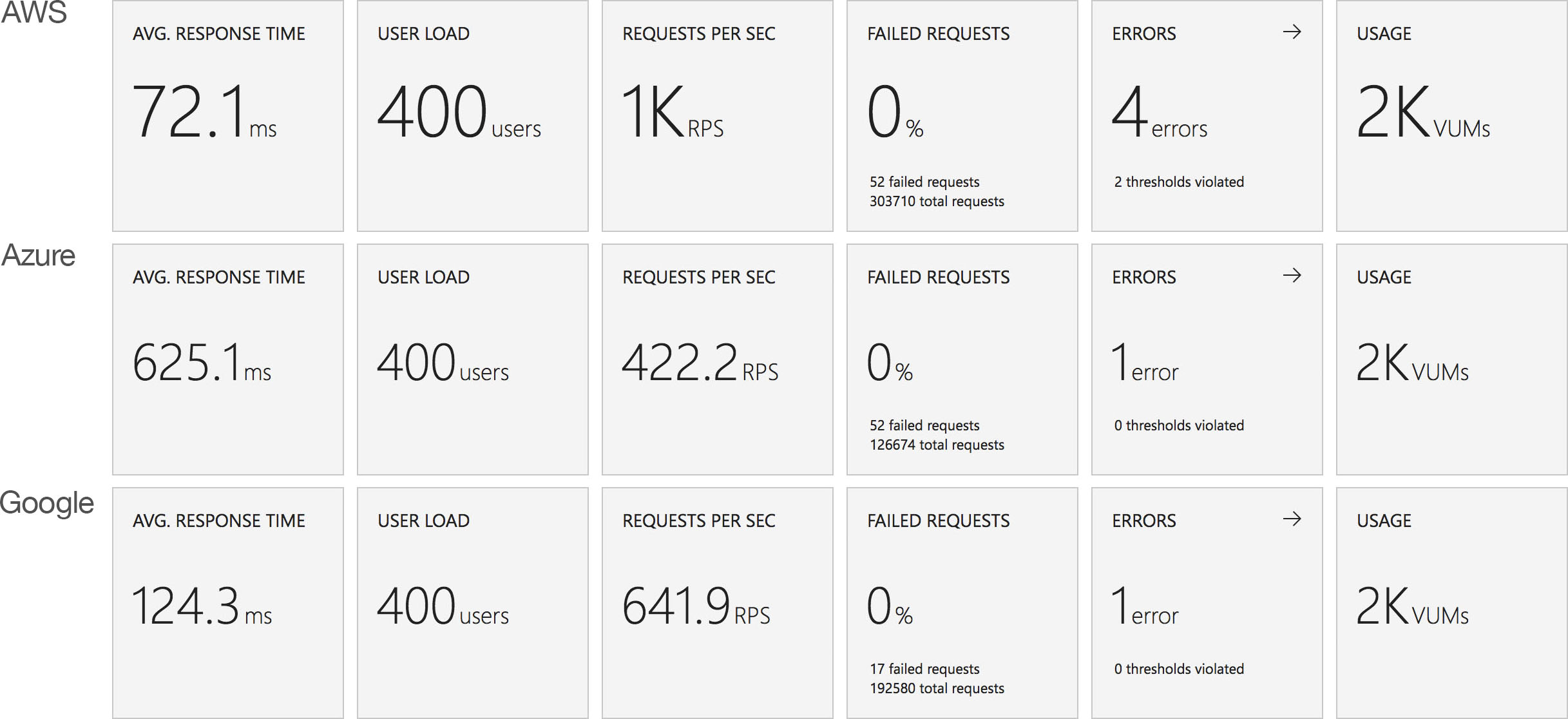

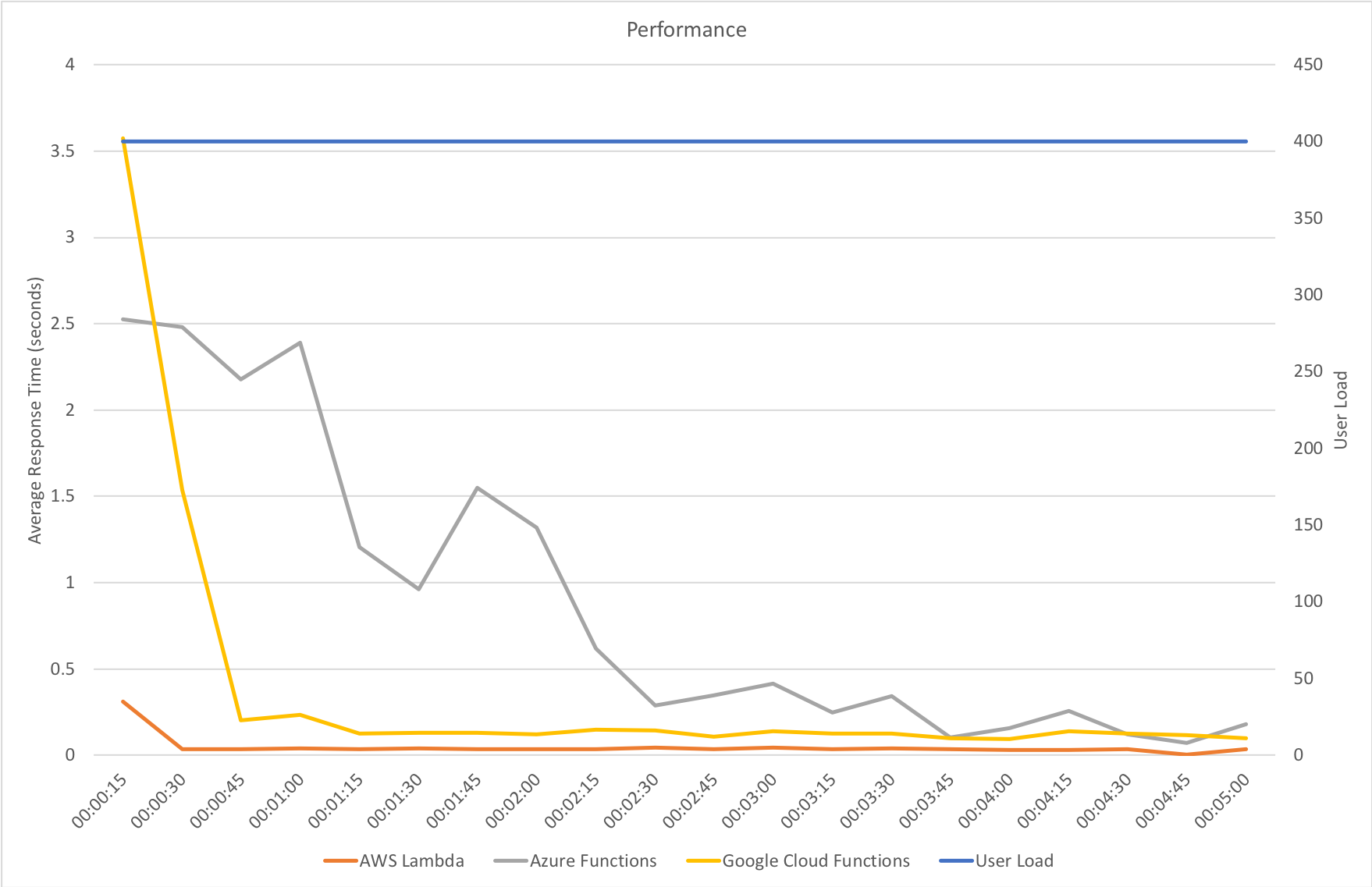

This test case starts immediately with 400 concurrent users and stays at that level of load for 5 minutes demonstrating the response to a sudden spike in demand.

Again this test highlights what a significant improvement has been made in how Azure Functions responds to demand – the new model is able to deal with the sudden influx of users immediately, whereas in January it took nearly the full execution of the test for the system to catch up with the demand.

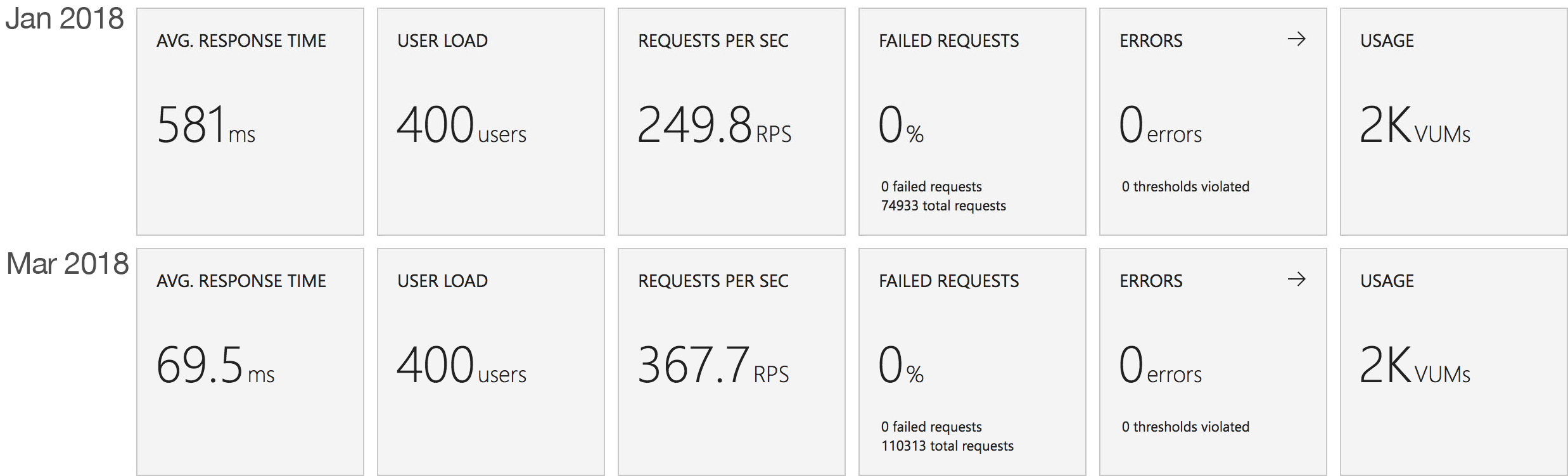

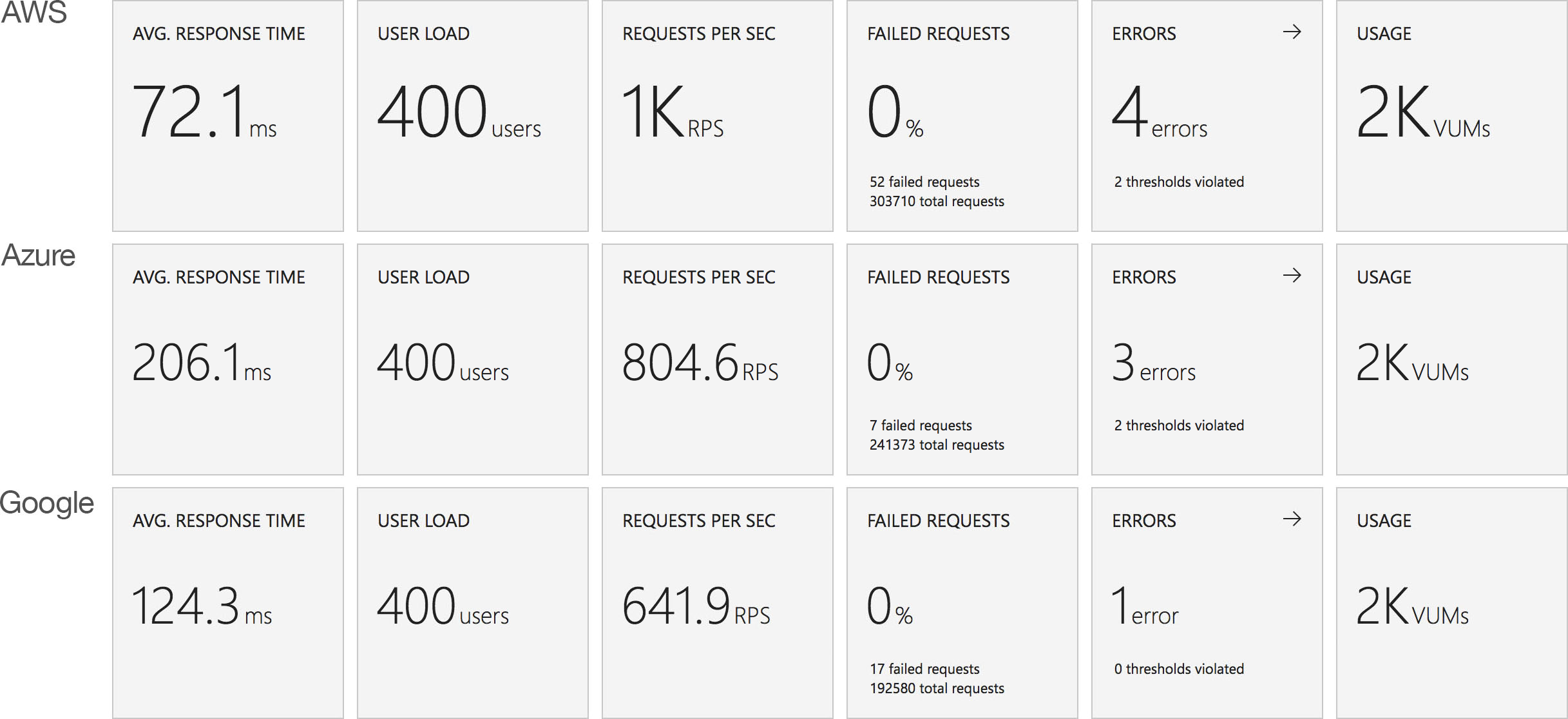

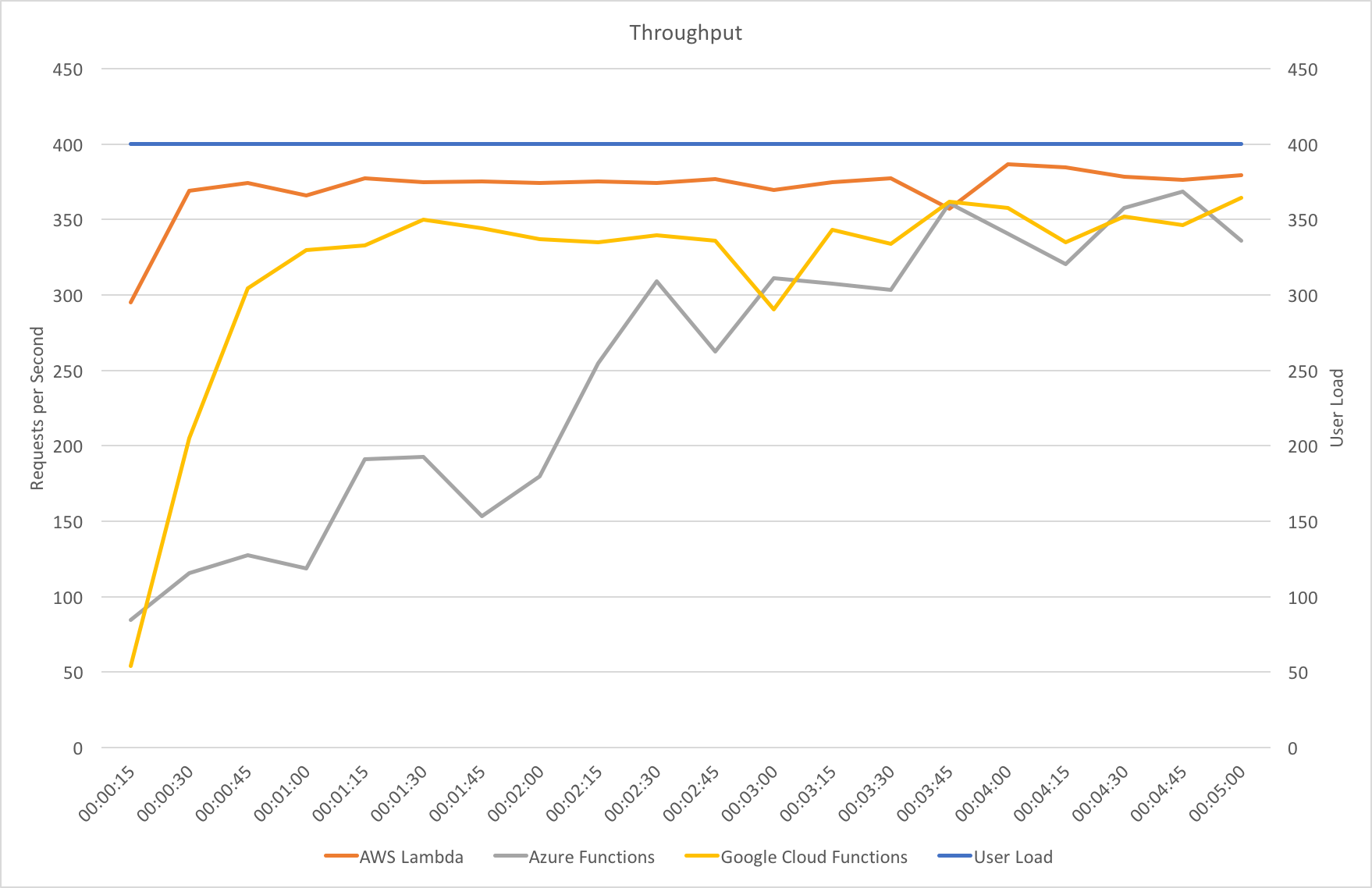

Stock Functions

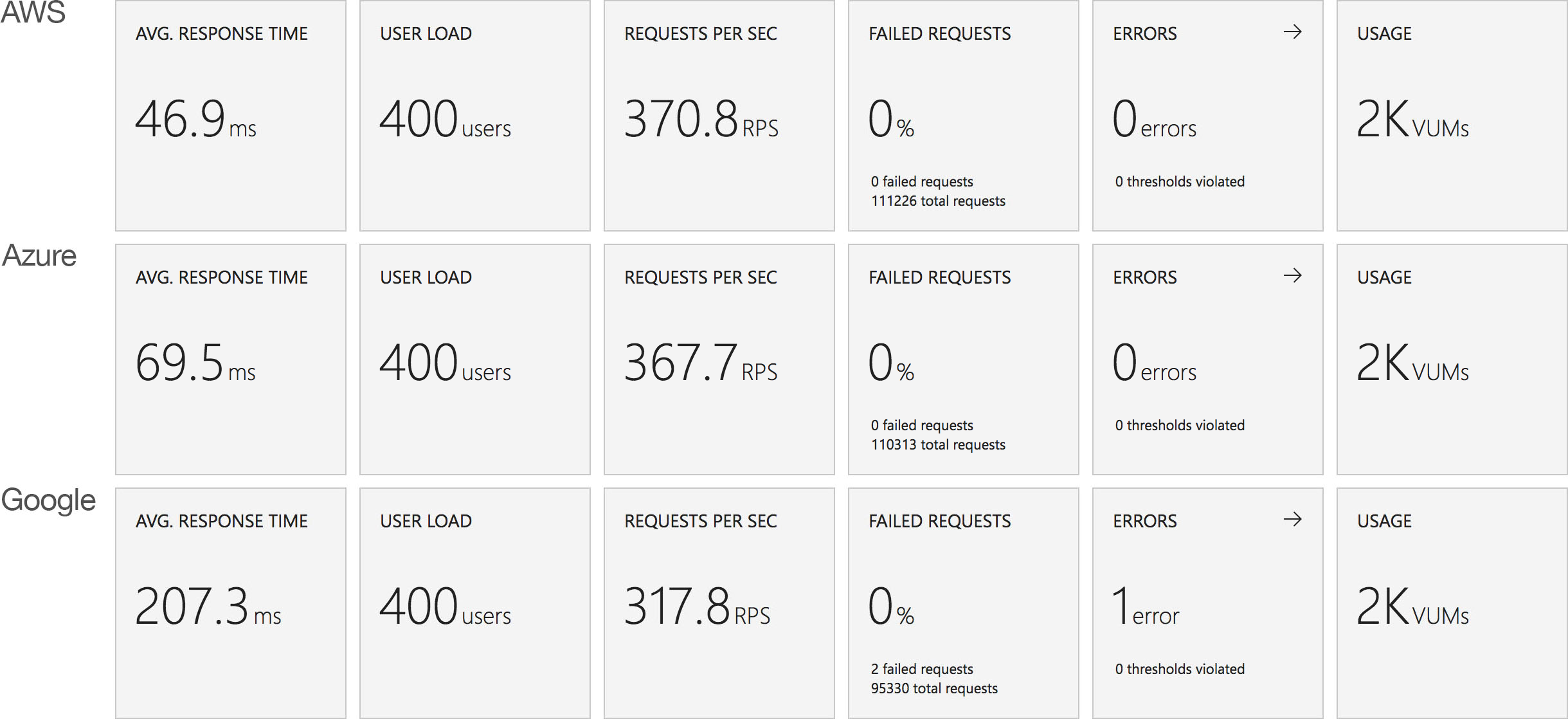

This test uses the stock “return a string” function provided by each platform (I’ve captured the code in GitHub for reference) with the immediate high demand scenario: 400 concurrent users for 5 minutes.

The minimalist nature of this test (return a string) very much highlights the changes made to the Azure Functions hosting model and we can see that not only is there barely any lag in growing to meet the 400 user demand but that response time has been utterly transformed. It’s, to say the least, a significant improvement over what I saw in January when even with essentially no code to execute and no IO to perform Functions suffered from horrendous performance in this test.

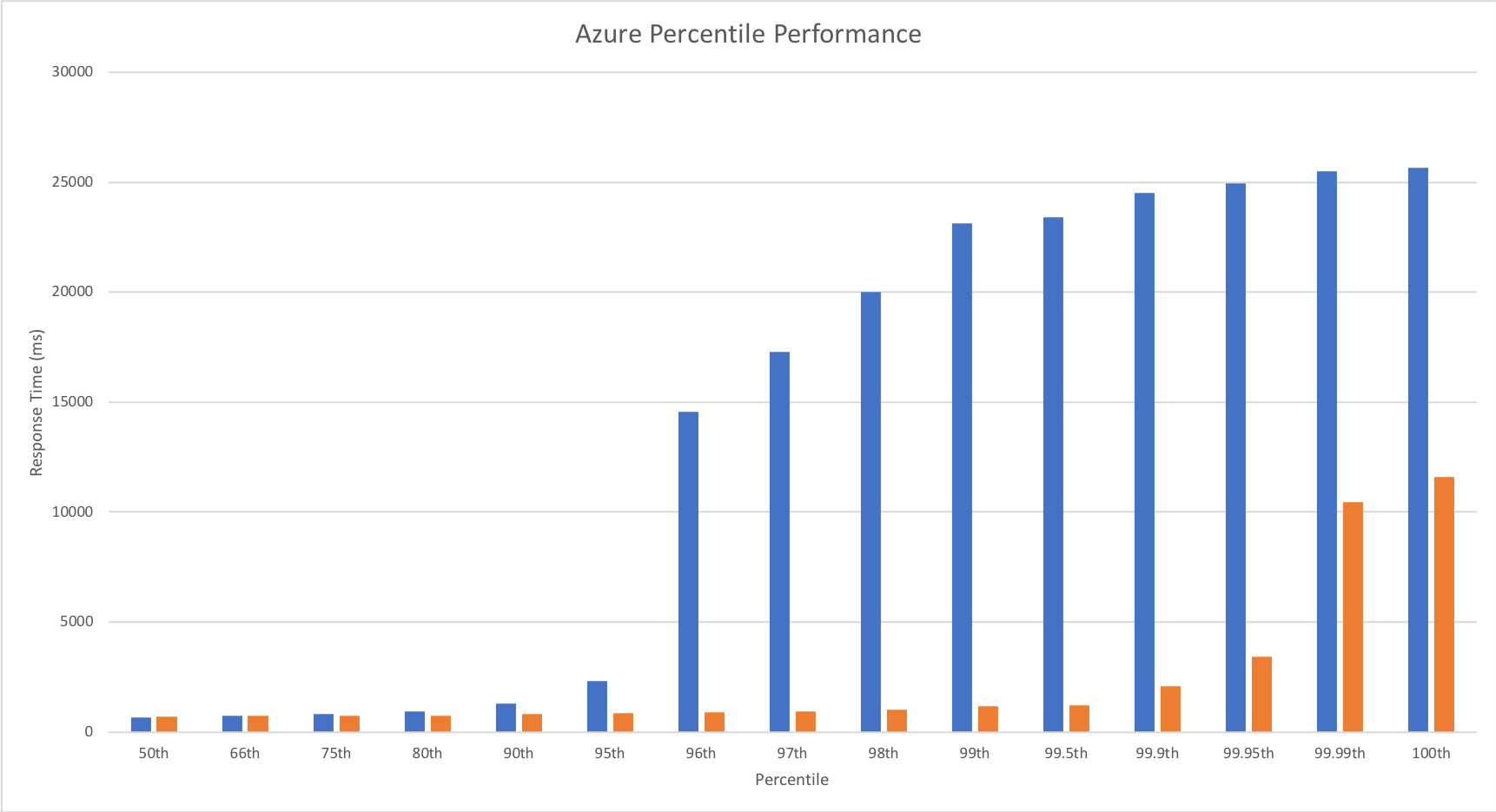

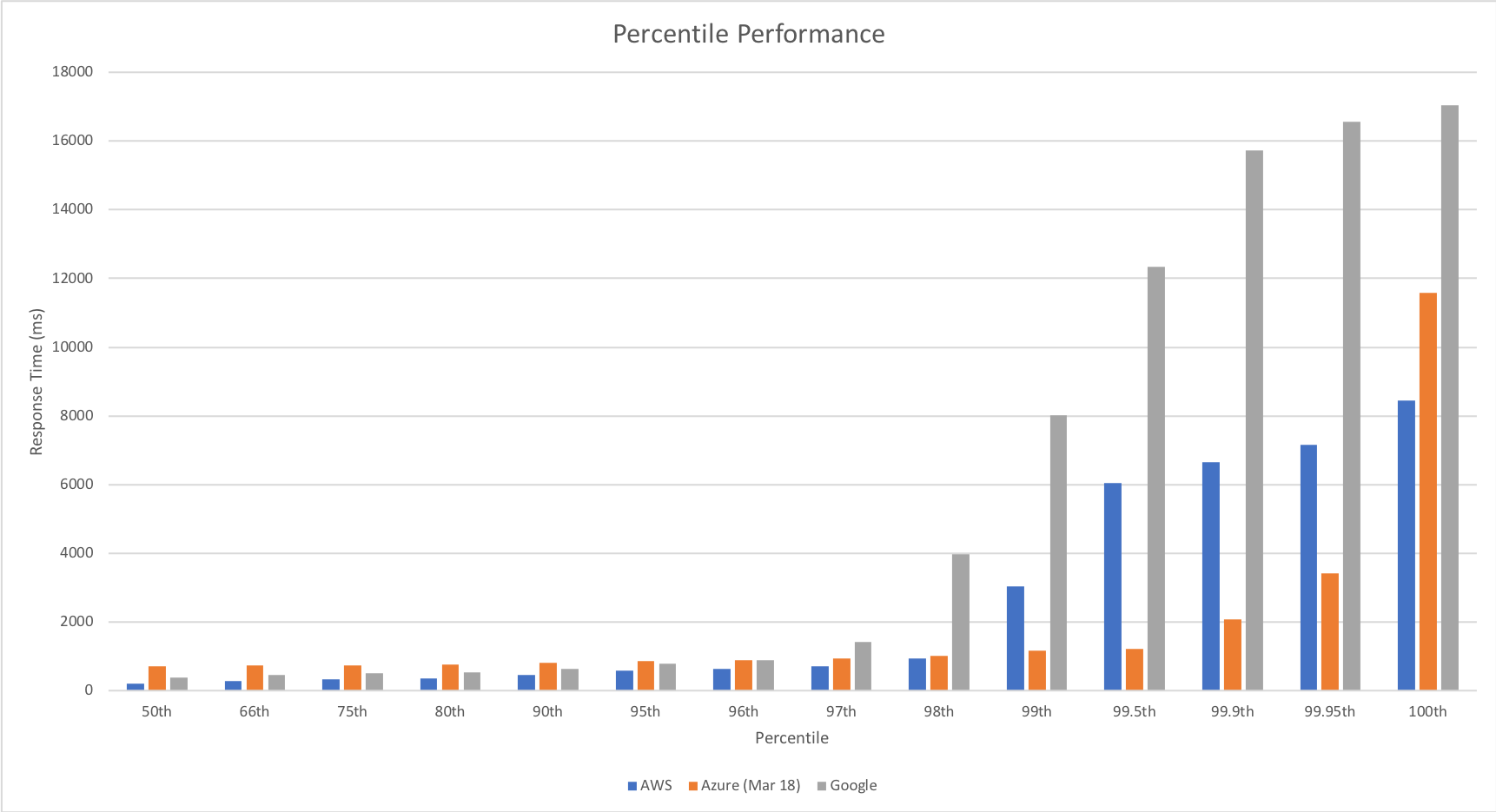

Percentile Performance

I was unable to obtain this data from VSTS and so resorted to running ApacheBench (ab). For this test I used settings of 100 concurrent requests for a total of 10000 requests, collected the raw data, and processed it in Excel. It should be noted that the network conditions were less predictable for these tests and I wasn’t always as geographically close to the cloud function as I was in other tests, though repeated runs yielded similar patterns.

Yet again we can see the massive improvements made by the Azure Functions team – performance remains steady up until the 99.9th percentile. Full credit to the team – the improvement here is so significant that I actually had to add in the fractional percentiles to uncover the fall off.
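I did the percentile processing in Excel, but for anyone wanting to repeat it in code, the following is a minimal Python sketch of the same idea. It assumes you already have the raw response times as a list of numbers; the function names, the nearest-rank method and the chosen percentile points (including the fractional ones) are my own illustrative choices rather than exactly what the spreadsheet did.

```python
import math


def percentile(sorted_values, p):
    """Nearest-rank percentile: the smallest sample such that at least
    p percent of all samples are less than or equal to it."""
    if not sorted_values:
        raise ValueError("no samples")
    # round() guards against floating point noise such as 9990.000000000002
    exact_rank = round(p * len(sorted_values) / 100, 9)
    rank = max(1, math.ceil(exact_rank))
    return sorted_values[rank - 1]


def percentile_table(latencies_ms, points=(50, 90, 95, 99, 99.9, 99.99, 100)):
    """Map each percentile point to the corresponding response time."""
    ordered = sorted(latencies_ms)
    return {p: percentile(ordered, p) for p in points}


if __name__ == "__main__":
    # Synthetic stand-in for 10000 raw response times (not real measurements).
    samples = list(range(1, 10001))
    for point, value in percentile_table(samples).items():
        print(f"p{point}: {value} ms")
```

With 10000 requests, the 99.9th percentile still has 10 samples above it and the 99.99th has just one, which is why the fractional percentiles are only meaningful with a reasonably large run.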

Revised Comparison With Other Vendors

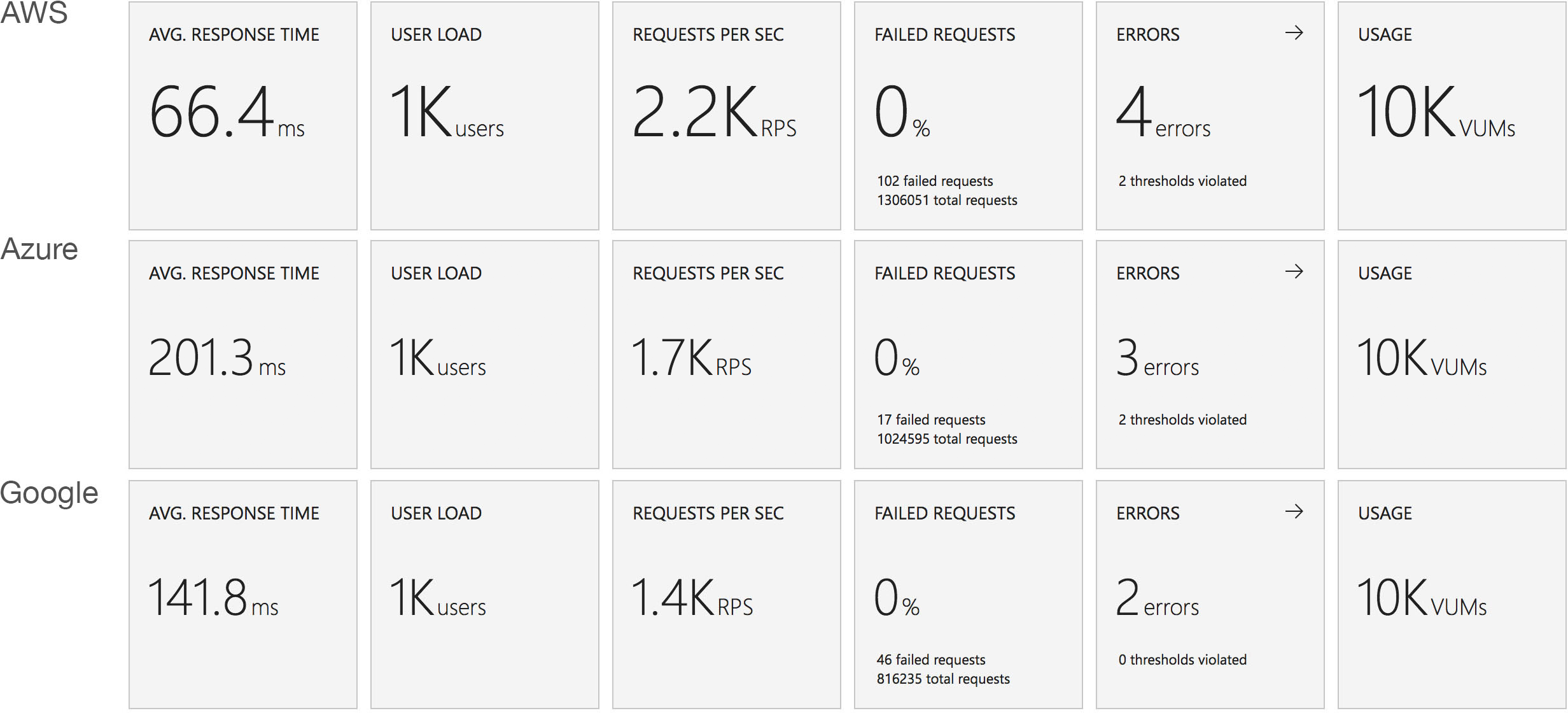

We can safely say by now that this new hosting model for Azure Functions is a dramatic improvement for HTTP triggered functions – but how does it compare with the other vendors? Last time round Functions was barely at the party – this time… let’s see!

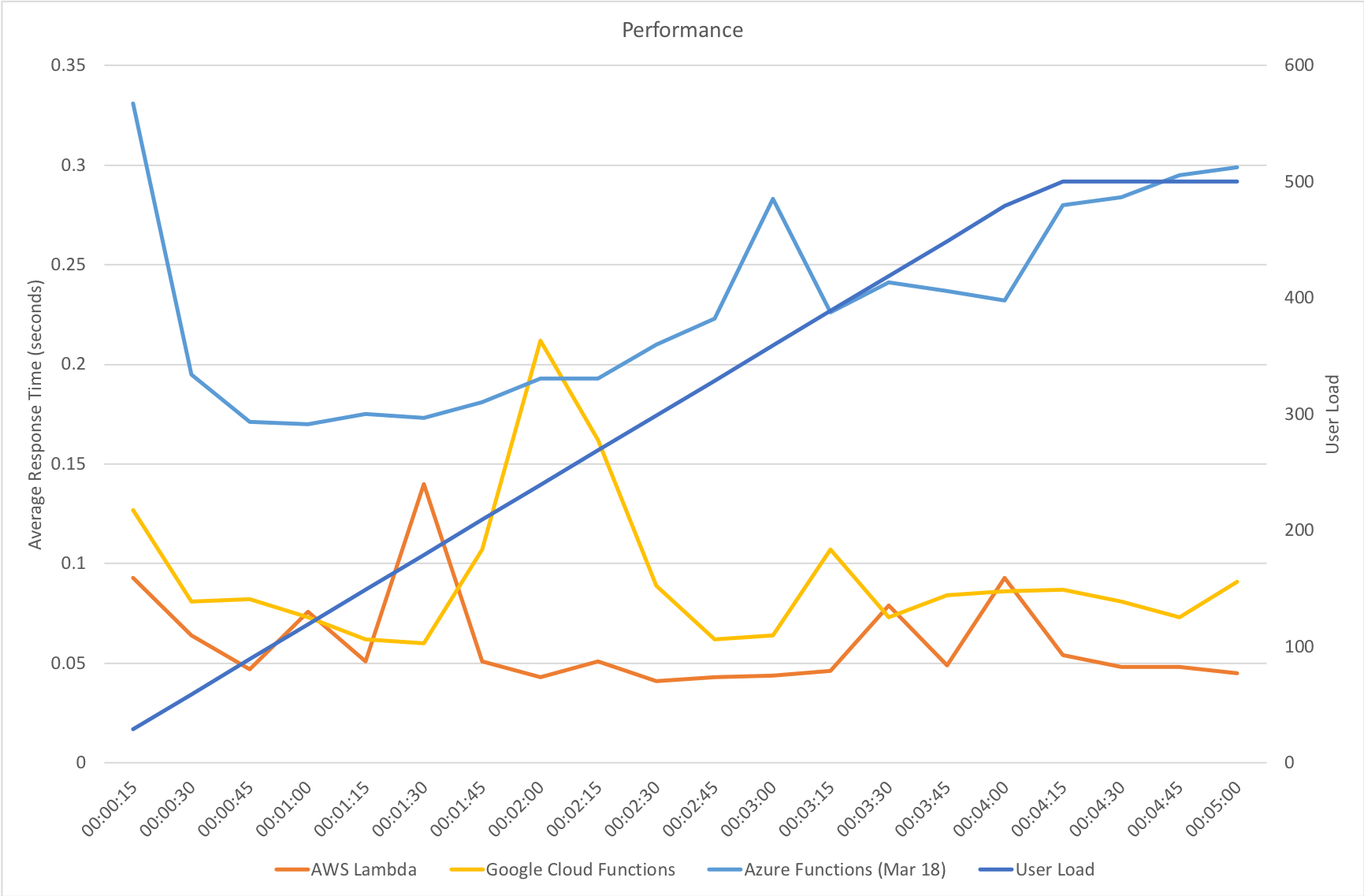

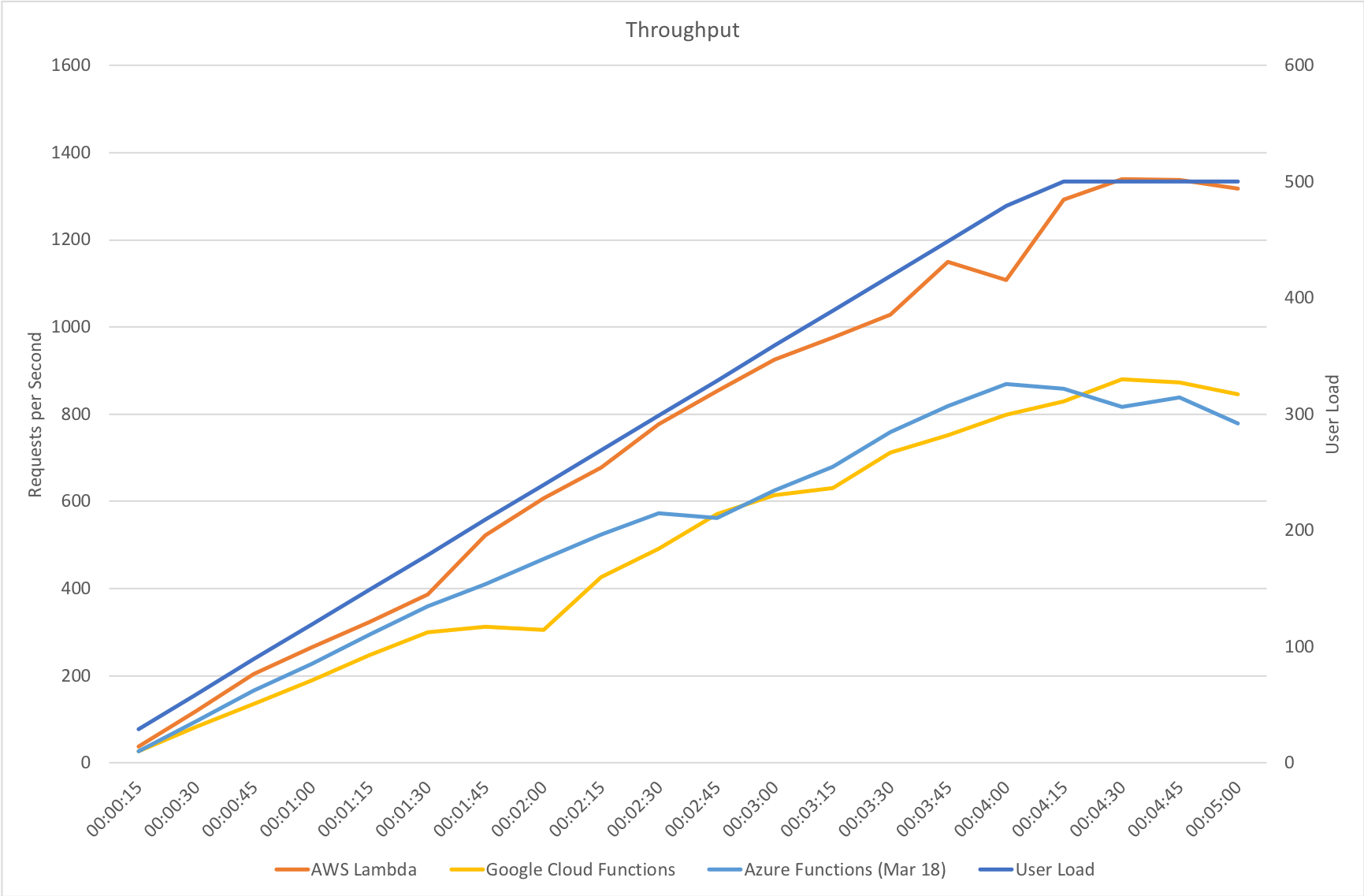

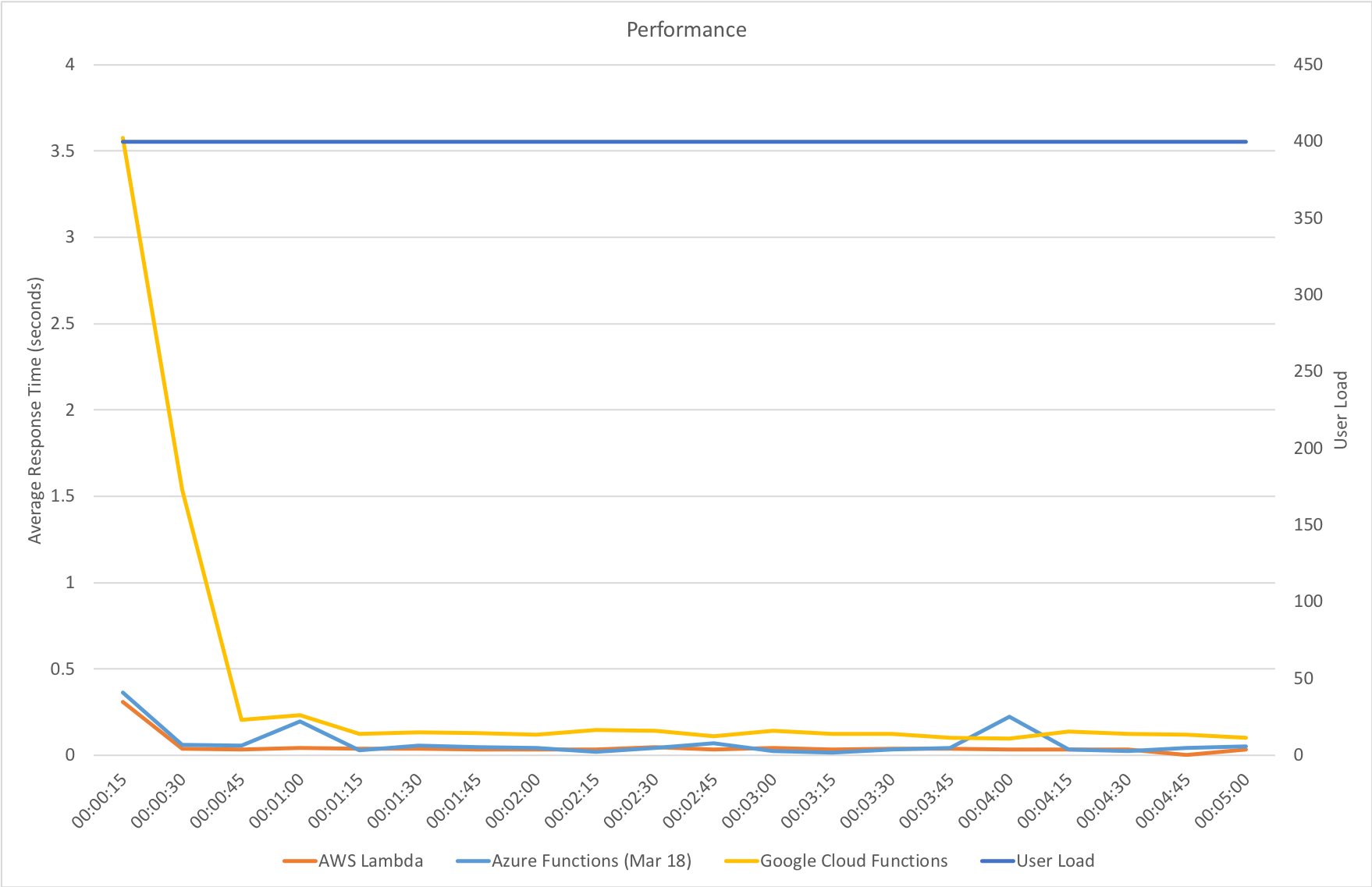

Gradual Ramp Up

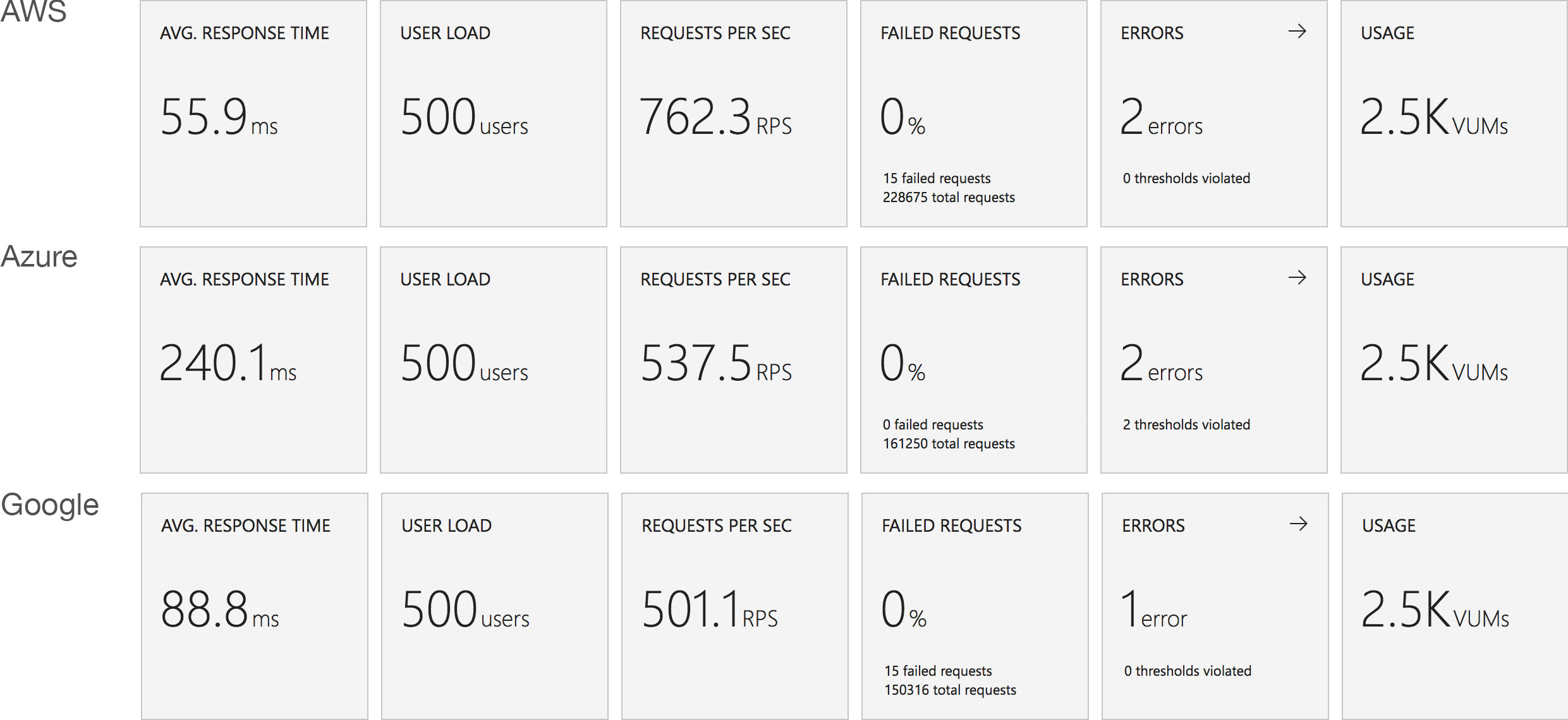

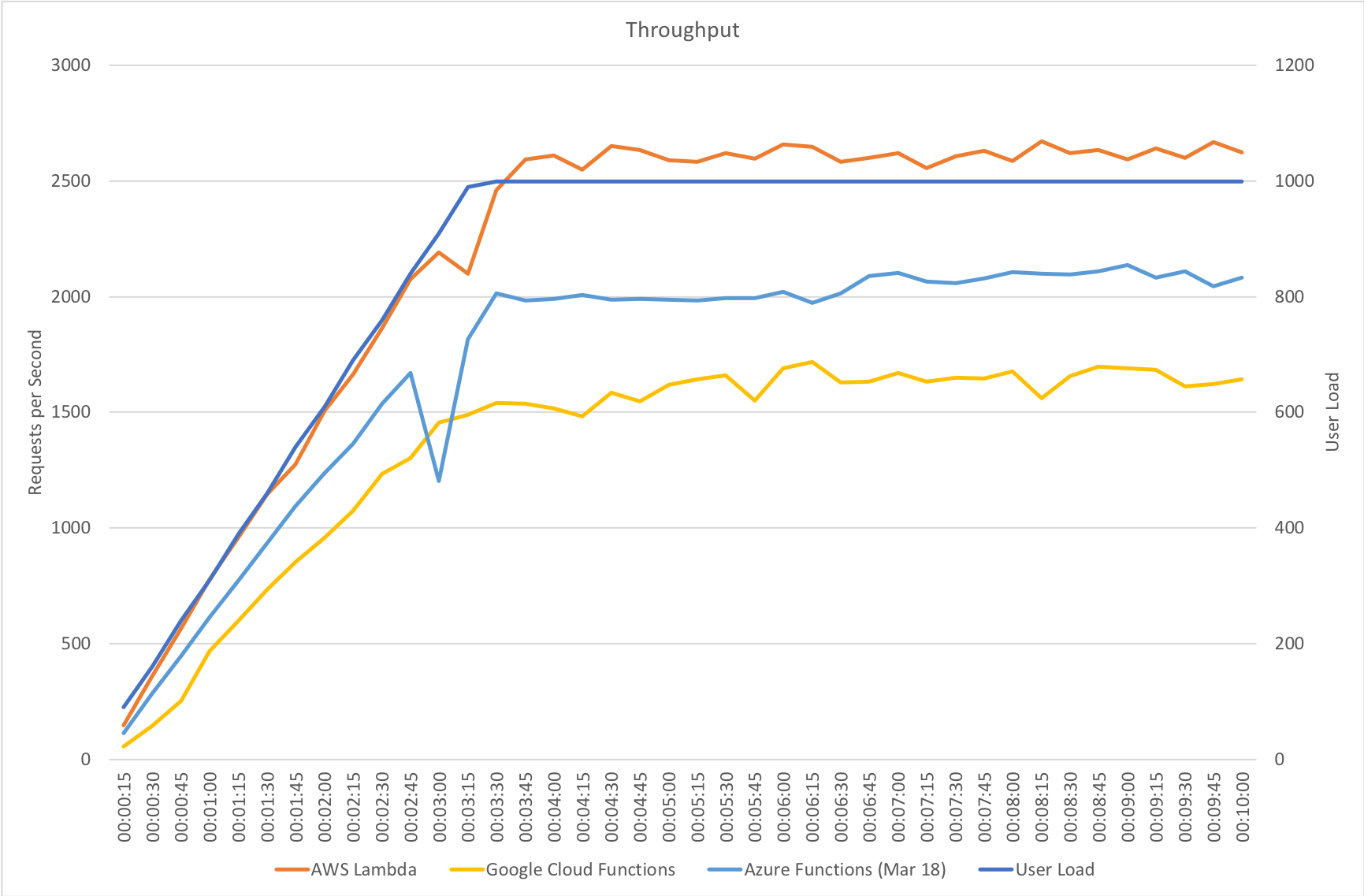

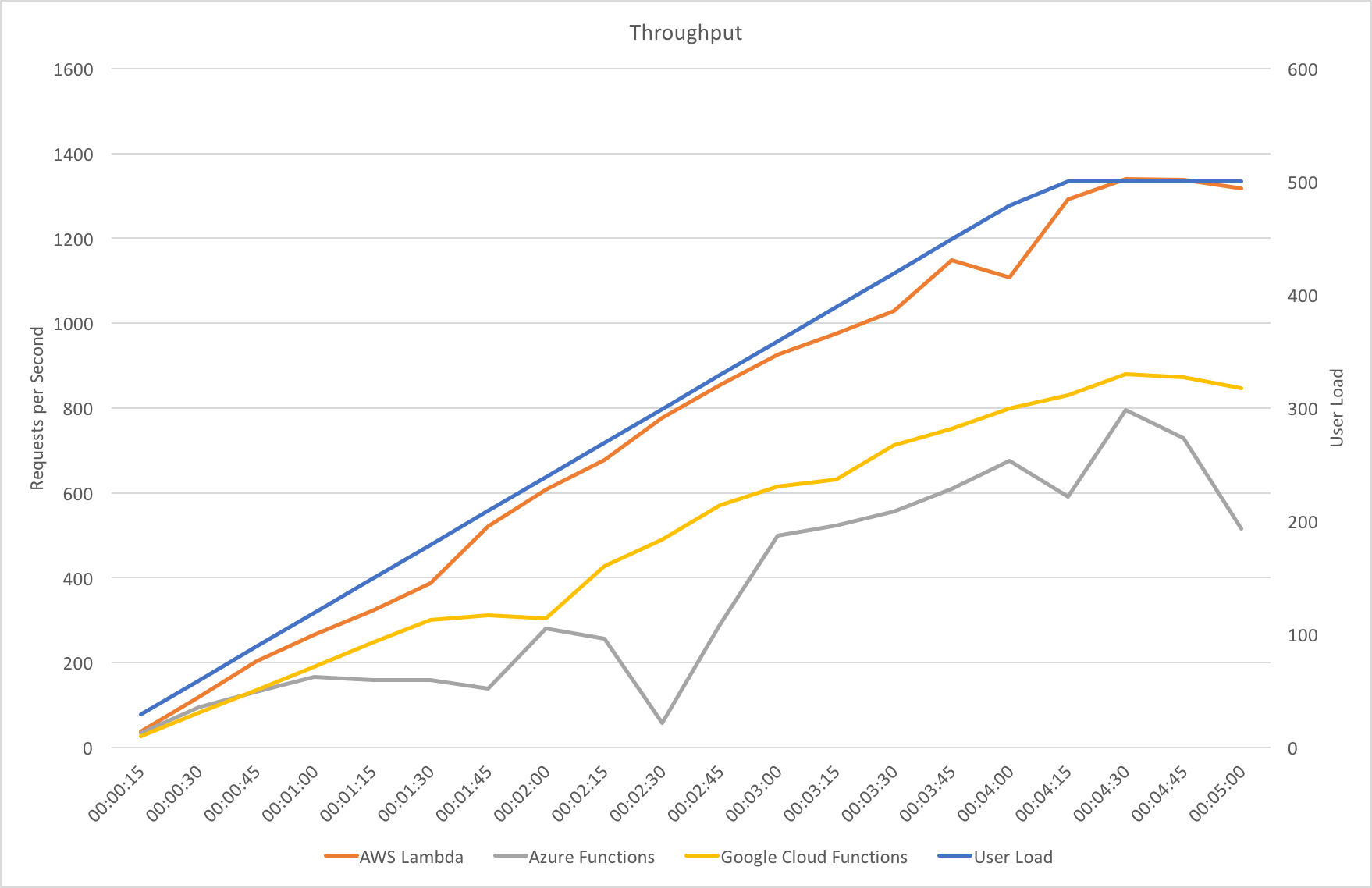

On our gradual ramp up test Azure still lags behind both AWS and Google in terms of response time but actually manages a higher throughput than Google. As demand grows Azure is also experiencing a slight deterioration in response time where the other vendors remain more constant.

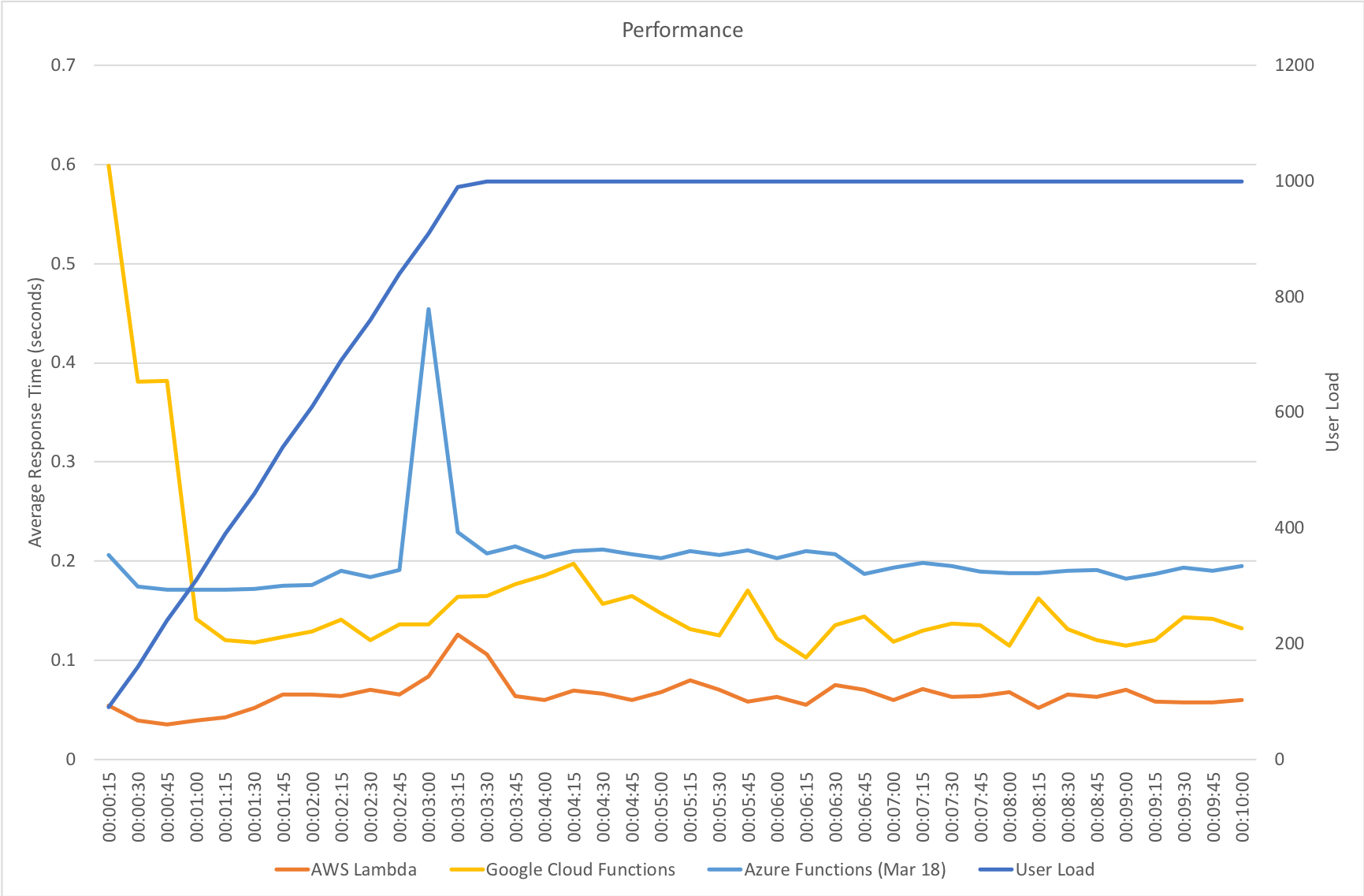

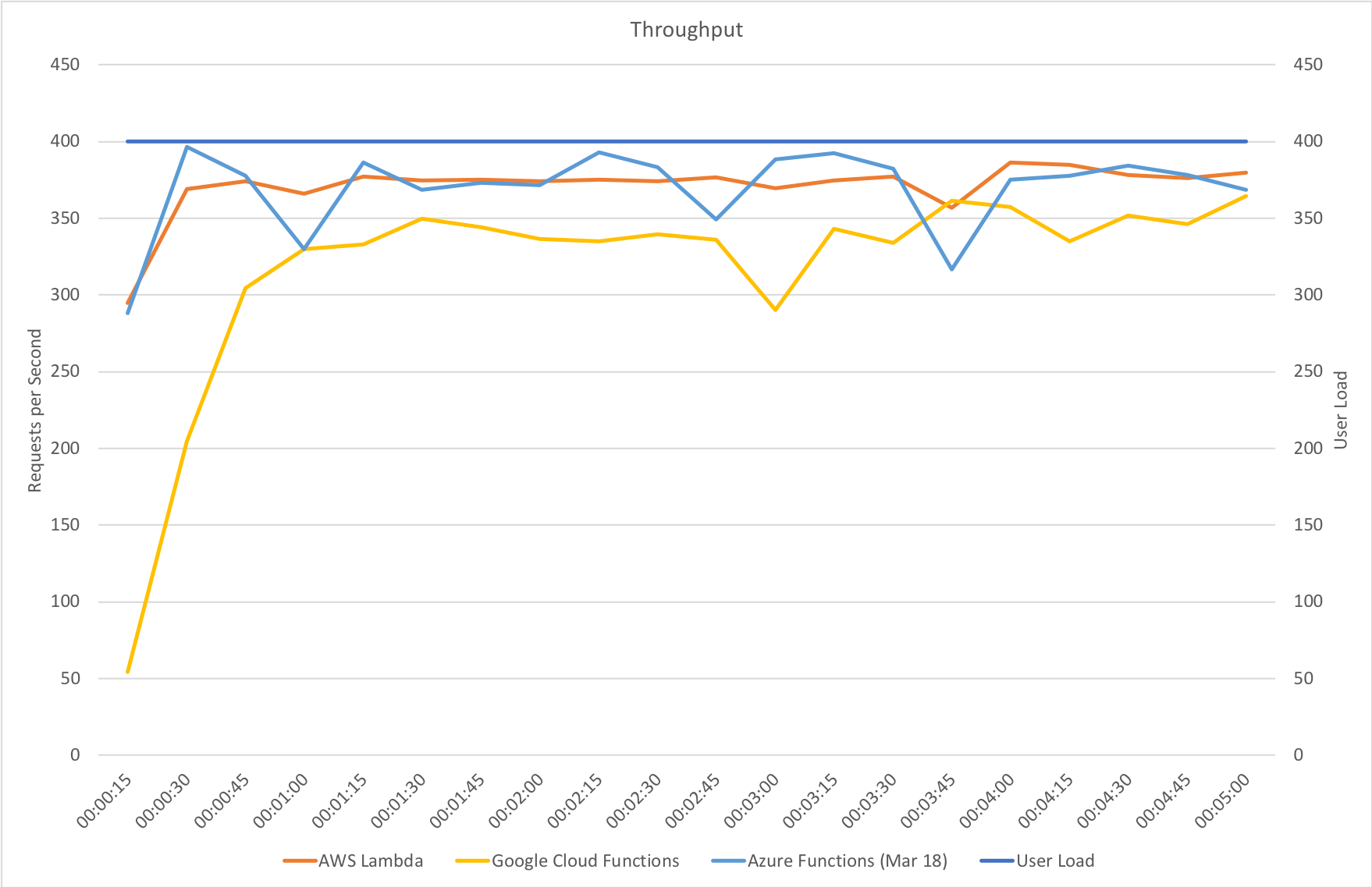

Rapid Ramp Up

Response time and throughput results for our rapid ramp up test are not massively dissimilar to the gradual ramp up test. Azure experiences a significant fall in performance around the 3 minute mark as the number of users approaches 1000 – but as I said earlier the Functions team are working on further improvements at this level of scale and beyond, and I would assume at this point that some form of resource reallocation is causing this and needs smoothing out.

It’s also notable that, although some way behind AWS Lambda, Azure manages a noticeably higher throughput than Google Cloud – in fact it’s almost half way between the two competing vendors, so although response times are longer there seems to be more overall capacity, which could be an important factor in any choice between those two platforms.

Immediate High Demand

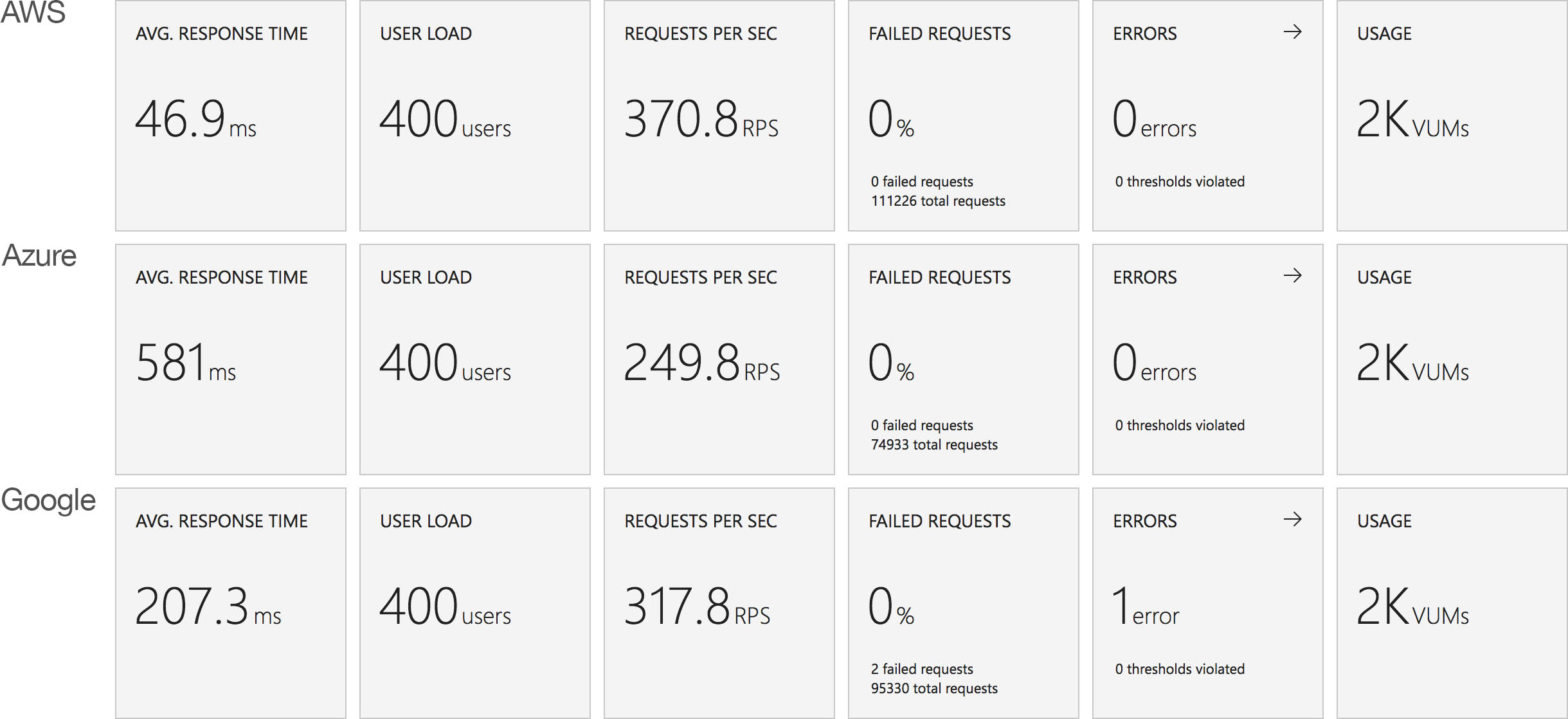

Again we see very much the same pattern – AWS Lambda is the clear leader in both response time and throughput while 2nd place for response time goes to Google and 2nd place for throughput goes to Azure.

Stock Functions

Interestingly in this comparison of stock functions (returning a string and so very isolated) we can see that Azure Functions has drawn extremely close to AWS Lambda and ahead of Google Cloud which really is an impressive improvement.

This suggests that other factors are now playing a proportionally bigger role in the scaling tests than Functions’ capability to scale – previously this was clearly driving the results. Additional tests would need to be run to establish whether this is the case and whether it is related to the IO capabilities of the Functions host or the capabilities of external dependencies.

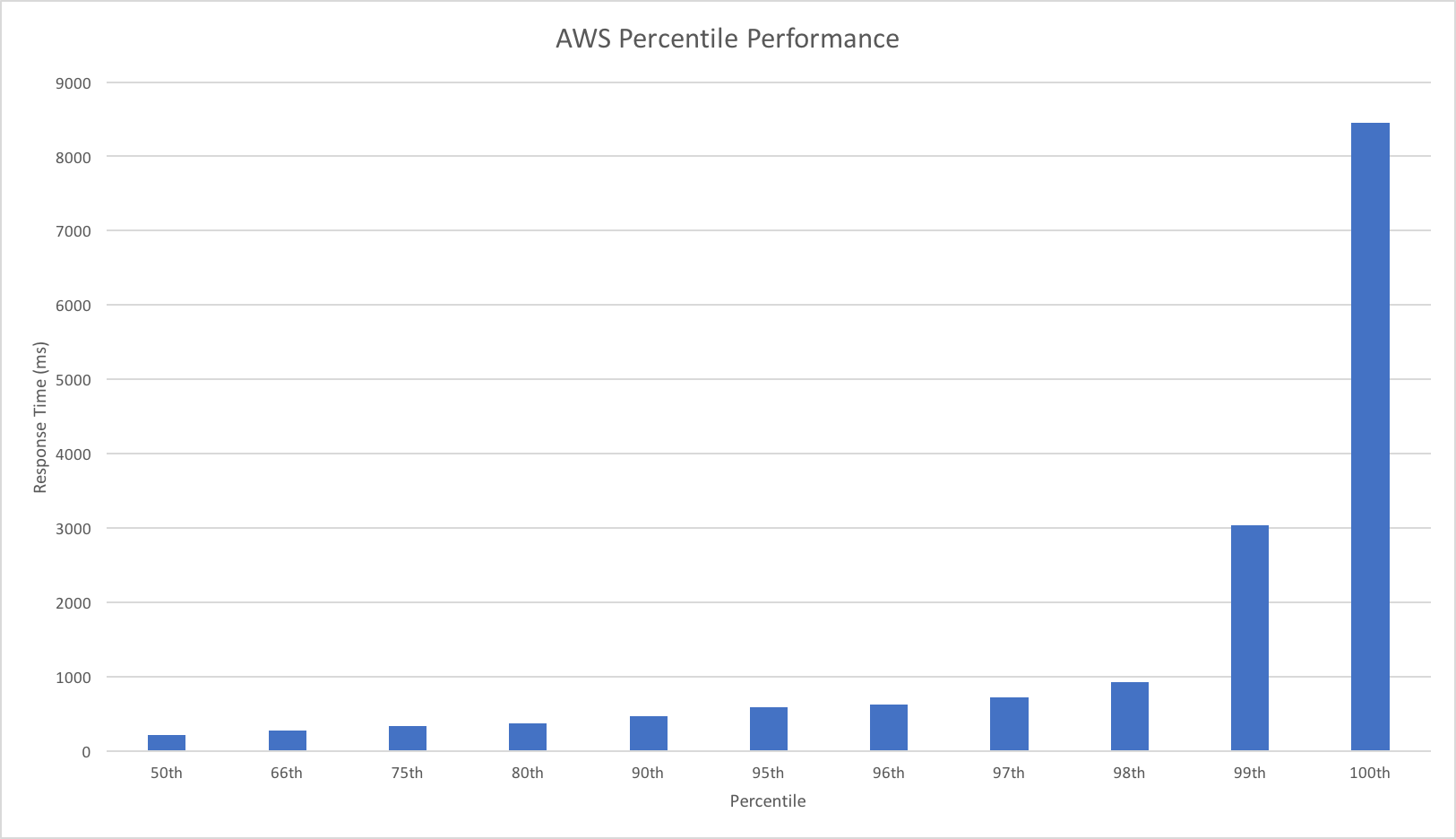

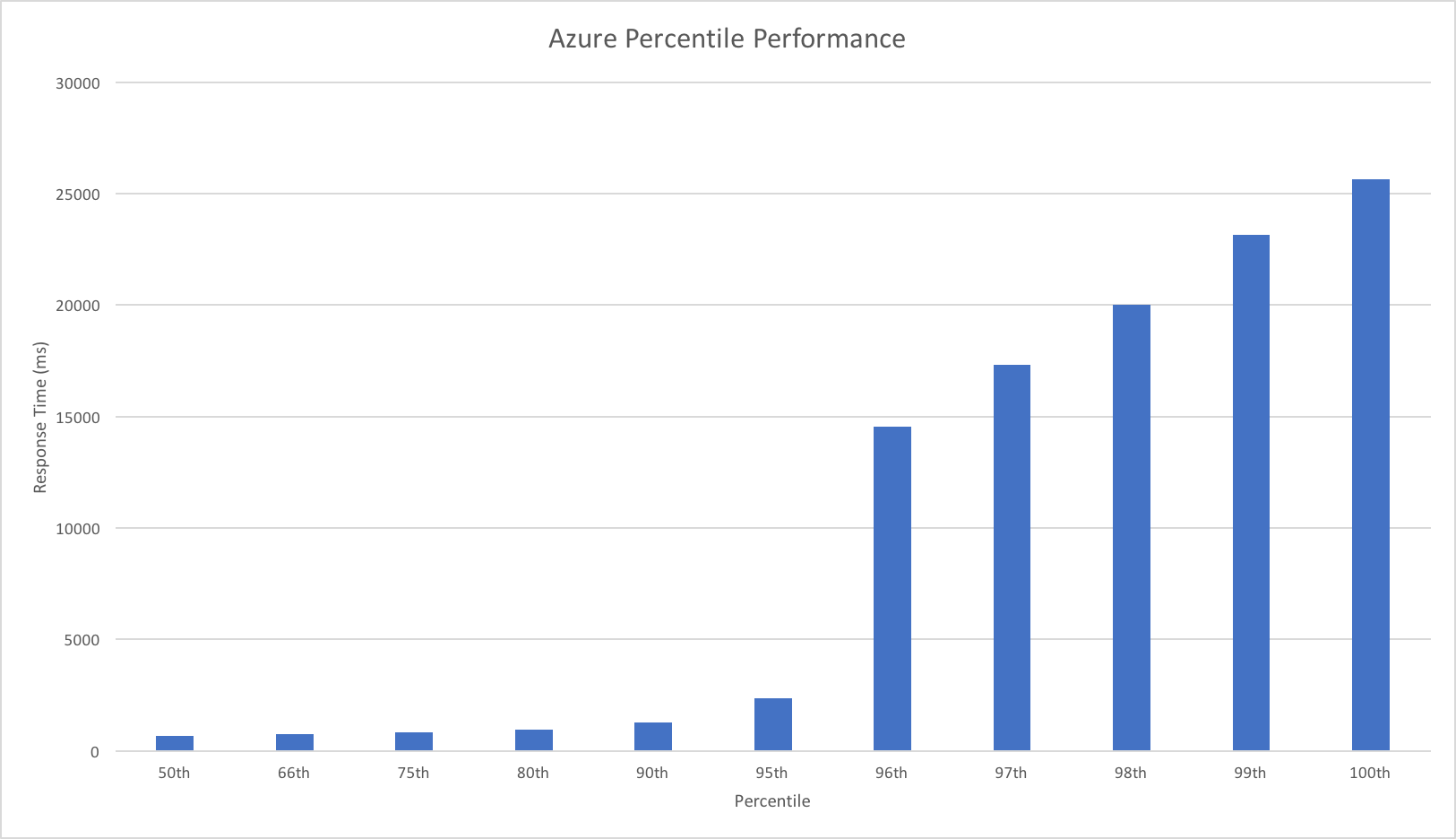

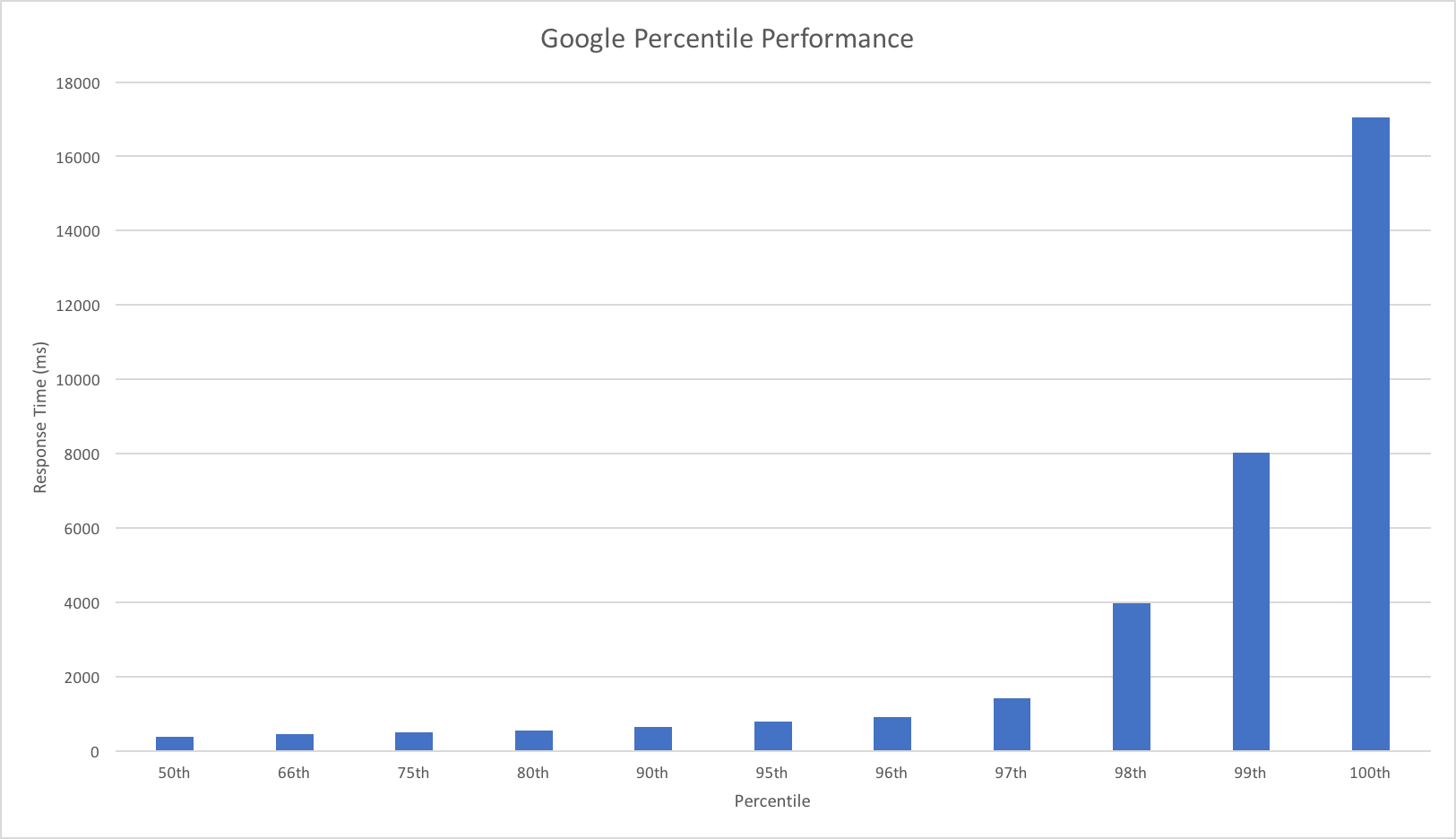

Percentile Performance

The percentile comparison shows some very interesting differences between the three platforms. At lower percentiles AWS and Google outperform Azure; however, as we head into the later percentiles they both deteriorate while Azure deteriorates more gradually, with the exception of the worst case response time.

Across the graph Azure gives a more generally even performance suggesting that if consistent performance across a broader percentile range is more important than outright response time speed it may be a better choice for you.

Enabling The Improvements

The improvements I’ve measured and highlighted here are not yet enabled by default, but will be with the next release. In the meantime you can give them a go by adding an App Setting named WEBSITE_HTTPSCALEV2_ENABLED with a value of 1.

Conclusions

In my view the Azure Functions team have done some impressive work in a fairly short space of time to transform the performance of Azure Functions triggered by HTTP requests. Previously the poor performance made them difficult to recommend except in a very limited range of scenarios but the work the team have done has really opened this up and made this a viable platform for many more scenarios. Performance is much more predictable and the system scales quickly to deal with demand – this is much more in line with what I’d hoped for from the platform.

I was sceptical about how much progress was possible without significant re-architecture but, as an Azure customer and someone who wants great experiences for developers (myself included), I’m very happy to have been wrong.

In the real world representative tests there is still a significant response time gap for HTTP triggered compute between Azure Functions and AWS Lambda; however, it is not clear from these tests alone whether this is related to Functions or to other Azure components. Time allowing I will investigate this further.

Finally my thanks to the @azurefunctions team, @jeffhollan and @davidebbo both for their work on improving Azure Functions but also for the ongoing dialogue we’ve had around serverless on Azure – it’s great to see a team so focused on developer experience and transparent about the platform.

If you want to discuss my findings or tech in general then I can be found on Twitter: @azuretrenches.
