Azure’s table storage service allows for highly scalable and reliable access to large quantities of data, but if you come from a SQL background it can seem very primitive: there is essentially no support for transactions (OK, you can transact a batch, but that’s not often that useful) and only support for optimistic concurrency within Table Storage itself. You can’t do much about the former, though there are some strategies you can adopt that help (a topic for a future blog post), but there is a technique you can use if optimistic concurrency isn’t good enough and you want exclusive access to table storage resources for a period of time: essentially obtaining a lock.

The trick is in coupling table storage with blob storage to take advantage of the leasing functionality available on blobs. I frequently use this technique when I want to access or perform an update on data across multiple tables and be certain the data is going to be consistent.

There is a simple example hosted on GitHub, and I’m going to highlight some of its code to illustrate how this approach works in practice.

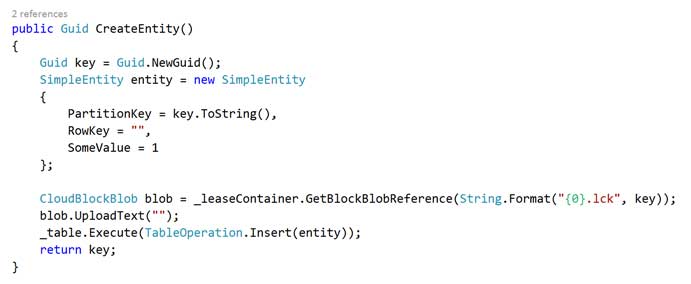

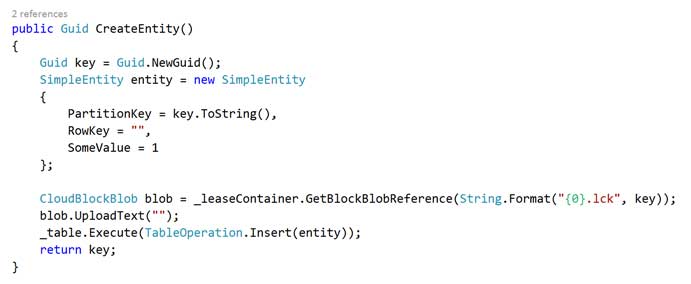

Firstly we need to create a table entry and a blob to go with it:

You can see that this is fairly standard code for uploading a blob and inserting an entity; however, note that we’ve given the blob a name that matches the key of our table entity. We have no row key, but if you did, you’d form the blob name from the composite of the partition key and row key (unless you were interested in locking a range).
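The naming convention can be sketched as follows. This is a small Python illustration rather than the sample’s own code: the helper name `lease_blob_name` and the `/` separator are assumptions, chosen because `/` is not permitted in table keys and so cannot make two different key pairs collide.

```python
def lease_blob_name(partition_key, row_key=None):
    """Hypothetical helper: derive the lease blob's name from the entity key."""
    if row_key is None:
        # No row key (or locking a whole partition): the partition key alone.
        return partition_key
    # Composite key: "/" is an assumed separator, not something the post specifies.
    return f"{partition_key}/{row_key}"


print(lease_blob_name("order-42"))
print(lease_blob_name("customer-7", "order-42"))
```

Locking a range then amounts to leasing the blob named after the partition key alone.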

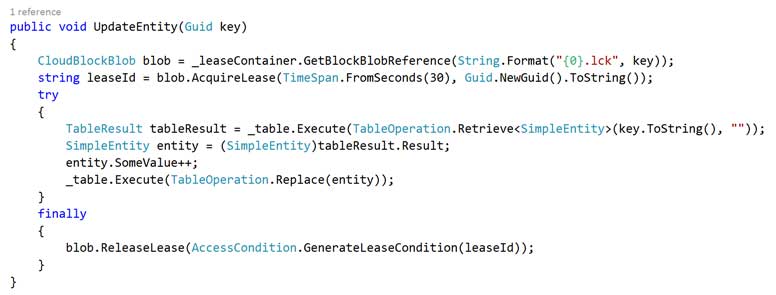

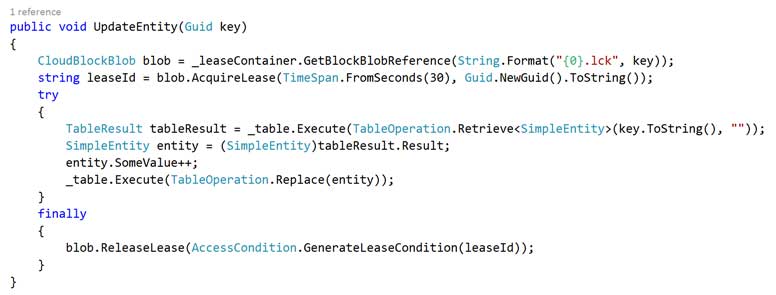

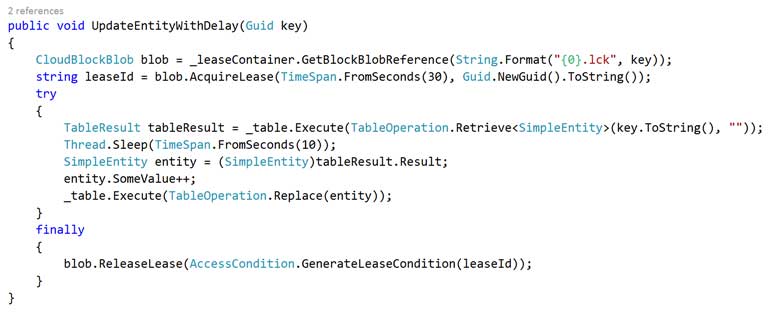

Now let’s look at the code for a lease-protected table access:

The code inside the try block is the fairly familiar-looking code for accessing and updating entities in table storage. However, before we access table storage, we get a reference to our blob, again using the entity’s key as the name, and then call AcquireLease on the blob.

Importantly we do this with a timespan. It’s possible to indefinitely acquire a lease on a blob but this is not usually a good idea: if you suffer a crash (either your code or an Azure failure) you’re going to have a real problem on your hands as the blob will be leased by something that no longer exists.
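To make the acquire, work, release flow concrete, here is a toy in-memory model in Python. It is not the Azure SDK and not the sample’s code: `InMemoryLeases`, `LeaseConflict` and `update_with_lease` are hypothetical names, and a plain dictionary stands in for the table.

```python
import time
import uuid


class LeaseConflict(Exception):
    """Stands in for the 409 Conflict returned when a lease is already held."""


class InMemoryLeases:
    """Toy model: one holder per blob name until the lease is released or expires."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._held = {}  # blob name -> (lease id, expiry time)

    def acquire(self, blob_name, seconds):
        now = self._clock()
        current = self._held.get(blob_name)
        if current is not None and current[1] > now:
            raise LeaseConflict(blob_name)
        lease_id = str(uuid.uuid4())
        self._held[blob_name] = (lease_id, now + seconds)
        return lease_id

    def release(self, blob_name, lease_id):
        current = self._held.get(blob_name)
        if current is not None and current[0] == lease_id:
            del self._held[blob_name]


def update_with_lease(leases, table, key, change, seconds=30):
    """Acquire the lease named after the key, apply the change, always release."""
    lease_id = leases.acquire(key, seconds)
    try:
        table[key] = change(table[key])
    finally:
        leases.release(key, lease_id)


leases = InMemoryLeases()
table = {"order-42": {"count": 1}}
update_with_lease(leases, table, "order-42", lambda e: {**e, "count": e["count"] + 1})
print(table["order-42"])  # {'count': 2}
```

Because every lease in this model carries an expiry time, a holder that dies without releasing only blocks others until that time passes, which is the reason for preferring a timed lease over an indefinite one.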

It’s important to consider how long you want the lease for, thinking hard about retry policies and how long a series of operations could theoretically take. This is an extremely simple example, but let’s assume you were updating two tables: how long could that take? Normally milliseconds, assuming you’ve keyed your tables well. But let’s assume both operations require a significant retry period. The default retry policy for the storage client (on version 2 through to 3.0.3.0) has a maximum duration of 120 seconds. So if all your operations (read table 1, read table 2, write table 1, write table 2) succeed but are at the upper range of this threshold, then you are looking at around 480 seconds (4 × 120) for it to fully complete. In my experience this is unlikely, but it does happen.
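The worst-case figure is simple arithmetic, spelled out here in Python. The 120 seconds is the per-operation maximum quoted above; the variable names are just for illustration.

```python
# Per-operation ceiling quoted in the text for the default retry policy.
MAX_RETRY_SECONDS_PER_OPERATION = 120

operations = ["read table 1", "read table 2", "write table 1", "write table 2"]

# Every operation succeeds, but only at the very end of its retry window.
worst_case_seconds = MAX_RETRY_SECONDS_PER_OPERATION * len(operations)
print(worst_case_seconds)  # 480
```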

So to cover this, let’s say you wanted your lease to last 490 seconds. That would cover the total possible operation time, but if there is an issue and your lease doesn’t get released due to an application crash (or Azure issue), then the entity you are attempting to lock cannot be updated again until the full 490 seconds have passed. Be aware, too, that the Blob service only accepts finite lease durations of between 15 and 60 seconds, so in practice a window this long has to be built up by renewing a shorter lease. You can mitigate an application error with a finally block, as in this sample code, but that won’t help you if your process dies.

Another option open to you is to renew the lease between operations. There is a method on the blob called RenewLease that does exactly what it says on the tin. This can be an effective, if messy-looking, solution, but it does come with a performance penalty. Just as acquiring a lease in the first place takes time, renewing a lease does too. In most cases it is extremely quick, but you should be prepared for variance.
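Renewing between operations can be sketched like this. Again this is a toy Python model rather than the storage client: `TimedLease` and `run_steps` are hypothetical names, and treating a lapsed lease as lost is a simplifying assumption of the model.

```python
import time


class TimedLease:
    """Toy lease that lapses `seconds` after it was last acquired or renewed."""

    def __init__(self, seconds, clock=time.monotonic):
        self._seconds = seconds
        self._clock = clock
        self._expires_at = clock() + seconds

    @property
    def expired(self):
        return self._clock() >= self._expires_at

    def renew(self):
        # Model assumption: once the lease has lapsed, exclusivity is gone.
        if self.expired:
            raise RuntimeError("lease lapsed before it could be renewed")
        self._expires_at = self._clock() + self._seconds


def run_steps(lease, steps):
    """Run each step in order, renewing the lease before every step after the first."""
    results = []
    for index, step in enumerate(steps):
        if index > 0:
            lease.renew()
        results.append(step())
    return results
```

With a short lease, each renewal pushes the expiry forward, so a sequence that takes longer than one lease period overall can still complete under the lock, as long as no single step outlasts the lease.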

There’s no magic answer and, as ever, it’s a series of trade-offs and you need to pick the best fit for your use case. It’s so use-case specific that it’s difficult to give general advice. However, it is reasonable to apply general common sense: try to cater for the common case and treat exceptional cases as just that, exceptional. As long as you know the fault has happened you can do something about it later. Just don’t put your head in the sand and ignore it.

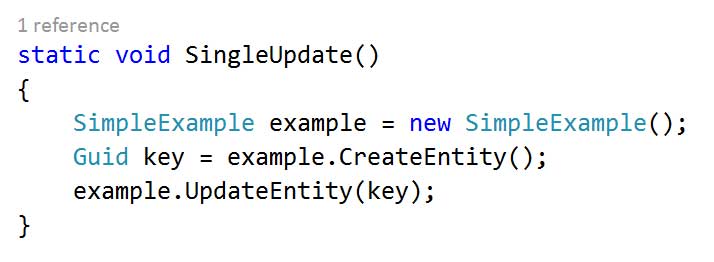

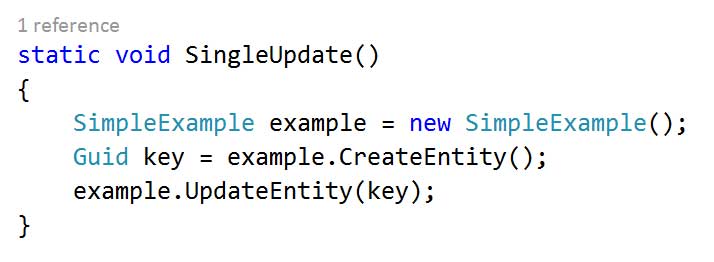

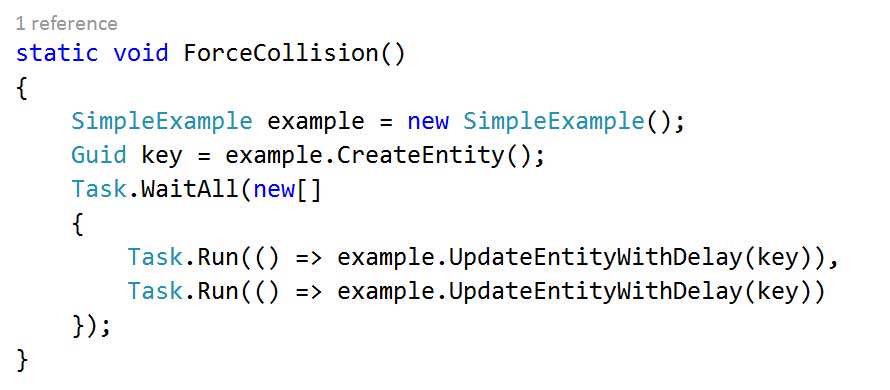

With that aside, back to our example. Run the application and have it call the SimpleExample method shown below:

At the end of this you should see the expected output in the storage emulator – an entry in table storage in the entities table and a blob with a name that matches the partition key in the leaseObjects blob container.

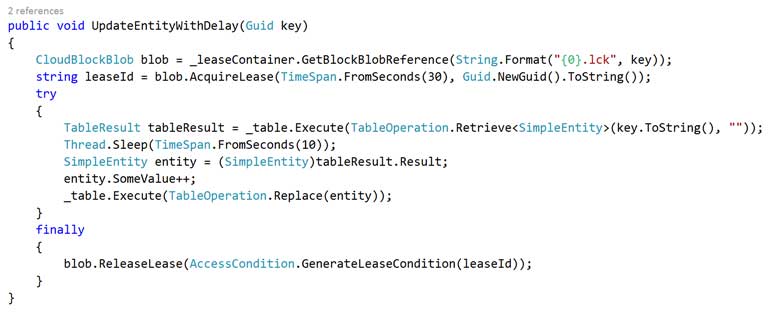

Now let’s add a method that adds a delay into the update process so we can force a collision and see what happens:

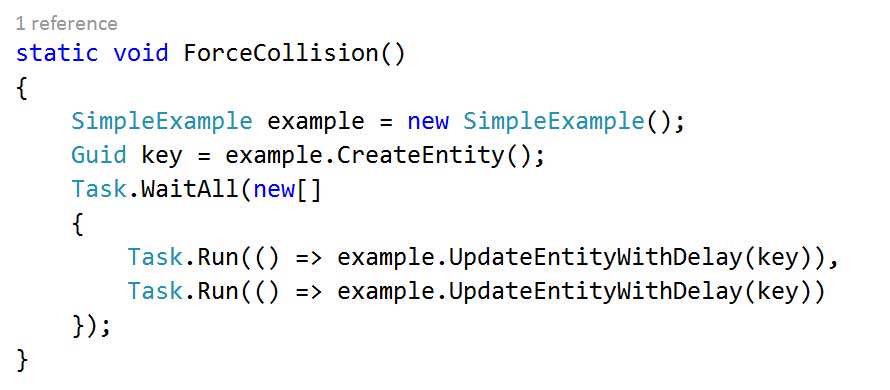

And finally let’s use that to run two updates concurrently with the task library:

You should find that a storage exception is raised on the AcquireLease line with a status code of 409 (Conflict). The lease is already held by the first update, so the second attempt to acquire it fails. Depending on your use case you may choose to fail the operation entirely, or catch the exception and use a backoff policy to retry later.
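The catch-and-retry option might look something like the following Python sketch. `LeaseConflict` is a hypothetical stand-in for the 409 storage exception, `try_acquire` is whatever callable attempts to take the lease, and the doubling delay is just one possible backoff policy.

```python
import time


class LeaseConflict(Exception):
    """Hypothetical stand-in for the 409 Conflict raised when the lease is held."""


def acquire_with_backoff(try_acquire, attempts=5, first_delay=0.5, sleep=time.sleep):
    """Call try_acquire until it succeeds, doubling the wait after each conflict."""
    delay = first_delay
    for attempt in range(attempts):
        try:
            return try_acquire()
        except LeaseConflict:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller fail the operation
            sleep(delay)
            delay *= 2
```

Capping the number of attempts keeps the choice explicit: after the last conflict the exception propagates, which is the "fail the operation entirely" branch.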

Obviously the example here is somewhat simplistic and artificial but hopefully it illustrates how you can use this technique in more complex scenarios. And you can of course use the blob lease pattern in other concurrency scenarios.

Finally – the AccidentalFish.ApplicationSupport library on GitHub contains a dependency injectable lease manager you can use to simplify your code.
