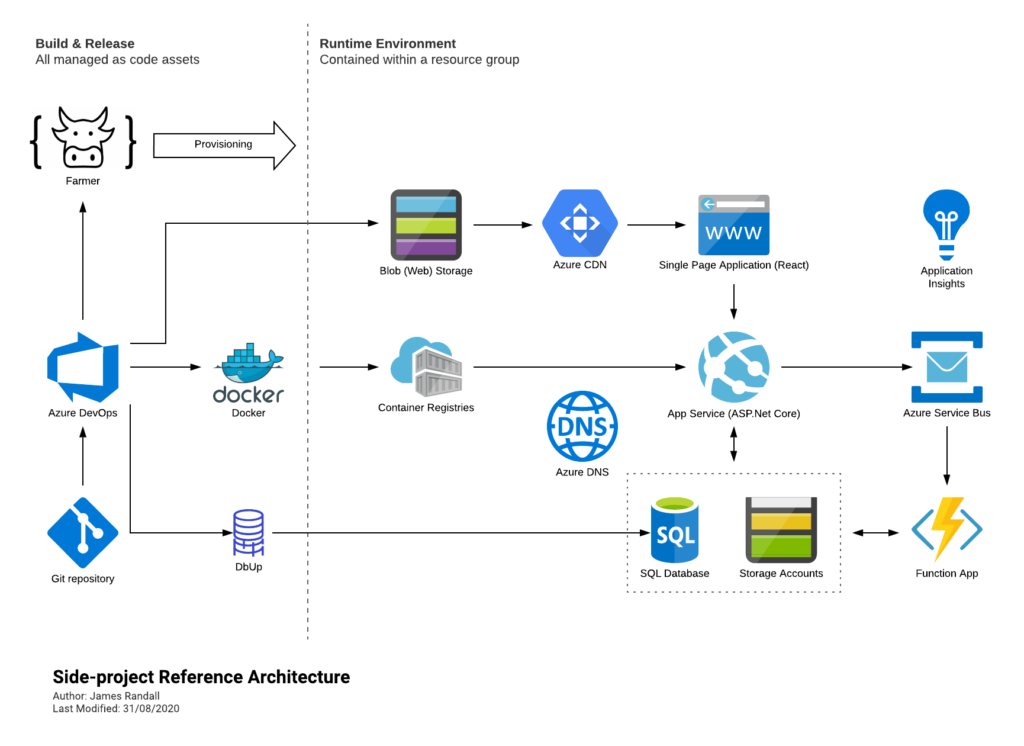

Farmer is a DSL for generating and executing ARM templates, and one of the great things about it is that it’s based on .NET Core. That means you can use it in combination with other components from the .NET ecosystem to create end-to-end, type-safe deployment solutions.

As an aside – I recently posted a critique of Microsoft’s new DSL, Bicep. One thing I didn’t mention there, but did in a series of tweets, was the shortcomings of inventing a new language that lives in its own ecosystem.

Ultimately Bicep will need to support “extension points”, or you’ll have to wrap your templates in scripts and communicate information across boundaries (of course there can be benefits to that approach too). Not to mention that the team needs to write all the tooling from scratch, and developers and administrators need to learn another language.

By taking the approach Farmer has, handling boundaries is a lot cleaner – as we’ll see – and we can take advantage of some neat language features.

In this example I’m going to provision an Azure SQL Database and then upgrade its schema using DbUp. We’ll run all of this through GitHub Actions, giving us an automated end-to-end deployment and upgrade system for our SQL database. You could do this with less F# code (almost none), but I also want to illustrate how this approach can form a nice framework for more complicated deployment scenarios, so we’re also going to look at error handling across a deployment pipeline.

As all the components themselves are well documented I’m not going to go end to end on all the detail of each component here – instead I’m going to focus on the big picture and the glue. You can find the code for the finished demonstration on GitHub here.

Starting with an F# console app, the Farmer NuGet package and the boilerplate Program.fs file, we first need to declare our Azure resources – in this case a SQL Server and a database – and then bring them together in an ARM template:

    let demoDatabase = sqlServer {
        name serverName
        admin_username "demoAdmin"
        enable_azure_firewall
        add_databases [
            sqlDb { name databaseName ; sku DbSku.Basic }
        ]
    }

    let template = arm {
        location Location.UKWest
        add_resource demoDatabase
        output "connection-string" (demoDatabase.ConnectionString databaseName)
    }

Pretty straightforward but a couple of things worth noting:

- Both serverName and databaseName are simple constants (e.g. let databaseName = "myDatabaseName") that I’ve created as I’m going to use them a couple of times.

- Opening up the database to Azure services (enable_azure_firewall) will allow the GitHub Actions Runner to access the database.

- On the final line of our arm block we output the connection string for the database so we can use it later.

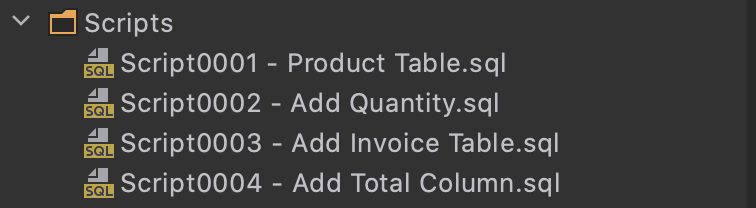

That’s our Azure resources, but how do we apply our SQL scripts to generate our schema? First we need to add the dbup-sqlserver NuGet package. With that in place, we add a Scripts folder to our solution containing, in my example, four scripts:

DbUp keeps track of the scripts it has already run and applies any new ones – essentially it’s a forward-only ladder of migrations. If you’re adding scripts of your own, make sure you mark them as Embedded Resource, otherwise DbUp won’t find them. To apply the scripts we simply need some fairly standard DbUp code like that shown below. I’ve placed this in an F# module called DbUpgrade so that, as we’ll see in a minute, we can pipe to it quite elegantly:

    module DbUpgrade

    open System.Reflection
    open DbUp

    let tryExecute =
        // Runs only when the previous pipeline step returned Ok; an Error passes straight through
        Result.bind (fun (outputs:Map<string,string>) ->
            try
                // The connection string is the output declared in the arm block
                let connectionString = outputs.["connection-string"]
                let result =
                    DeployChanges
                        .To
                        .SqlDatabase(connectionString)
                        .WithScriptsEmbeddedInAssembly(Assembly.GetExecutingAssembly())
                        .LogToConsole()
                        .Build()
                        .PerformUpgrade()
                match result.Successful with
                | true -> Ok outputs
                | false -> Error (sprintf "%s: %s" (result.Error.GetType().Name.ToUpper()) result.Error.Message)
            with _ -> Error "Unexpected error occurred upgrading database"
        )

If you’re not familiar with F# you might wonder what this Result.bind function is. F# has a wrapper type for handling success and error states called Result, along with a set of helper functions for working with it. One of the neat things about it is that it lets you chain functions together with an elegant pattern for handling failure – this is often referred to as Railway Oriented Programming.
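As a rough illustration of how Result.bind behaves, here is a minimal sketch using two made-up steps. The names provision and upgradeSchema, and the values they return, are placeholders of mine and not part of the demo code.

```fsharp
// Hypothetical stand-ins for real deployment steps (not the demo's code).
let provision (resourceGroup: string) : Result<Map<string, string>, string> =
    if resourceGroup = "" then Error "No resource group name supplied"
    else Ok (Map.ofList [ "connection-string", "Server=example" ])

let upgradeSchema (outputs: Map<string, string>) : Result<Map<string, string>, string> =
    match Map.tryFind "connection-string" outputs with
    | Some _ -> Ok outputs
    | None -> Error "connection-string output is missing"

// Result.bind calls upgradeSchema only when provision returned Ok.
// An Error from provision skips upgradeSchema and is returned unchanged.
let run resourceGroup =
    provision resourceGroup |> Result.bind upgradeSchema

printfn "%A" (run "demoResourceGroup")
printfn "%A" (run "")
```

The second call never reaches upgradeSchema: the error from the first step travels along the "failure track" to the end of the chain.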

We’ve now declared our Azure resources and we’ve got a process for applying our upgrade scripts, so we need to bring it all together and actually execute it. First let’s create our deployment pipeline, which provisions the resources and then upgrades the database:

    let deploymentPipeline = Deploy.tryExecute "demoResourceGroup" [ adminPasswordParameter ] >> DbUpgrade.tryExecute

If we had additional tasks to run in our pipeline we’d join them together with the >> operator as I’ve done here.
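To make that composition a little more concrete, here is a small sketch with invented steps. Everything in it (Outputs, Step, provision, upgradeSchema, failingUpgrade, seedData) is hypothetical; the only thing borrowed from the demo is the shape of each step, a function wrapped in Result.bind.

```fsharp
type Outputs = Map<string, string>
type Step = Result<Outputs, string> -> Result<Outputs, string>

// Hypothetical first step: plays the role of Deploy.tryExecute and starts the chain.
let provision (resourceGroup: string) : Result<Outputs, string> =
    Ok (Map.ofList [ "connection-string", sprintf "Server=%s" resourceGroup ])

// Hypothetical later steps, each wrapped in Result.bind like DbUpgrade.tryExecute.
let upgradeSchema : Step =
    Result.bind (fun (outputs: Outputs) -> Ok (outputs.Add("schema", "upgraded")))

let failingUpgrade : Step =
    Result.bind (fun (_: Outputs) -> Error "Schema upgrade failed")

let seedData : Step =
    Result.bind (fun (outputs: Outputs) -> Ok (outputs.Add("seed", "done")))

// Each step's output type matches the next step's input type, so >> joins them.
let pipeline = provision >> upgradeSchema >> seedData
let brokenPipeline = provision >> failingUpgrade >> seedData

printfn "%A" (pipeline "demoResourceGroup")
printfn "%A" (brokenPipeline "demoResourceGroup")
```

In brokenPipeline the seedData step is still part of the chain, but its function body never runs because the Error from failingUpgrade passes straight through it.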

To run the deployment we need to provide an admin password for SQL Server, which you can see in this code snippet as adminPasswordParameter, and we need to do this securely – so it can’t sit in the source code. Instead, as I’m going to be running this from GitHub Actions, an obvious place is the Secrets area of GitHub, and an easy way to make that available to our deployment console app is through an environment variable in the appropriate action (which we’ll look at later). We can then access this and format it for use with Farmer by adding this line:

    let adminPasswordParameter =
        Environment.GetEnvironmentVariable("ADMIN_PASSWORD") |> createSqlServerPasswordParameter serverName

Farmer uses a naming convention for the password parameter – I’ve built a little helper function, createSqlServerPasswordParameter, to form that up.

(We could take a number of different approaches to this – ARM parameters for example – I’ve just picked a simple mechanism for this demo)
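The helper itself isn’t shown in this post, so the following is only a guess at its shape. It assumes Farmer expects the admin password as a parameter named password-for- followed by the server name, and that parameters are passed as name/value pairs; check both against the Farmer documentation. The tryGetEnvironmentVariable function is an extra of my own that turns a missing secret into an Error rather than a null.

```fsharp
// Assumption: Farmer's convention for the SQL admin password parameter is
// "password-for-<server name>", supplied as a (name, value) pair.
let createSqlServerPasswordParameter (serverName: string) (password: string) : string * string =
    (sprintf "password-for-%s" serverName, password)

// Hypothetical addition: GetEnvironmentVariable returns null when the variable
// is missing, so surface that as an Error instead of passing null along.
let tryGetEnvironmentVariable (name: string) : Result<string, string> =
    match System.Environment.GetEnvironmentVariable name with
    | null | "" -> Error (sprintf "Environment variable %s is not set" name)
    | value -> Ok value

let parameter = createSqlServerPasswordParameter "myserver" "not-a-real-password"
printfn "%A" parameter
```

Because serverName is the first argument, the helper can be partially applied and used on the right-hand side of a pipe, as in the line above.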

Finally to invoke all this we add this line at the bottom of our file:

    template |> deploymentPipeline |> asGitHubAction

asGitHubAction is another little helper I’ve created that simply returns a 0 on success or prints a message to the console and returns a 1 in the event of an error. This will cause the GitHub Action to fail as we want.
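A helper like this can be very small. The version below is a sketch based purely on the description above, not a copy of the real one, and the wording of the printed message is my own.

```fsharp
// Sketch of an exit-code helper: 0 on success, message plus 1 on failure.
// A non-zero exit code from the console app makes the GitHub Actions step fail.
let asGitHubAction (result: Result<'a, string>) : int =
    match result with
    | Ok _ -> 0
    | Error message ->
        printfn "Deployment failed: %s" message
        1
```

As the last expression in main, its return value becomes the exit code of the process.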

That’s the code side of things done. Our finished Program.fs looks like this:

    open System
    open Farmer
    open Farmer.Builders
    open Sql
    open Constants
    open Helpers

    [<EntryPoint>]
    let main _ =
        let adminPasswordParameter =
            Environment.GetEnvironmentVariable("ADMIN_PASSWORD") |> createSqlServerPasswordParameter serverName

        let demoDatabase = sqlServer {
            name serverName
            admin_username "demoAdmin"
            enable_azure_firewall
            add_databases [
                sqlDb { name databaseName ; sku DbSku.Basic }
            ]
        }

        let template = arm {
            location Location.UKWest
            add_resource demoDatabase
            output "connection-string" (demoDatabase.ConnectionString databaseName)
        }

        let deploymentPipeline =
            Deploy.tryExecute "demoResourceGroup" [ adminPasswordParameter ]
            >> DbUpgrade.tryExecute

        template |> deploymentPipeline |> asGitHubAction

All we need to do now is wrap it up in a GitHub Action. I’ve based this action on the stock .NET Core build one – let’s take a look at it:

    name: Deploy SQL database

    on:
      push:
        branches: [ master ]
      pull_request:
        branches: [ master ]

    jobs:
      build:
        runs-on: ubuntu-latest

        steps:
        - uses: actions/checkout@v2
        - name: Setup .NET Core
          uses: actions/setup-dotnet@v1
          with:
            dotnet-version: 3.1.301
        - name: Install dependencies
          run: dotnet restore
        - name: Build
          run: dotnet build --configuration Release --no-restore
        - name: Login via Az module
          uses: azure/login@v1.1
          with:
            creds: ${{secrets.AZURE_CREDENTIALS}}
            enable-AzPSSession: true
        - name: Run
          env:
            ADMIN_PASSWORD: ${{ secrets.ADMIN_PASSWORD}}
          run: dotnet DeployDb/bin/Release/netcoreapp3.1/DeployDb.dll

If you’re familiar with GitHub Actions most of this should be fairly self-explanatory – there’s nothing special about our deployment code, it’s a standard .NET Core console app, so we begin by building it as we would any other (again, this is one of the things I like about Farmer – it’s just .NET, and if you’re using .NET there’s nothing else required). However, after building it we do a couple of things:

- To do the deployment Farmer will use the Azure CLI, so we need to log in to Azure via that. We do that in the Login via Az module step, which is pretty stock and documented on GitHub here. I’ve stored the secret for the service principal in the secrets area of GitHub.

- In the final step we run our deployment – again, it’s just a standard console app. You can see in this step the use of the env section – we take a secret we’ve called ADMIN_PASSWORD and set it as an environment variable, making it available to our console app.

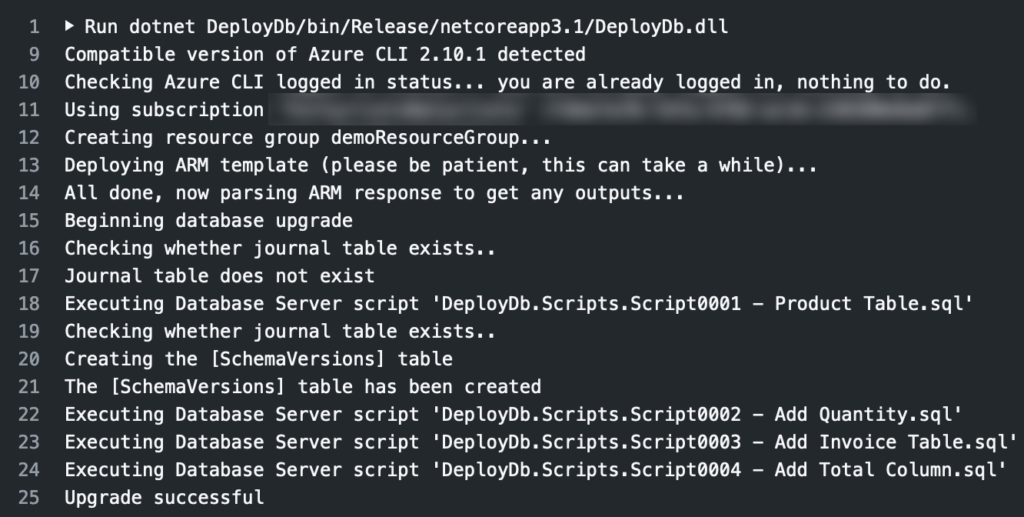

And that’s it! At this point you’ve got an automated solution that will make sure your Azure SQL database infrastructure and its schema are kept up to date. Change the configuration of your SQL database and/or add a SQL script and this will kick off and apply the changes for you. When you run it for the first time you should see output like this from the build section of the Action:

I think it’s a simple, but powerful, example of infrastructure as code and the benefits of using an existing language and ecosystem for creating DSLs – you get so much for free by doing so. And if the rest of your codebase is in .NET then with Farmer you can easily share code, whether that be simple constants and names or implementation, across your deployment and runtime environments. That’s a big win. I’m slowly adding it into my Performance for Cyclists project and the approach here is largely lifted from there.

Finally, I think it’s worth emphasising – you don’t really need to know F# to use Farmer and you certainly don’t need to be using it elsewhere in your solution. It’s a pretty simple DSL built on top of F# and a fantastic example of how good F# is as a basis for DSLs. I’ve dug a little deeper into the language here to integrate another .NET tool, but if all you want to do is generate ARM templates then, as you can see from the Farmer examples on its website, you really don’t need to get into the F# side (though I do encourage you to!).
