
With Microsoft and Apple both now beginning to use ARM chips in laptops, traditionally the domain of x86/x64 architecture, I found myself curious about the ramifications of this move, particularly by Apple, who are transitioning their entire lineup to ARM over the next two years.

While musing on the pain points of this, I found myself wondering whether Azure supported ARM processors (it doesn’t) and got pointed to AWS, which does. @thebeebs (an AWS developer advocate) mentioned that some customers had seen significant cost reductions by moving some workloads over to ARM, and so I, inevitably, became curious about how typical .NET workloads might run in comparison to x64 and set about some tests.

The Tests

I quickly rustled up a simple API containing two invocable workloads:

- A computation-heavy workload – I’m rendering a Mandelbrot and returning it as an image. This involves floating-point maths.

- A simulated await workload – often with APIs we hand off to some other system (e.g. a database) and then do a small amount of computation. I’ve simulated this with Task.Delay and a (very small) random factor to simulate the slight variations you will get with any network / remote service request and then around this I compute two tiny Mandelbrots and return a couple of numbers. It would be nice to come back at some point and use a more structured approach for the simulated remote latency.
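For readers who want to reproduce something similar outside .NET, the simulated await workload might look roughly like this in Python. It’s a minimal sketch, not the author’s F#/Giraffe code: `asyncio.sleep` stands in for `Task.Delay`, and the delay, jitter, grid size and iteration cap are hypothetical values, since the post doesn’t state them. Computing one tiny Mandelbrot before the simulated call and one after it is also an assumption about what “around this” means.

```python
import asyncio
import random

# Hypothetical parameters: the post does not state the delay or jitter used.
BASE_DELAY_S = 0.2   # simulated remote call (stand-in for Task.Delay)
JITTER_S = 0.02      # small random factor mimicking network variation


def escape_iterations(cr: float, ci: float, max_iter: int = 50) -> int:
    """Count iterations of z = z^2 + c before |z| > 2, capped at max_iter."""
    zr = zi = 0.0
    for n in range(max_iter):
        if zr * zr + zi * zi > 4.0:
            return n
        zr, zi = zr * zr - zi * zi + cr, 2.0 * zr * zi + ci
    return max_iter


def tiny_mandelbrot(width: int = 8, height: int = 8) -> int:
    """Evaluate a very small Mandelbrot grid and return the total iteration count."""
    total = 0
    for y in range(height):
        for x in range(width):
            cr = -2.0 + 3.0 * x / width
            ci = -1.5 + 3.0 * y / height
            total += escape_iterations(cr, ci)
    return total


async def simulated_workload() -> tuple[int, int]:
    first = tiny_mandelbrot()  # a little CPU work before the "remote call"
    # Simulated latency: base delay plus a small random jitter.
    await asyncio.sleep(BASE_DELAY_S + random.uniform(0, JITTER_S))
    second = tiny_mandelbrot()  # a little CPU work after it
    return first, second


if __name__ == "__main__":
    print(asyncio.run(simulated_workload()))
```

Almost all of the wall-clock time is spent awaiting, so a server can have many of these requests in flight at once, which is why this test is run at much higher client rates than the Mandelbrot one.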

I’ve written this in F# (it’s not particularly “functional”) using Giraffe on top of ASP.NET Core, just because that’s my go-to language these days. It’s running under the .NET 5 runtime.

The code for this is here. It’s not particularly elegant; I simply converted some old JavaScript code of mine into F# for the Mandelbrot. It does a job.
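The computation-heavy endpoint boils down to the classic escape-time algorithm: for each pixel, iterate z = z² + c and count how many steps it takes for |z| to exceed 2. The Python sketch below illustrates that technique; it is not a port of the author’s code, and because the post doesn’t say which image format the API returns, it emits a binary greyscale PGM purely to stay dependency-free. The viewport bounds and `max_iter` are hypothetical defaults.

```python
def escape_iterations(cr: float, ci: float, max_iter: int = 255) -> int:
    """Count iterations of z = z^2 + c before |z| > 2, capped at max_iter."""
    zr = zi = 0.0
    for n in range(max_iter):
        if zr * zr + zi * zi > 4.0:
            return n
        zr, zi = zr * zr - zi * zi + cr, 2.0 * zr * zi + ci
    return max_iter


def render_mandelbrot_pgm(width: int = 320, height: int = 240, max_iter: int = 255,
                          x_min: float = -2.5, x_max: float = 1.0,
                          y_min: float = -1.25, y_max: float = 1.25) -> bytes:
    """Render the Mandelbrot set as a binary greyscale PGM (P5) image."""
    header = f"P5 {width} {height} 255\n".encode("ascii")
    pixels = bytearray(width * height)
    for py in range(height):
        # Map the pixel row to the imaginary axis.
        ci = y_min + (y_max - y_min) * py / (height - 1)
        for px in range(width):
            # Map the pixel column to the real axis.
            cr = x_min + (x_max - x_min) * px / (width - 1)
            n = escape_iterations(cr, ci, max_iter)
            # Points that never escape (inside the set) are drawn black;
            # faster escapes are drawn brighter.
            pixels[py * width + px] = 0 if n == max_iter else 255 - (n * 255 // max_iter)
    return header + bytes(pixels)


if __name__ == "__main__":
    with open("mandelbrot.pgm", "wb") as f:
        f.write(render_mandelbrot_pgm())
```

The cost is dominated by the floating-point inner loop, which is what makes this kind of workload a reasonable CPU stress test.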

The Setup

Within AWS I created three EC2 Linux instances:

- t4g.micro – ARM-based, 2 vCPU, 1 GB memory, $0.0084 per hour

- t3.micro – x64-based, 2 vCPU, 1 GB memory, $0.0104 per hour

- t2.micro – x64-based, 1 vCPU, 1 GB memory, $0.0116 per hour

It’s worth noting that my ARM instance costs me roughly 20% less than the t3.micro.
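The “roughly 20% less” figure follows directly from the hourly prices above. A quick check in Python, using the prices copied from the list (AWS prices vary by region and change over time):

```python
# Hourly on-demand prices (USD) as listed in the post.
PRICES = {"t4g.micro": 0.0084, "t3.micro": 0.0104, "t2.micro": 0.0116}


def relative_cost(instance: str, baseline: str = "t3.micro") -> float:
    """Price of `instance` as a fraction of the `baseline` instance's price."""
    return PRICES[instance] / PRICES[baseline]


for name in PRICES:
    print(f"{name}: {relative_cost(name):.0%} of the t3.micro price")

# t4g.micro: 81% of the t3.micro price
# t3.micro: 100% of the t3.micro price
# t2.micro: 112% of the t3.micro price
```

So the t4g.micro is about 19% cheaper per hour than the t3.micro, and cheaper still than the older t2.micro.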

I’ve deliberately chosen very small instances in order to make it easier to stress them without having to sell a kidney to fund the load testing. We should be able to stress these instances quite quickly.

I then SSHed into each box, installed .NET 5 from the appropriate binaries and set up Apache as a reverse proxy. On the ARM machine I also had to install GCC and compile a version of libicui18n for .NET to work.

Next I used git clone to bring down the source and ran dotnet restore followed by dotnet run. At this point I had the same code working on each of my EC2 instances, which was easy to verify as the root of the site shows a Mandelbrot:

This was all pretty easy to set up. You can also do it using a CloudFormation sample that I was pointed at (again by @thebeebs).

I still think it’s worth remarking how much .NET has changed in the last few years: I’ve not touched Windows here and have the same source running on two different CPU architectures with no real effort on my part. Yes, it’s “get through the door” stakes these days, but it was hard to imagine this a few years back.

Benchmarks

My tests were fairly simple – I’ve used loader.io to maintain a steady state of a given number of clients per second and gathered up the response times and total execution counts along with the number of timeouts. I had the timeout threshold set at 10 seconds.
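loader.io handles this for you, but the idea of a fixed-rate (open-loop) test with a timeout is easy to sketch. The Python below is an illustration only, not how loader.io is implemented: it starts a new request every 1 / clients_per_second seconds regardless of whether earlier ones have finished, and counts any request slower than the timeout (10 seconds by default, matching the threshold above) as a timeout. The `send` coroutine is a hypothetical placeholder for whatever actually issues the HTTP request.

```python
import asyncio
import time
from dataclasses import dataclass, field
from typing import Awaitable, Callable


@dataclass
class LoadResult:
    latencies_ms: list[float] = field(default_factory=list)  # successful requests only
    timeouts: int = 0


async def run_constant_rate(send: Callable[[], Awaitable[None]],
                            clients_per_second: int,
                            duration_s: float,
                            timeout_s: float = 10.0) -> LoadResult:
    result = LoadResult()

    async def one_request() -> None:
        start = time.perf_counter()
        try:
            await asyncio.wait_for(send(), timeout=timeout_s)
            result.latencies_ms.append((time.perf_counter() - start) * 1000)
        except asyncio.TimeoutError:
            result.timeouts += 1

    loop = asyncio.get_running_loop()
    interval = 1.0 / clients_per_second
    begin = loop.time()
    tasks = []
    for i in range(int(clients_per_second * duration_s)):
        # Start request i on schedule, however slow earlier responses are.
        delay = begin + i * interval - loop.time()
        if delay > 0:
            await asyncio.sleep(delay)
        tasks.append(asyncio.create_task(one_request()))
    await asyncio.gather(*tasks)
    return result
```

Because new requests keep arriving on schedule even when the server falls behind, response times climb and timeouts appear once an instance saturates, which is the pattern visible in the higher-load results.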

Time allowing, I will come back to this and run some percentile analysis; loader.io doesn’t support this, so I would need to do some additional work.
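Percentiles need the individual response times rather than the min/max/average summary reported here. Given a list of per-request latencies, the nearest-rank method is one common and simple definition. A minimal Python sketch; the sample values are illustrative, not measured data:

```python
import math


def percentile_nearest_rank(samples: list[float], p: float) -> float:
    """Smallest sample such that at least p% of all samples are <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


latencies_ms = [15, 20, 35, 40, 50]  # illustrative values, not measured data
print(percentile_nearest_rank(latencies_ms, 50))   # 35
print(percentile_nearest_rank(latencies_ms, 100))  # 50
```

Other definitions (for example linear interpolation between ranks) give slightly different answers on small samples, so it’s worth stating which one is used when reporting p95 or p99 figures.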

I’ve run the test several times and averaged the results – though they were all in the same ballpark.

Mandelbrot

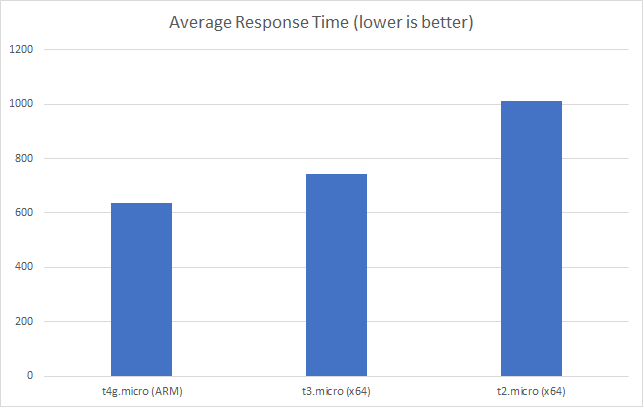

First, as a baseline, let’s look at things running with just two clients per second:

With little going on, we can see that the ARM instance already has a slight advantage: it’s consistently (min, max and average) around 100ms faster than the closest x64-based instance, the t3.micro (638ms versus 744ms on average).

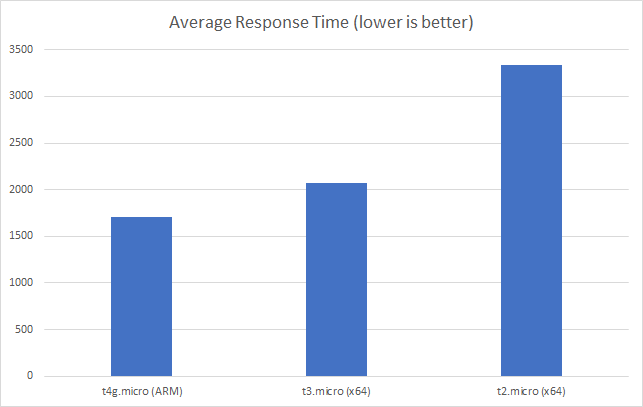

Unsurprisingly, if we push things a little harder to 5 clients per second, this becomes magnified:

We’re getting no errors or timeouts at this point, and you can see the total throughput over the 30-second run below:

The ARM instance has completed around 20% more requests than the nearest x64 instance, with an 18% improvement in average response time and at 80% of the cost.

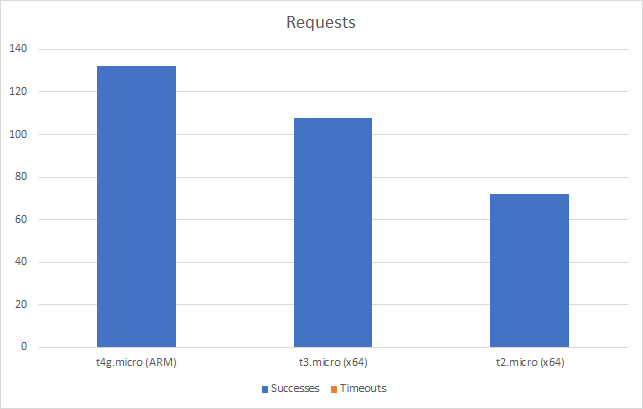

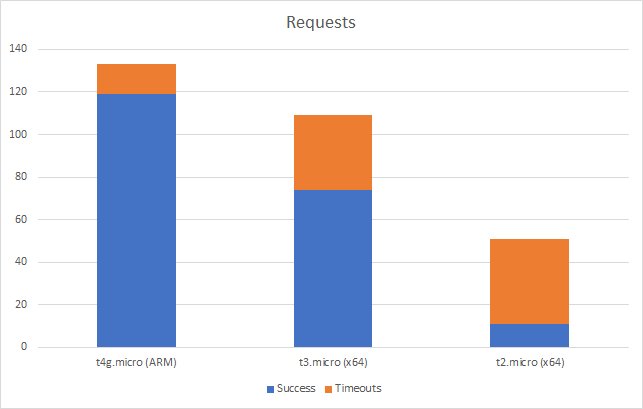

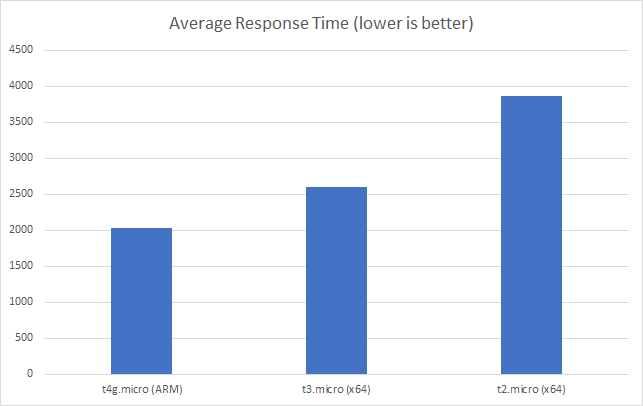

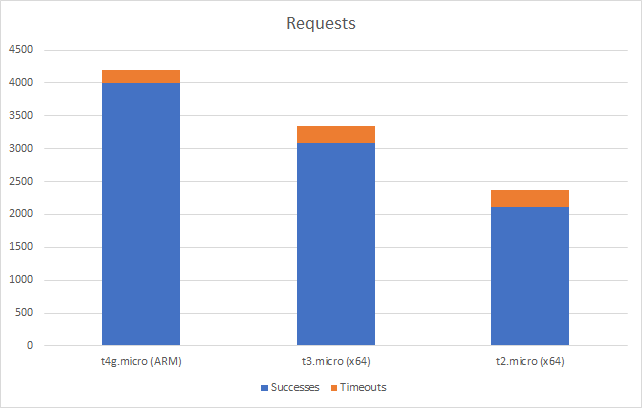

And if we push this out to 20 clients per second (my largest scale test) the ARM instance looks better again:

It’s worth noting that at this point all three instances are generating timeouts in our load test suite, but again the ARM instance wins out: we get fewer timeouts and get through more overall requests:

You can see from this that our ARM instance is performing much better under this level of load. We can say that:

- It successfully completed 60% more requests than the nearest x64 instance

- It has a roughly 12% improvement on average response time

- And it is doing this at 80% of the cost of the x64 instance
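Those headline figures can be recomputed from the 20-clients-per-second Mandelbrot rows in the Full Results table together with the hourly prices. A quick Python check:

```python
# Mandelbrot rows at 20 clients per second (Full Results table) plus hourly prices.
arm = {"avg_ms": 7459, "ok": 119, "price": 0.0084}  # t4g.micro
t3 = {"avg_ms": 8495, "ok": 74, "price": 0.0104}    # t3.micro

more_requests = arm["ok"] / t3["ok"] - 1      # extra successful requests
faster = 1 - arm["avg_ms"] / t3["avg_ms"]     # reduction in average response time
cost_ratio = arm["price"] / t3["price"]       # ARM price relative to t3

print(f"{more_requests:.0%} more successful requests, "
      f"{faster:.0%} lower average response time, "
      f"{cost_ratio:.0%} of the hourly price")
# 61% more successful requests, 12% lower average response time, 81% of the hourly price
```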

With our Mandelbrot test it’s clear that the ARM instance has a consistent advantage in both performance and cost.

Simulated Async Workload

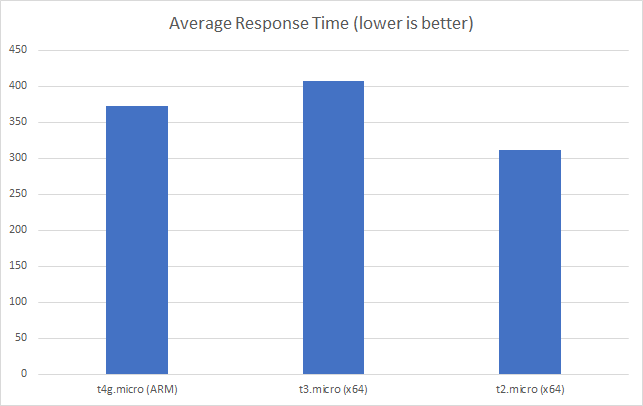

Starting again with a low-scale test (in this case 50 clients per second, as this test spends significant time awaiting), we can see that our t2 x64 instance had an advantage of around 40ms:

However, if we move up to 100 clients per second, the t2 instance essentially collapses while our t4g ARM instance and t3 x64 instance are level pegging (286ms and 292ms respectively):

We get no timeouts at this point, and our ARM and t3 x64 instances level peg again on total requests (2,994 each):

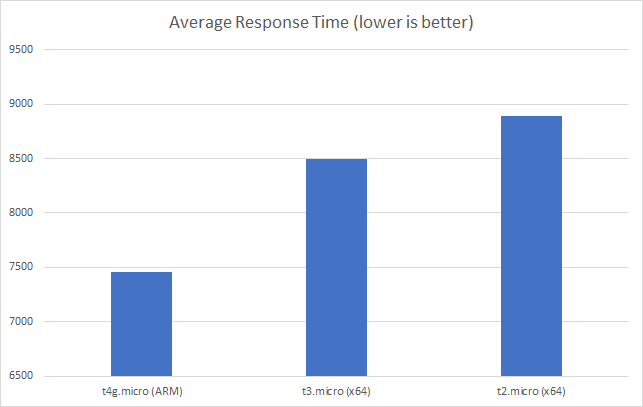

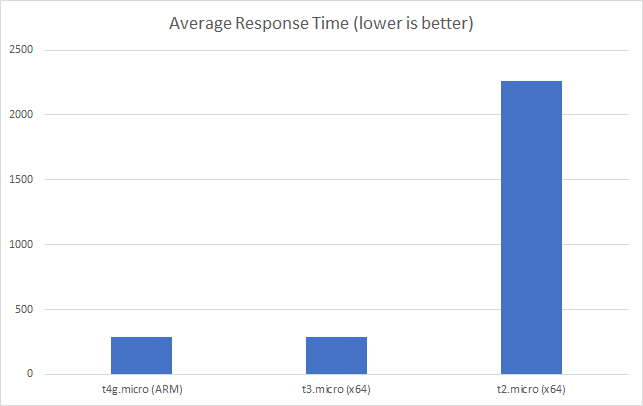

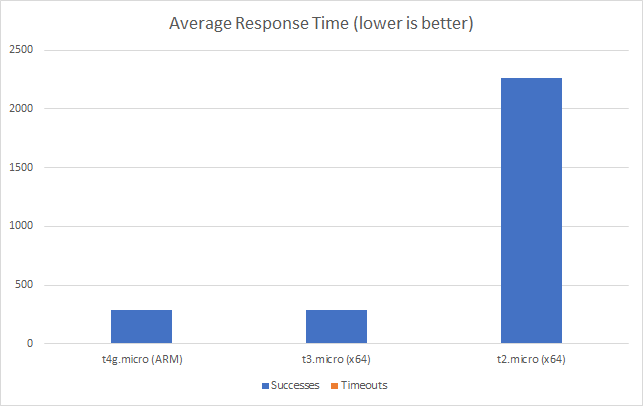

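However, if we push on to a higher-scale test (200 clients per second), the ARM instance begins to pull ahead: it completes 3,995 successful requests with 200 timeouts and a 2,028ms average response time, against 3,089 requests, 260 timeouts and 2,598ms for the t3.micro.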

Conclusions

Going into this I really didn’t know what to expect, but these fairly simple tests suggest there is an economic advantage to running under ARM in the cloud. At worst you will see comparable performance at a lower price point, but for some workloads you may see a significant performance gain, again at a lower price point.

A 20% performance gain at 80% of the price is most certainly not to be sniffed at, and for large workloads it could quickly offset the cost of moving infrastructure to ARM.
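Put another way, the two effects compound: work per dollar scales with the performance ratio divided by the cost ratio. A small Python illustration, first with the round numbers from the sentence above and then with the 5-clients-per-second Mandelbrot rows (using successful requests as the performance measure, which is a simplification):

```python
def work_per_dollar_ratio(perf_ratio: float, cost_ratio: float) -> float:
    """Relative work per dollar of a new option versus a baseline."""
    return perf_ratio / cost_ratio


# Round numbers: 20% more performance at 80% of the price.
print(f"{work_per_dollar_ratio(1.20, 0.80):.2f}x")  # 1.50x

# Mandelbrot at 5 clients/s: 132 vs 108 successful requests, $0.0084 vs $0.0104 per hour.
print(f"{work_per_dollar_ratio(132 / 108, 0.0084 / 0.0104):.2f}x")  # 1.51x
```

In other words, roughly half as much work again for every dollar spent.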

Presumably the price savings are due to the power efficiency of the ARM chips. What is hard to tell, however, is how much of the pricing is “early adopter” pricing to encourage people to move to CPUs that have a long-term advantage for cloud vendors (even minor power efficiency gains across cloud-scale data centers must add up to significant numbers on the bottom line), and how much of it will be sustained and passed on to users in the long term.

Doubtless we’ll land somewhere in the middle.

The question I have now is: where the heck is Azure in all this? Between Lambda and ARM on AWS, it’s hard not to feel as if the portability advantages of .NET Core / 5, both processor and OS, are being realised more effectively by Amazon than by Microsoft themselves. Strange times.

Full Results

| Test | Instance | Clients per second | Min (ms) | Max (ms) | Average (ms) | Successful responses | Timeouts |
|---|---|---|---|---|---|---|---|
| Mandelbrot | t4g.micro (ARM) | 2 | 618 | 751 | 638 | 60 | 0 |
| Mandelbrot | t4g.micro (ARM) | 5 | 765 | 2794 | 1709 | 132 | 0 |
| Mandelbrot | t4g.micro (ARM) | 10 | 761 | 6958 | 3882 | 130 | 0 |
| Mandelbrot | t4g.micro (ARM) | 15 | 759 | 10203 | 5704 | 127 | 1 |
| Mandelbrot | t4g.micro (ARM) | 20 | 802 | 10207 | 7459 | 119 | 14 |
| Mandelbrot | t3.micro (x64) | 2 | 701 | 885 | 744 | 60 | 0 |
| Mandelbrot | t3.micro (x64) | 5 | 878 | 3313 | 2069 | 108 | 0 |
| Mandelbrot | t3.micro (x64) | 10 | 855 | 8037 | 4498 | 103 | 0 |
| Mandelbrot | t3.micro (x64) | 15 | 973 | 10202 | 6930 | 84 | 9 |
| Mandelbrot | t3.micro (x64) | 20 | 1030 | 10215 | 8495 | 74 | 35 |
| Mandelbrot | t2.micro (x64) | 2 | 675 | 1140 | 1010 | 58 | 0 |
| Mandelbrot | t2.micro (x64) | 5 | 651 | 5324 | 3332 | 72 | 0 |
| Mandelbrot | t2.micro (x64) | 10 | 1867 | 10193 | 6999 | 56 | 8 |
| Mandelbrot | t2.micro (x64) | 15 | 1445 | 10203 | 9458 | 32 | 44 |
| Mandelbrot | t2.micro (x64) | 20 | 1486 | 10206 | 8895 | 11 | 40 |
| Async | t4g.micro (ARM) | 20 | 236 | 371 | 275 | 600 | 0 |
| Async | t4g.micro (ARM) | 50 | 222 | 4178 | 373 | 1498 | 0 |
| Async | t4g.micro (ARM) | 100 | 231 | 414 | 286 | 2994 | 0 |
| Async | t4g.micro (ARM) | 200 | 310 | 17388 | 2028 | 3995 | 200 |
| Async | t3.micro (x64) | 20 | 233 | 402 | 279 | 600 | 0 |
| Async | t3.micro (x64) | 50 | 235 | 4912 | 407 | 1498 | 0 |
| Async | t3.micro (x64) | 100 | 235 | 545 | 292 | 2994 | 0 |
| Async | t3.micro (x64) | 200 | 234 | 17376 | 2598 | 3089 | 260 |
| Async | t2.micro (x64) | 20 | 242 | 412 | 298 | 600 | 0 |
| Async | t2.micro (x64) | 50 | 241 | 545 | 312 | 1497 | 0 |
| Async | t2.micro (x64) | 100 | 244 | 9829 | 2260 | 1989 | 0 |
| Async | t2.micro (x64) | 200 | 347 | 17375 | 3858 | 2118 | 252 |
